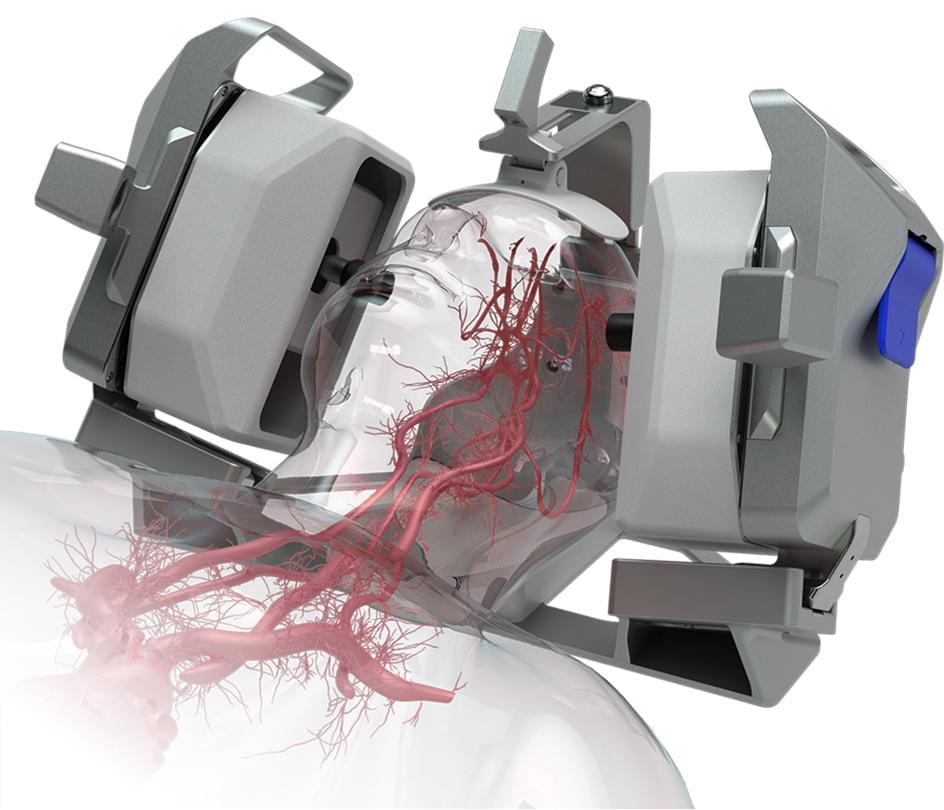

Will drone deliveries be a practical part of our future? We visit Wing's test facilities to see how its engineers and aircraft designers developed a drone and a drone fleet control system that are already in operation in parts of the world. Here's how their VTOL drone works, and what it's like to both load and receive a package carried by an autonomous aircraft!

Shot by Joey Fameli and edited by Norman Chan.

Additional footage courtesy of Wing.

Music by Jinglepunks.

Join this channel to support Tested and get access to perks:

https://www.youtube.com/channel/UCiDJtJKMICpb9B1qf7qjEOA/join

Tested Ts, stickers, mugs and more: https://tested-store.com

Subscribe for more videos (and click the bell for notifications): https://www.youtube.com/subscription_center?add_user=testedcom

Twitter: http://www.twitter.com/testedcom

Facebook: http://www.facebook.com/testedcom

Instagram: https://www.instagram.com/testedcom/

Discord: https://www.discord.gg/tested

Amazon Storefront: http://www.amazon.com/shop/adamsavagestested?tag=lifeboatfound-20

Savage Industries T-shirts: https://cottonbureau.com/stores/savage-merchandising#/shop

Tested is: