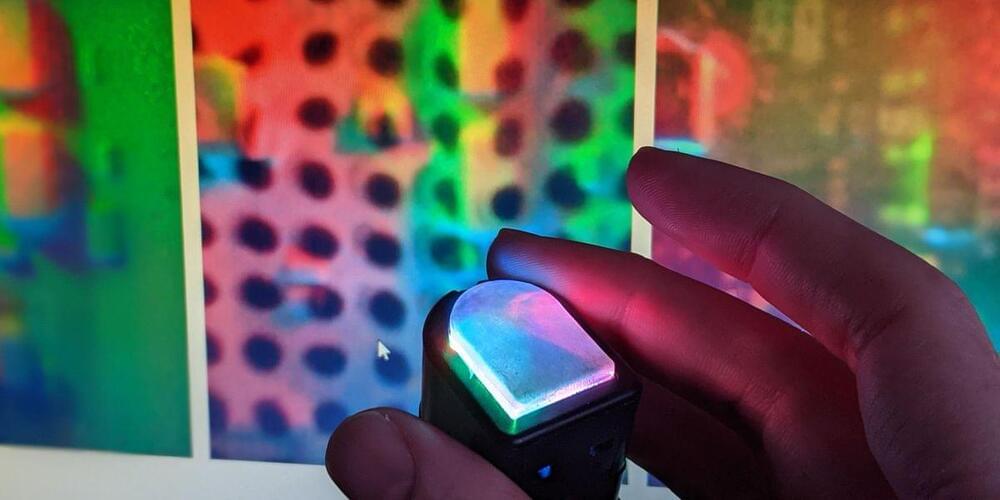

With the release of the most powerful artificial intelligence accelerator chip, future AI models like OpenAI’s GPT-4 could surpass the human brain in scale by supporting more than 100 trillion parameters. This new chip, made by Cerebras Systems, is also the biggest chip ever made by a long shot, and can thus support exaflop supercomputers for AI model training.

Cerebras Systems is an American semiconductor company with offices in Silicon Valley, San Diego, Toronto, and Tokyo. Cerebras builds computer systems for complex artificial intelligence and deep learning applications.

–

If you enjoyed this video, please consider rating it and subscribing to our channel for more frequent uploads. Thank you!

–

TIMESTAMPS:

00:00 The biggest AI Chip ever made.

01:29 The world of AI Acceleration Chips.

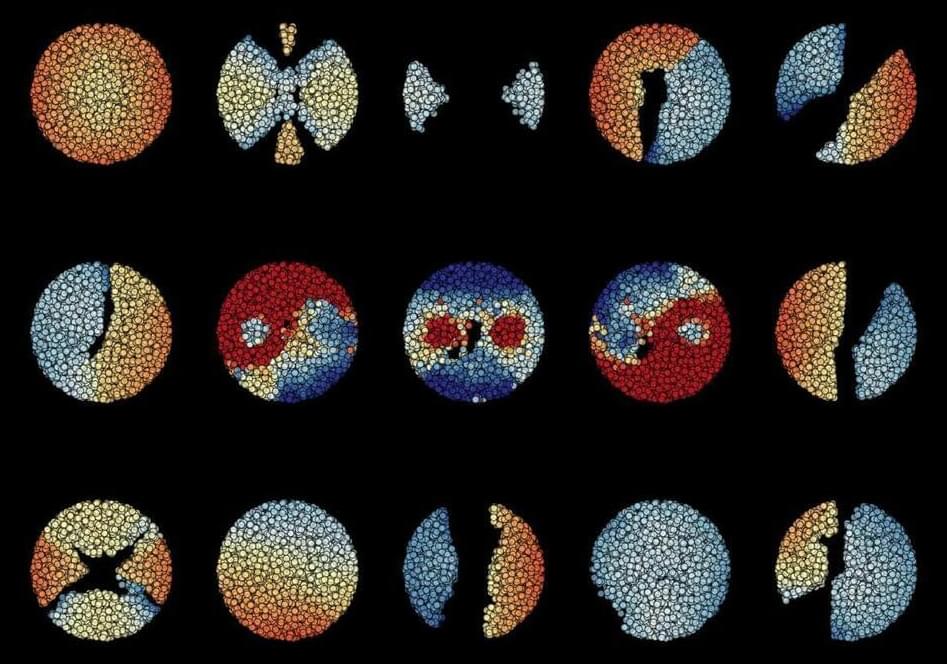

02:33 An Artificial Brain.

03:53 How does Cerebras’ Technology work?

06:12 What does this enable?

07:56 Last Words.

–

#gpt #openai #ai