The future is here.

Hints of a new particle carrying a fifth force of nature have been multiplying at the LHC – and many physicists are convinced this could finally be the big one.

Machine learning algorithms are finding new applications in game development. NPCs driven by machine learning, running on dedicated machine learning processors, have made it possible to have a virtual player.

Study reveals the different ways the brain parses information through interactions of waves of neural activity.

An AI asks Putin whether AI could also be president.

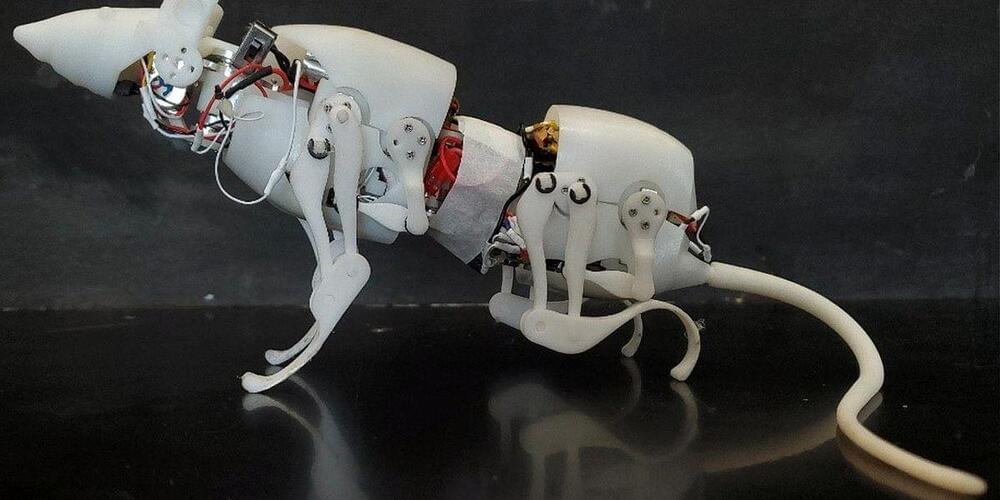

To SpaceX and Tesla CEO Elon Musk, the future is teeming with humanoid robots.

During a lengthy and freewheeling conversation with TED’s Chris Anderson last week, Musk expanded on his vision of what it could look like to share everyday life with automatons doing our bidding.

And, predictably, the conversation jumped directly to the sexual implications of humanoid androids. Despite his busy personal schedule, Musk clearly does have some time to daydream.

UK researchers have developed a small, flexible, snake-like “magnetic tentacle robot” to navigate deep into the lungs.

Labeling data can be a chore. It’s the main source of sustenance for computer-vision models; without it, they’d have a lot of difficulty identifying objects, people, and other important image characteristics. Yet producing just an hour of tagged and labeled data can take a whopping 800 hours of human time. Our high-fidelity understanding of the world develops as machines can better perceive and interact with our surroundings. But they need more help.

Scientists from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL), Microsoft, and Cornell University have attempted to solve this problem plaguing vision models by creating “STEGO,” an algorithm that can jointly discover and segment objects without any human labels at all, down to the pixel.

STEGO learns something called “semantic segmentation”—fancy speak for the process of assigning a label to every pixel in an image. Semantic segmentation is an important skill for today’s computer-vision systems because images can be cluttered with objects. Even more challenging is that these objects don’t always fit into literal boxes; algorithms tend to work better for discrete “things” like people and cars as opposed to “stuff” like vegetation, sky, and mashed potatoes. A previous system might simply perceive a nuanced scene of a dog playing in the park as just a dog, but by assigning every pixel of the image a label, STEGO can break the image into its main ingredients: a dog, sky, grass, and its owner.
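To make "assigning a label to every pixel" concrete, here is a minimal sketch in plain Python. It is not STEGO's method: it swaps in ordinary k-means clustering on raw pixel colour as a stand-in for label-free grouping, and the function name `segment_pixels`, the toy 4x4 "sky over grass" image, and the colour values are all assumptions made for illustration.

```python
def segment_pixels(image, k, iters=10):
    """Give every pixel one of k cluster ids using plain k-means on colour.

    Illustrative stand-in only; this is NOT the STEGO algorithm.
    `image` is a list of rows, each row a list of (r, g, b) tuples.
    """
    height, width = len(image), len(image[0])
    pixels = [px for row in image for px in row]

    # Deterministic init: take k pixels evenly spaced through the image.
    step = (len(pixels) - 1) / max(k - 1, 1)
    centers = [list(pixels[round(i * step)]) for i in range(k)]

    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    labels = [0] * len(pixels)
    for _ in range(iters):
        # Assignment step: each pixel joins its nearest center.
        labels = [min(range(k), key=lambda j: sq_dist(px, centers[j]))
                  for px in pixels]
        # Update step: each center moves to the mean colour of its pixels.
        for j in range(k):
            members = [px for px, lab in zip(pixels, labels) if lab == j]
            if members:
                centers[j] = [sum(ch) / len(members) for ch in zip(*members)]

    # Reshape the flat label list back into an image-shaped grid.
    return [labels[r * width:(r + 1) * width] for r in range(height)]


# Hypothetical toy scene: top half sky-blue, bottom half grass-green.
SKY = (0.4, 0.6, 1.0)
GRASS = (0.1, 0.8, 0.2)
image = [[SKY] * 4 for _ in range(2)] + [[GRASS] * 4 for _ in range(2)]

label_map = segment_pixels(image, k=2)
for row in label_map:
    print(row)
```

The result is a grid the same size as the image, with one cluster id per pixel and no human labels involved. The ids are arbitrary numbers; attaching names such as "sky" or "grass" to them is a separate step that this sketch does not attempt.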

Alan DeRossett: Putin propaganda is dividing opinions on Elon Musk for helping Ukraine and standing up to the fossil fuel industry.

Walter Lynsdale: I’m all for people making billions through technical advancement (Teslas, SpaceX rockets, the Dojo chip are all pretty cool), but he comes out with a fair amount of doublespeak:

“people aren’t having enough babies” vs “we can make a humanoid robot”…


Shubham Ghosh Roy shared a link.

❤️ Check out Lambda here and sign up for their GPU Cloud: https://lambdalabs.com/papers.

📝 The paper “Hierarchical Text-Conditional Image Generation with CLIP Latents” is available here:

https://openai.com/dall-e-2/

https://www.instagram.com/openaidalle/

❤️ Watch these videos in early access on our Patreon page or join us here on YouTube:

- https://www.patreon.com/TwoMinutePapers.

- https://www.youtube.com/channel/UCbfYPyITQ-7l4upoX8nvctg/join.

🙏 We would like to thank our generous Patreon supporters who make Two Minute Papers possible:

Aleksandr Mashrabov, Alex Balfanz, Alex Haro, Andrew Melnychuk, Angelos Evripiotis, Benji Rabhan, Bryan Learn, B Shang, Christian Ahlin, Eric Martel, Gordon Child, Ivo Galic, Jace O’Brien, Javier Bustamante, John Le, Jonas, Jonathan, Kenneth Davis, Klaus Busse, Lorin Atzberger, Lukas Biewald, Matthew Allen Fisher, Michael Albrecht, Michael Tedder, Nikhil Velpanur, Owen Campbell-Moore, Owen Skarpness, Paul F, Rajarshi Nigam, Ramsey Elbasheer, Steef, Taras Bobrovytsky, Ted Johnson, Thomas Krcmar, Timothy Sum Hon Mun, Torsten Reil, Tybie Fitzhugh, Ueli Gallizzi.

If you wish to appear here or pick up other perks, click here: https://www.patreon.com/TwoMinutePapers.

Thumbnail background design: Felícia Zsolnai-Fehér — http://felicia.hu.
