But then someone has to write these AI programs, so it will create more job opportunities for programmers.

Artificial Intelligence (AI) has been transforming multiple industries and affecting many aspects of our daily lives. Its most prominent role so far has been in automating manual processes. We will therefore look at how AI has affected software testing, and automated testing in particular.

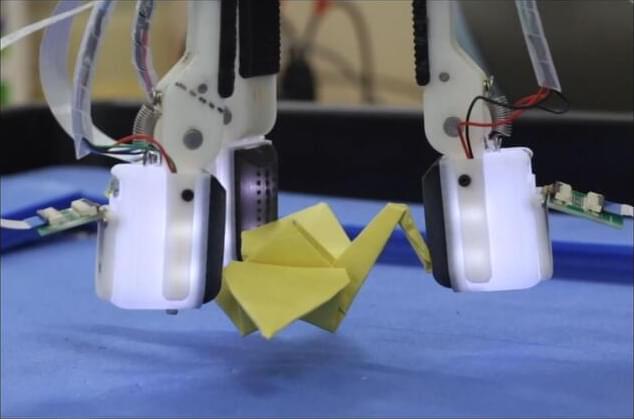

Software testing is the process of assessing a program to check whether it has been developed according to the client’s requirements, and to find faults and fix them before the program is deemed ready for use.

Each time developers add new code, new tests must be carried out, and QAs have to spend significant time ensuring that the new code does not break the existing codebase. Regression testing cycles are extremely time-consuming when performed manually and can place a heavy burden on QAs.
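To make the idea of a regression check concrete, here is a minimal sketch of an automated regression suite using Python's built-in `unittest` module. The function `apply_discount` and its expected values are hypothetical, invented purely for illustration; the point is only that behavior which already works is pinned down in tests that can be rerun after every code change instead of being rechecked by hand.

```python
import unittest


def apply_discount(price, percent):
    """Hypothetical existing function whose behavior must not change."""
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountRegressionTest(unittest.TestCase):
    # Each case records behavior that already works today, so a later
    # code change that alters it will make the suite fail.
    def test_typical_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount_leaves_price_unchanged(self):
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_full_discount_is_free(self):
        self.assertEqual(apply_discount(50.0, 100), 0.0)


def run_regression_suite():
    """Run every regression case and report whether all of them passed."""
    loader = unittest.defaultTestLoader
    suite = loader.loadTestsFromTestCase(ApplyDiscountRegressionTest)
    result = unittest.TextTestRunner(verbosity=0).run(suite)
    return result.wasSuccessful()
```

Calling `run_regression_suite()` after each change returns `True` only if the existing behavior is intact, which is the kind of repetitive check that is costly to perform manually.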