The classical neurophysiological approach to calculating PCI, power spectral density, and the spectral exponent relies on many epochs to improve the reliability of the statistical estimates of these indices21. However, these methods characterize only averaged brain states and can therefore clarify only general neurophysiological properties. Machine learning (ML) makes it possible to decode and identify specific brain states and to discriminate them from unrelated brain signals, even from a single trial in real time22. This can potentially turn group-level statistical results into individual predictions9. Deep neural networks, a popular ML approach, have been employed to classify or predict brain states from EEG data23. In particular, convolutional neural networks (CNNs) are among the most extensively used deep learning techniques and have proven effective in classifying EEG data24. However, a CNN does not by itself explain why it made a particular prediction25. Recently, layer-wise relevance propagation (LRP) has been used successfully to explain why classifiers such as CNNs made a specific decision26. Specifically, the relevance score produced by LRP indicates the contribution of each input variable to the classification or prediction. A high score in a particular region of the input therefore implies that the classifier relied on that feature to reach its decision. For example, neurophysiological data suggest that the left motor region is activated during right-hand motor imagery27. When a neural network classifies EEG data as right-hand motor imagery, LRP assigns higher relevance scores to the left motor region than to other regions, indicating that the decision was driven by activity in that region28. Thus, LRP makes it possible to interpret the neurophysiological phenomena underlying the decisions of CNNs.
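To make the relevance-score idea concrete, the following is a minimal sketch of the LRP-ε rule applied to a toy two-layer ReLU network in Python with NumPy. It is not the CNN or the LRP configuration used in this work: the network is fully connected and bias-free, and its weights are hypothetical, hand-set so that only channel 1 (standing in for a left motor channel) drives the output score. The input is a flattened channel × time epoch, and per-channel relevance is obtained by summing the relevance over time points.

```python
import numpy as np

def stabilize(z, eps):
    # LRP-epsilon stabilizer: push denominators away from zero while keeping their sign
    return z + np.where(z >= 0, eps, -eps)

def lrp_epsilon(x, W1, W2, target, eps=1e-9):
    """Relevance of each input for output `target` of a bias-free
    two-layer ReLU network (toy stand-in for a CNN), via the LRP-epsilon rule."""
    # Forward pass, keeping every layer's activations
    z1 = x @ W1              # hidden pre-activations
    h = np.maximum(z1, 0.0)  # ReLU
    z2 = h @ W2              # output scores (logits)

    # Start from the score of the target class only
    R2 = np.zeros_like(z2)
    R2[target] = z2[target]

    # Output layer -> hidden layer
    s2 = R2 / stabilize(z2, eps)
    R1 = h * (s2 @ W2.T)

    # Hidden layer -> input layer (hidden pre-activations are the denominators)
    s1 = R1 / stabilize(z1, eps)
    R0 = x * (s1 @ W1.T)
    return R0, z2[target]

n_channels, n_times, n_hidden = 4, 5, 3
rng = np.random.default_rng(0)
x = rng.uniform(0.1, 1.0, size=n_channels * n_times)  # synthetic flattened epoch

# Hypothetical weights: only channel 1 feeds the hidden units, which all vote for class 0
W1 = np.zeros((n_channels * n_times, n_hidden))
W1[1 * n_times:2 * n_times, :] = 0.5
W2 = np.array([[1.0, -1.0]] * n_hidden)

R, score = lrp_epsilon(x, W1, W2, target=0)
channel_relevance = R.reshape(n_channels, n_times).sum(axis=1)
print(channel_relevance)
```

Because the other channels have zero weights, they receive exactly zero relevance, and the input relevances sum approximately to the output score (relevance conservation). This conservation is what allows the scores to be read as each input's contribution to the decision.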

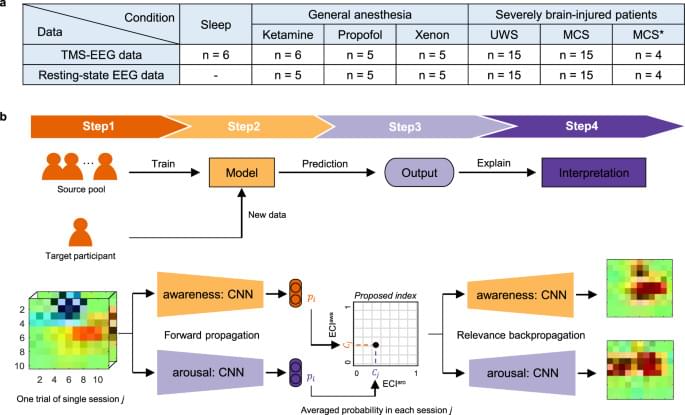

In this work, we develop a metric, called the explainable consciousness indicator (ECI), that uses a CNN to simultaneously quantify the two components of consciousness: arousal and awareness. The processed time-series EEG data were used as input to the CNN. Unlike PCI, which relies on source modeling and permutation-based statistical analysis, ECI uses sensor-level event-related potentials, which capture spatiotemporal dynamics, together with ML approaches. To obtain a generalized model, we used a leave-one-participant-out (LOPO) approach for transfer learning, a type of ML that transfers information learned from other participants to a new participant not included in the training phase24,27. The proposed indicator is a two-dimensional value consisting of an arousal indicator (ECIaro) and an awareness indicator (ECIawa). First, to cover each component of consciousness (i.e., low/high arousal and low/high awareness), we used TMS–EEG data collected from healthy participants during NREM sleep with no subjective experience, REM sleep with subjective experience, and healthy wakefulness, and analyzed the correlations between the proposed ECI and these three states. Next, we measured ECI using TMS–EEG data collected under general anesthesia with ketamine, propofol, and xenon, again to assess its correlation with these three anesthetic conditions. Before anesthesia, TMS–EEG data were also recorded during healthy wakefulness. Upon awakening, the participants reported conscious experience during ketamine-induced anesthesia and no conscious experience during propofol- and xenon-induced anesthesia. Finally, TMS–EEG data were collected from patients with disorders of consciousness (DoC), including patients diagnosed with UWS and with MCS. We hypothesized that the proposed ECI would clearly distinguish between the two components of consciousness under physiological, pharmacological, and pathological conditions.
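The LOPO scheme can be sketched as a split generator that, in each fold, holds out every epoch from one participant and trains on the epochs of all others. This is a minimal NumPy sketch assuming that each epoch is tagged with a participant ID; the function name and the IDs are hypothetical, and the transfer-learning model itself is not shown.

```python
import numpy as np

def leave_one_participant_out(participant_ids):
    """Yield (participant, train_idx, test_idx); each participant is held out once."""
    ids = np.asarray(participant_ids)
    for p in np.unique(ids):
        test_mask = ids == p
        # Train on all epochs from the other participants, test on the held-out one
        yield p, np.flatnonzero(~test_mask), np.flatnonzero(test_mask)

# Hypothetical example: 7 epochs recorded from 3 participants
participant_ids = ["s01", "s01", "s02", "s02", "s02", "s03", "s03"]
folds = list(leave_one_participant_out(participant_ids))
for p, train_idx, test_idx in folds:
    print(p, "train:", train_idx.tolist(), "test:", test_idx.tolist())
```

Splitting by participant rather than by epoch keeps every epoch of the test participant out of training, so each fold estimates performance on an unseen individual. scikit-learn's `LeaveOneGroupOut` produces the same kind of split when the participant IDs are passed as `groups`.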

To validate the proposed indicator, we next compared ECIawa with PCI, a reliable index of consciousness. We then applied ECI to additional resting-state EEG data acquired from the anesthetized participants and from patients with DoC. We hypothesized that if the CNN can learn characteristics related to consciousness, it should compute ECI accurately within the proposed framework even without TMS. For clinical applicability, it is important that a classifier already trained on previously collected data with LOPO can classify new data without additional training. Therefore, we computed ECI in patients with DoC using a hold-out approach29, in which the training and evaluation data are divided into separate sets in advance, instead of cross-validation. Finally, to interpret ECI, we used LRP to investigate why the classifier made its decisions30.
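The difference between hold-out evaluation and cross-validation can be illustrated with a classifier that is fitted once and then applied, frozen, to a new cohort. In this sketch, a nearest-centroid classifier is a hypothetical stand-in for the trained CNN, and the two-class feature sets are synthetic; only the train-once, evaluate-on-unseen-data pattern reflects the approach described above.

```python
import numpy as np

class NearestCentroid:
    """Hypothetical stand-in for the trained CNN: labels a sample by its closest class mean."""

    def fit(self, X, y):
        self.classes_ = np.unique(y)
        self.centroids_ = np.stack([X[y == c].mean(axis=0) for c in self.classes_])
        return self

    def predict(self, X):
        # Distance from every sample to every centroid, shape (n_samples, n_classes)
        d = np.linalg.norm(X[:, None, :] - self.centroids_[None, :, :], axis=2)
        return self.classes_[np.argmin(d, axis=1)]

rng = np.random.default_rng(1)

def synthetic_set(n_per_class):
    """Synthetic two-class features (e.g. 0 = unconscious, 1 = conscious); not real data."""
    X = np.vstack([rng.normal(0.0, 1.0, (n_per_class, 3)),
                   rng.normal(5.0, 1.0, (n_per_class, 3))])
    y = np.repeat([0, 1], n_per_class)
    return X, y

# Hold-out: fit once on the previously collected data ...
X_old, y_old = synthetic_set(50)
model = NearestCentroid().fit(X_old, y_old)

# ... then apply the frozen model to a new cohort, with no further training
X_new, y_new = synthetic_set(20)
accuracy = np.mean(model.predict(X_new) == y_new)
print(f"hold-out accuracy: {accuracy:.2f}")
```

Unlike cross-validation, which refits the model once per fold, the model here is never refit, so the evaluation set plays the role of genuinely new patients.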