PhonlamaiPhoto/iStock.

The Hubbard Model

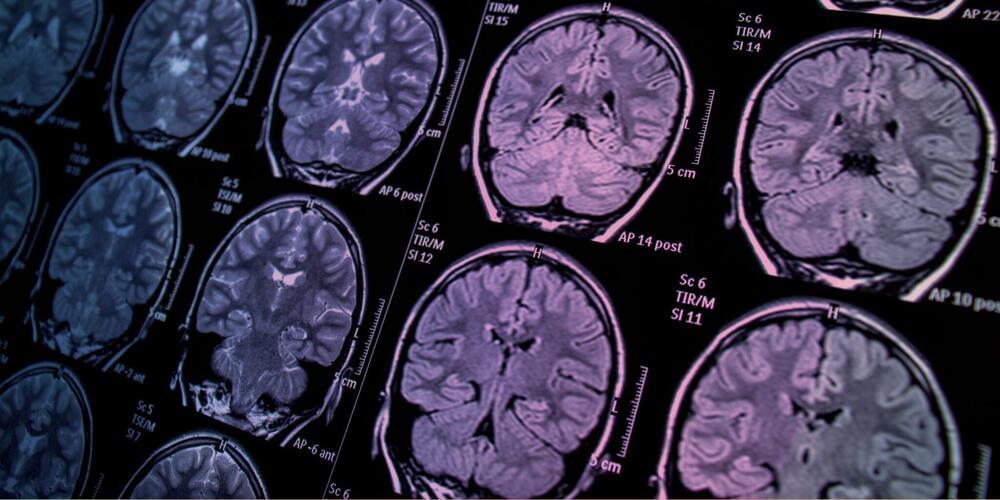

Since the infancy of functional magnetic resonance imaging (fMRI) in 1990, people have been fascinated by the potential for brain scans to unlock the mysteries of the human mind, our behaviors and beliefs. Many breathtaking applications for brain scans have been devised, but hype often exceeds what empirical science can deliver. It’s time to ask: What’s the big picture of neuroscience and what are the limitations of brain scans?

The specific aims of any research endeavor depend on who you ask and what funding agency is involved, says Michael Spezio, associate professor of psychology, data science and neuroscience at Scripps College. Some people believe neuroscience has the potential to explain human cognition and behavior as a fully mechanistic process, ultimately debunking an “illusion of free will.” Not all neuroscientists agree that free will is a myth, but it’s a strong current these days. Neuroscience also has applications in finance, artificial intelligence, weapons research and national security.

For other researchers and funders, the specific aim of neuroscience involves focusing on medical imaging, genetics, the study of proteins (proteomics) and the study of neural connections (connectomics). As caring persons who are biological, neurological, physical, social and spiritual, we can use neuroscience to think carefully and understand our humanity and possible ways to escape some of the traps we’ve built for ourselves, says Spezio. Also, brain scans can enhance research into spirituality, mindfulness and theory of mind – the awareness of emotions, values, empathy, beliefs, intentions and mental states to explain or predict others’ behavior.

Our brains are among the most complex objects in the known universe. Deciphering how they work could bring tremendous benefits, from finding ways to treat brain diseases and neurological disorders to inspiring new forms of machine intelligence.

But a critical starting point is coming up with a parts list. While everyone knows that brains are primarily made up of neurons, there is a dazzling array of different types of these cells. That’s not to mention the various kinds of glial cells that make up the connective tissue of the brain and play a crucial supporting role.

That’s why the National Institutes of Health’s BRAIN Initiative has just announced $500 million in funding over five years for an effort to characterize and map neuronal and other types of cells across the entire human brain. The project will be spearheaded by the Allen Institute in Seattle, but involves collaborations across 17 other institutions in the US, Europe, and Japan.

Michael Levin is a biologist at Tufts University working on novel ways to understand and control complex pattern formation in biological systems.

EPISODE LINKS:

Michael’s Twitter: https://twitter.com/drmichaellevin

Michael’s Website: https://drmichaellevin.org

Michael’s Papers:

Biological Robots: https://arxiv.org/abs/2207.00880

Synthetic Organisms: https://tandfonline.com/doi/full/10.1080/19420889.2021.2005863

Limb Regeneration: https://science.org/doi/10.1126/sciadv.abj2164

OUTLINE:

0:00 — Introduction.

1:40 — Embryogenesis.

9:08 — Xenobots: biological robots.

22:55 — Sense of self.

32:26 — Multi-scale competency architecture.

43:57 — Free will.

53:27 — Bioelectricity.

1:06:44 — Planaria.

1:18:33 — Building xenobots.

1:42:08 — Unconventional cognition.

2:06:39 — Origin of evolution.

2:13:41 — Synthetic organisms.

2:20:27 — Regenerative medicine.

2:24:13 — Cancer suppression.

2:28:15 — Viruses.

2:33:28 — Cognitive light cones.

2:38:03 — Advice for young people.

2:42:47 — Death.

2:52:17 — Meaning of life.

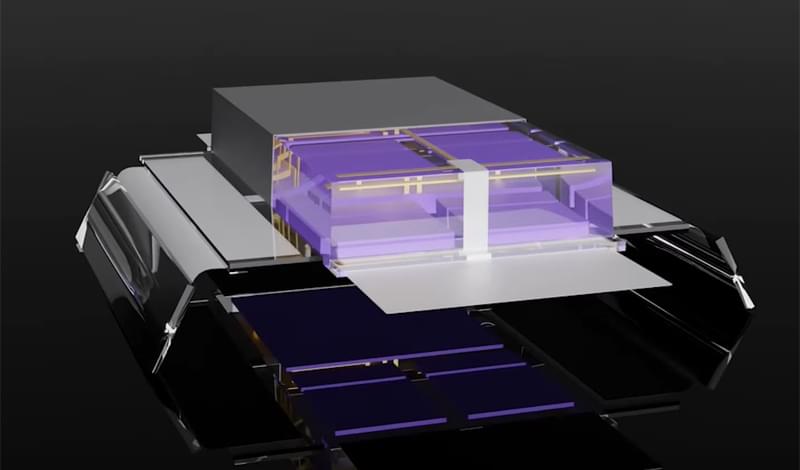

A collaborative effort has succeeded in upgrading solar-powered microbots, each of which now has its own built-in miniature computer, allowing them to walk autonomously without external control.

Cornell researchers and others had previously developed microscopic machines able to crawl, swim, walk, and fold themselves up. However, these always had “strings” attached: to generate motion, they needed wires to provide electrical current, or laser beams focused directly onto specific parts of the robots.

“Before, we literally had to manipulate these ‘strings’ in order to get any kind of response from the robot,” explained Itai Cohen, Professor at Cornell’s Department of Physics. “But now that we have these brains on board, it’s like taking the strings off the marionette. It’s like when Pinocchio gains consciousness.”

A new method allows MIT’s Mini Cheetah to learn how to run fast and adapt to walking on challenging terrain. This learning-based method outperforms previous human-designed methods and allowed the Mini Cheetah to set a record for speed.

More info: https://news.mit.edu/2022/3-questions-how-mit-mini-cheetah-learns-run-fast-0317

Visit the project page at https://sites.google.com/view/model-free-speed/

The work was supported by the DARPA Machine Common Sense Program, Naver Labs, the MIT Biomimetic Robotics Lab, and the NSF AI Institute for Artificial Intelligence and Fundamental Interactions. The research was conducted at the Improbable AI Lab.

Video edited by Tom Buehler.

Let your cargo follow you while you travel comfortably with the gita plus cargo-carrying robot. Double the size of the gita mini robot, it comes with pedestrian etiquette, which makes it a good fit for families who need larger cargo space, business owners, or anyone who wants an extra set of hands. Its sleek design is distinctive. The robot also has a built-in speaker, so you can use the mygita app to stream music from your smartphone. With the help of cameras and radar technology, it can see its surroundings and pair with its user; pairing takes just one tap. It stands and self-balances, braking automatically when needed and adjusting its speed to keep pace along the way.

What does this mean for the field of robotics?

Some inventions are so strange they simply cannot help but catch the eye. Such is the case with David Bowen’s plant machete, first reported by designboom.

Robotics has come a long way, as shown by this project, in which a robotic arm is controlled by the electrical signals produced by a plant. Could this application be scaled up to allow for brain-controlled movement?

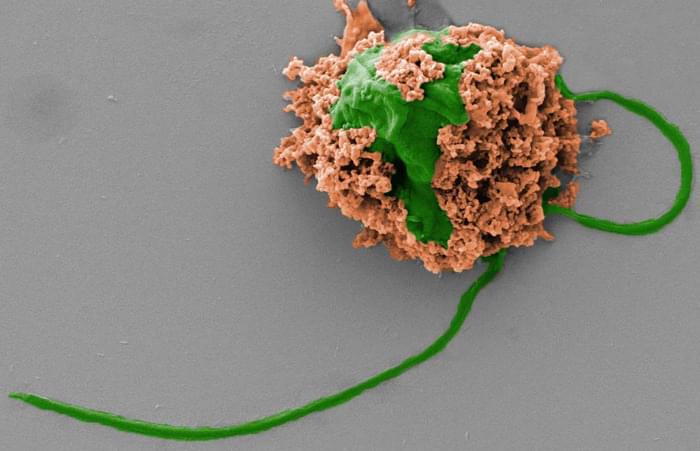

The last decade has brought a lot of attention to the use of microscopic robots (microrobots or nanorobots) for biomedical applications. Now, nanoengineers have developed microrobots that can swim around in the lungs and deliver medication to treat bacterial pneumonia. A new study shows that the microrobots safely eliminated pneumonia-causing bacteria in the lungs of mice and resulted in 100% survival. By contrast, untreated mice all died within three days after infection.

The results are published in Nature Materials in the paper “Nanoparticle-modified microrobots for in vivo antibiotic delivery to treat acute bacterial pneumonia.”

The microrobots are made using click chemistry to attach antibiotic-loaded neutrophil membrane-coated polymeric nanoparticles to natural microalgae. The hybrid microrobots could be used for the active delivery of antibiotics in the lungs in vivo.

Scientists have been able to direct a swarm of microscopic swimming robots to clear out pneumonia microbes in the lungs of mice, raising hopes that a similar treatment could be developed to treat deadly bacterial pneumonia in humans.

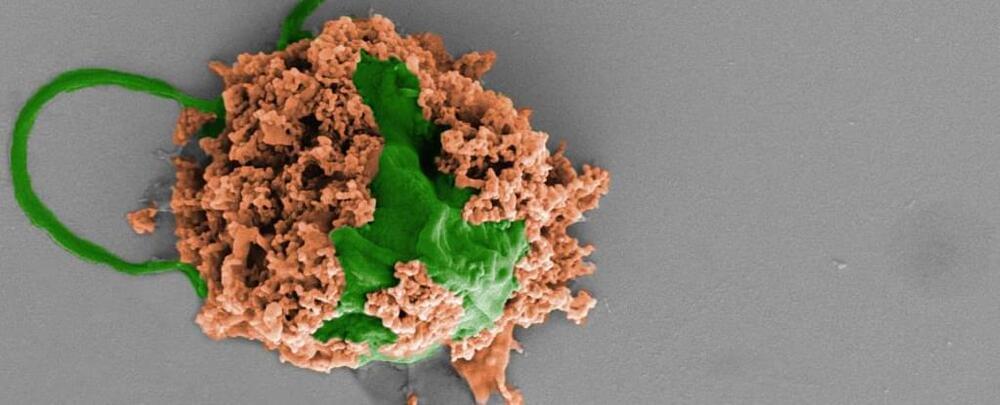

The microbots are made from algae cells and covered with a layer of antibiotic nanoparticles. The algae provide movement through the lungs, which is key to the treatment being targeted and effective.

In experiments, the infections in the mice treated with the algae bots all cleared up, whereas the mice that weren’t treated all died within three days.