https://youtube.com/watch?v=CWQTqw-fMrc&feature=share

✅ Instagram: https://www.instagram.com/pro_robots

You are on the PRO Robots channel, and in this video we present the latest technology news. GITEX 2022, billed as the world's largest exhibition of robots and future technologies, was held in Dubai. It featured revolutionary developments, innovative technologies, futuristic solutions, robots, and driverless cars. We have picked the most interesting exhibits and compiled them into one video. Watch to the end and subscribe to the channel. Let's fly!

00:00 Intro

00:28 500 companies at the exhibition

00:59 Ameca robot

01:34 XTURISMO Limited Edition flying motorcycle

02:01 The largest flying vehicle

02:56 SkyDrive flying cars

03:29 Cadillac InnerSpace autonomous electric car

04:13 Surgical microscope

05:29 Endiatx's PillBot

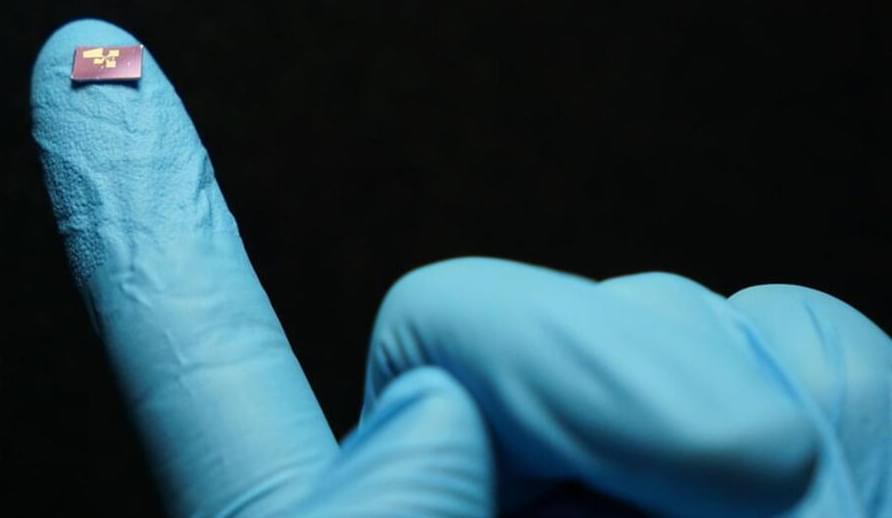

06:06 Mini robot

06:21 Promobot at the show

07:03 Robosculptor

07:32 Cruise Origin

07:47 Taxi microbus

08:12 Thermite RS3 robot firefighter from Howe & Howe

08:42 FlyCam's Neo octocopter

09:16 Swiss-Mile

09:56 Mwafeq, a four-legged robot

10:22 Ottonomy's Ottobot mobile robots

10:45 Acer SpatialLabs

11:08 Pepper robots

#prorobots #robots #robot #futuretechnologies #robotics

More interesting and useful content:

✅ Elon Musk Innovation https://www.youtube.com/playlist?list=PLcyYMmVvkTuQ-8LO6CwGWbSCpWI2jJqCQ

✅ Future Technologies Reviews https://www.youtube.com/playlist?list=PLcyYMmVvkTuTgL98RdT8-z-9a2CGeoBQF

✅ Technology news

https://www.facebook.com/PRO.Robots.Info

#prorobots #technology #roboticsnews