Category: robotics/AI – Page 1,552

Meta’s newest AI determines proper protein folds 60 times faster

Life on Earth would not exist as we know it if not for the protein molecules that enable critical processes from photosynthesis and enzymatic degradation to sight and our immune system. And like most facets of the natural world, humanity has only just begun to discover the multitudes of protein types that actually exist. But rather than scour the most inhospitable parts of the planet in search of novel microorganisms that might have a new flavor of organic molecule, Meta researchers have developed a first-of-its-kind metagenomic database, the ESM Metagenomic Atlas, that could accelerate existing protein-folding AI performance by 60x.

Metagenomics is just coincidentally named. It is a relatively new, but very real, scientific discipline that studies “the structure and function of entire nucleotide sequences isolated and analyzed from all the organisms (typically microbes) in a bulk sample.” Often used to identify the bacterial communities living on our skin or in the soil, these techniques are similar in function to gas chromatography, wherein you’re trying to identify what’s present in a given sample system.

Similar databases have been launched by the NCBI, the European Bioinformatics Institute, and the Joint Genome Institute, and have already cataloged billions of newly uncovered protein shapes. What Meta is bringing to the table is “a new protein-folding approach that harnesses large language models to create the first comprehensive view of the structures of proteins in a metagenomics database at the scale of hundreds of millions of proteins,” according to a Tuesday release from the company. The problem is that, while advances in genomics have revealed the sequences for slews of novel proteins, just knowing what those sequences are doesn’t actually tell us how they fit together into a functioning molecule, and figuring it out experimentally takes anywhere from a few months to a few years. Per molecule. Ain’t nobody got time for that.

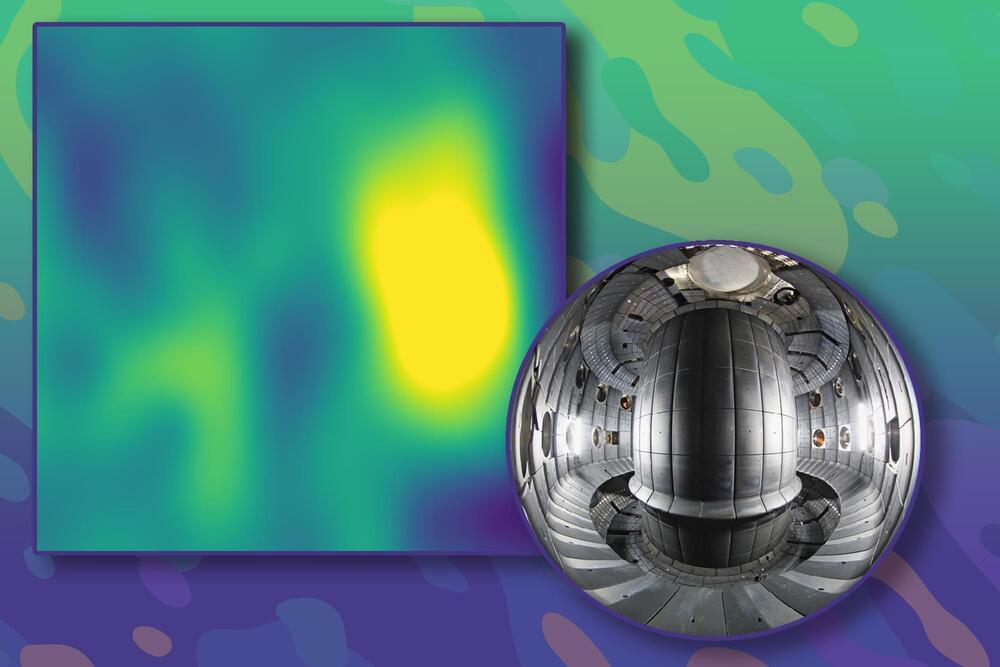

Machine learning facilitates “turbulence tracking” in fusion reactors

He and his co-authors took four well-established computer vision models, which are commonly used for applications like autonomous driving, and trained them to tackle this problem.

Simulating blobs

To train these models, they created a vast dataset of synthetic video clips that captured the blobs’ random and unpredictable nature.

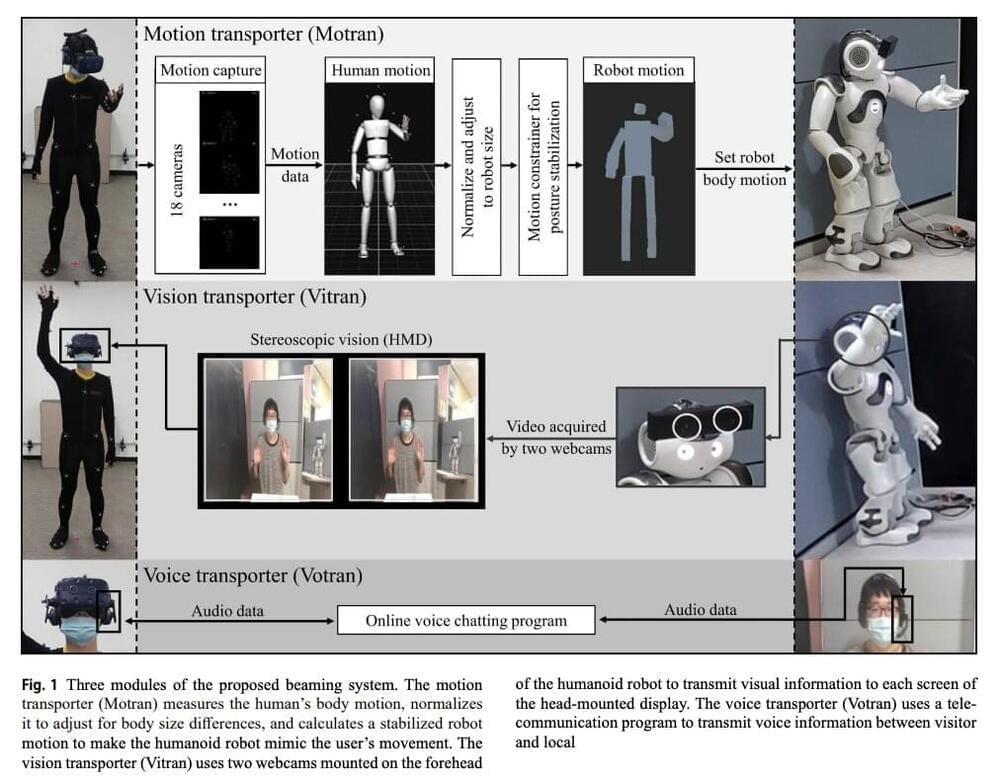

A system that allows users to communicate with others remotely while embodying a humanoid robot

Recent technological advancements are opening new and exciting opportunities for communicating with others and visiting places remotely. These advancements include telepresence robots, moving robotic systems that allow users to virtually navigate remote environments and interact with people in these environments.

Researchers at Hanyang University and Duksung Women’s University in South Korea have recently developed a promising telepresence system based on a humanoid robot, a head-mounted display, a motion transporter, a voice transporter, and a vision transporter system.

This system, introduced in a paper published in the International Journal of Social Robotics, allows users to take full-body ownership of a humanoid robot’s body, thus accessing remote environments and interacting with both humans and objects in these environments as if they were physically there.

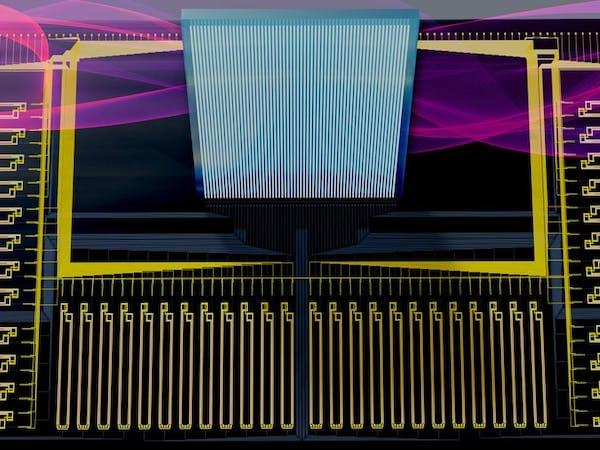

Learning at the Speed of Light

Of course, running a state-of-the-art machine learning model with billions of parameters is not exactly easy when memory is measured in kilobytes. But with some creative thinking and a hybrid approach that blends the power of the cloud with the advantages of tinyML, it may just be possible. A team of researchers at MIT has shown how with a method called Netcast, which relies on heavily resourced cloud computers to rapidly retrieve model weights from memory, then transmit them nearly instantaneously to the tinyML hardware via a fiber optic network. Once those weights are transferred, an optical device called a broadband “Mach-Zehnder” modulator combines them with sensor data to perform lightning-fast calculations locally.

The team’s solution makes use of a cloud computer with a large amount of memory to retain the weights of a full neural network in RAM. Those weights are streamed to the connected device as they are needed through an optical pipe with enough bandwidth to transfer an entire feature-length movie in a single millisecond. Limited memory is one of the biggest factors preventing tinyML devices from executing large models, but it is not the only one. Processing power is also at a premium on these devices, so the researchers also proposed a solution to this problem in the form of a shoebox-sized receiver that performs super-fast analog computations by encoding input data onto the transmitted weights.

This scheme makes it possible to perform trillions of multiplications per second on a device that is resourced like a desktop computer from the early 1990s. In the process, it enables on-device machine learning that ensures privacy, minimizes latency, and is highly energy efficient. Netcast was tested on image classification and digit recognition tasks with over 50 miles separating the tinyML device and cloud resources. After only a small amount of calibration work, average accuracy rates exceeding 98% were observed. Results of this quality are sufficiently good for use in commercial products.
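The division of labor described above, where the cloud holds every weight and the device holds only what it is currently using, can be sketched in software. This is a minimal analogy under stated assumptions, not the MIT team's implementation: the real system performs the multiplications optically, and the names `stream_weights` and `edge_forward`, the ReLU activation, and the toy network are all hypothetical choices made for illustration.

```python
def stream_weights(layers):
    """Simulated cloud side: yield one layer's weight matrix at a time.

    `layers` is a list of weight matrices (lists of rows). In the article's
    setup this transfer happens over fiber; here it is just a generator.
    """
    for weights in layers:
        yield weights


def edge_forward(inputs, weight_stream):
    """Simulated device side: keeps only the current activations in memory.

    Each streamed layer is combined with the local data by multiply-accumulate
    and then discarded, so the full model never resides on the device.
    """
    activations = list(inputs)
    for weights in weight_stream:
        # One dot product per output neuron (the multiply-accumulate step).
        outputs = [sum(w * a for w, a in zip(row, activations)) for row in weights]
        # ReLU nonlinearity (an assumption; the article does not name one).
        activations = [max(0.0, v) for v in outputs]
    return activations


if __name__ == "__main__":
    # Toy two-layer network: 2 inputs -> 2 hidden units -> 1 output.
    toy_layers = [
        [[1.0, -1.0], [0.5, 0.5]],
        [[2.0, 1.0]],
    ]
    print(edge_forward([3.0, 1.0], stream_weights(toy_layers)))
```

Because the generator hands over one layer at a time, the device-side memory footprint depends on the largest single layer rather than on the whole model, which is the property the article attributes to streaming weights from the cloud.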

Half Company designs transport network that combines cable cars with AI

A network of autonomous cable cars transports people and goods around a city in this concept developed by transport design studio Half Company.

Halfgrid is a proposal for a city-wide transport system that uses artificial intelligence (AI) to move suspended capsules to their designated location.

It is billed as “a fully autonomous transit system for people and goods, running on a separate layer above the ground and based on the smallest unit possible — an individual person-sized pod”.

‘Humans Will Live In Metaverse Soon’, Claims Mark Zuckerberg. What About Reality?

It mentions that Mark believes that people will migrate to the Metaverse and leave reality behind.

Here’s my conversation with Mark Zuckerberg, including a few opening words from me on Ukraine, Putin, and war. We talk about censorship, freedom, mental health, Social Dilemma, Instagram whistleblower, mortality, meaning & the future of the Metaverse & AI. https://www.youtube.com/watch?v=5zOHSysMmH0

Lex Fridman (@lexfridman), February 26, 2022

Reports suggest that Meta intends to spend the next five to ten years creating an immersive virtual environment that includes fragrance, touch, and sound to allow users to lose themselves in virtual reality. In a recent update on future plans, Zuckerberg unveiled an AI system that can be used to construct fully personalized worlds of a user’s own design, and he is working on higher-fidelity avatars and spatial audio to make communication simpler.

One of the Biggest Problems in Biology Has Finally Been Solved

There’s an age-old adage in biology: structure determines function. In order to understand the function of the myriad proteins that perform vital jobs in a healthy body—or malfunction in a diseased one—scientists have to first determine these proteins’ molecular structure. But this is no easy feat: protein molecules consist of long, twisty chains of up to thousands of amino acids, chemical compounds that can interact with one another in many ways to take on an enormous number of possible three-dimensional shapes. Figuring out a single protein’s structure, or solving the protein-folding problem, can take years of finicky experiments.

But earlier this year an artificial intelligence program called AlphaFold, developed by the Google-owned company DeepMind, predicted the 3D structures of almost every known protein, about 200 million in all. DeepMind CEO Demis Hassabis and senior staff research scientist John Jumper were jointly awarded this year’s $3-million Breakthrough Prize in Life Sciences for the achievement, which opens the door for applications that range from expanding our understanding of basic molecular biology to accelerating drug development.

DeepMind developed AlphaFold soon after its AlphaGo AI made headlines in 2016 by beating world Go champion Lee Sedol at the game. But the goal was always to develop AI that could tackle important problems in science, Hassabis says. DeepMind has made the structures of proteins from nearly every species for which amino acid sequences exist freely available in a public database.

Artificial intelligence might be able to treat various brain disorders

Researchers at the University of Toronto are combining artificial intelligence and microelectronics to create innovative technology that is safe and effective. The research team wants to incorporate neural implants into miniature silicon chips in a way similar to how the chips used in today’s computers are manufactured.