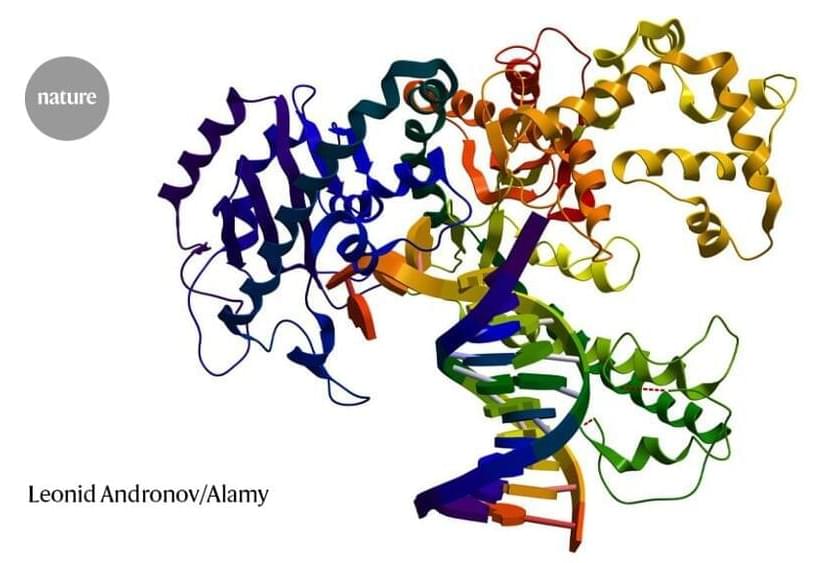

Two years after DeepMind’s revolutionary AI swept a competition for predicting protein structures, researchers are building on AlphaFold’s success.

Artificial intelligence (AI) is transforming the Federal Government—and it’s up to you to make it work for your mission. Booz Allen can help. We deliver custom, secure solutions that allow government to responsibly unlock its potential.

Our AI experts, scientists, domain experts, and consultants understand the technologies that make AI possible, the policies that make it necessary, the business cases that make it strategic, and the solutions that make it powerful.

These Remote Carriers will supplement manned aircraft and support pilots in their tasks and missions.

A group led by Airbus has successfully tested the launch and operation of a Remote Carrier flight test demonstrator, a modified Airbus Do-DT25 drone, from a flying A400M.

The project, jointly developed with Germany’s Bundeswehr, the German Aerospace Center DLR, and German companies SFL and Geradts, aims to supplement the upcoming European sixth-generation fighter jet, part of the Future Combat Air System (FCAS). “Multiplying the force and extending the range of unmanned systems will be one of the future roles of Airbus’ military transport aircraft in the FCAS,” Airbus said in a release.

The rules will go into effect starting in 2023.

China has issued rules and guidelines that regulate the use of artificial intelligence within the country. The regulations take a cautious approach to AI, covering trending chatbots such as ChatGPT, AI-generated art, the many uses of AI in the health care sector, and artificial intelligence in general.

The Cyberspace Administration of China (CAC), the country’s internet regulator and censor, has issued a set of rules to follow when incorporating AI. The agency released guidelines on “deep synthesis”. The regulatory measures will take effect on Jan. 10, 2023.

The conditions regarding AI, or “deep synthesis”.

Less than two weeks ago, OpenAI released ChatGPT, a powerful new chatbot that can communicate in plain English using an updated version of its AI system. While versions of GPT have been around for a while, this model has crossed a threshold: It’s genuinely useful for a wide range of tasks, from creating software to generating business ideas to writing a wedding toast. While previous generations of the system could technically do these things, the quality of the outputs was much lower than that produced by an average human. The new model is much better, often startlingly so.

Put simply: This is a very big deal.

We’re hitting a tipping point for artificial intelligence: With ChatGPT and other AI models that can communicate in plain English, write and revise text, and write code, the technology is suddenly becoming more useful to a broader population of people. This has huge implications. The ability to produce text and code on command means people are capable of producing more work, faster than ever before. Its ability to do different kinds of writing means it’s useful for many different kinds of businesses. Its capacity to respond to notes and revise its own work means there’s significant potential for hybrid human/AI work. Finally, we don’t yet know the limits of these models. All of this could mean sweeping changes for how — and what — work is done in the near future.

“ChatGPT Is a Tipping Point for AI,” by Ethan Mollick.

The ability for anyone to produce pretty good text and code on command will transform work.

My avatars were cartoonishly pornified, while my male colleagues got to be astronauts, explorers, and inventors.

When I tried the new viral AI avatar app Lensa, I was hoping to get results similar to some of my colleagues at MIT Technology Review. The digital retouching app was first launched in 2018 but has recently become wildly popular thanks to the addition of Magic Avatars, an AI-powered feature which generates digital portraits of people based on their selfies.

But while Lensa generated realistic yet flattering avatars for them—think astronauts, fierce warriors, and cool cover photos for electronic music albums— I got tons of nudes.

Year 2020

Scientists are working to end the need for human heart transplants by 2028. A team of researchers in the UK, Cambridge, and the Netherlands are developing a robot heart that can pump blood through the circulatory network but is soft and pliable. The first working model should be ready for implantation into animals within the next 3 years, and into humans within the next 8 years. The device is so promising that it is among just 4 projects shortlisted for a £30-million prize, the Big Beat Challenge, for a therapy that can change the game in the treatment of heart disease.

The other projects include a genetic therapy for heart defects, a vaccine against heart disease, and wearable technology for early preclinical detection of heart attacks and strokes.

The need

There are about 7 million patients with heart and circulatory issues in the UK, of whom more than 150,000 die every year. About 200 heart transplants occur each year in the UK alone, yet about 20 patients die in the same period while waiting for one. This is especially true if the patient waiting for one is a baby who was born with a defective heart, since babies need to have hearts transplanted from other babies – who must have died. And even with a successful transplant, strong immunosuppressive drugs must be started and often continued lifelong so that immune rejection does not occur. These drugs, however, carry a higher risk of infectious and other complications.

😗

Japan sold a fully functional robot suit that doesn’t just resemble a combat mech from a video game; it could actually fight like one too. With video games like “Armored Core,” mech fans already have a good idea of what giant fighting robots would be like in real life. One such fan is Kogoro Kurata, an artist who wasn’t satisfied with leaving that notion to the imagination. Kurata explained that driving these giant robots was his dream, saying it was something the “Japanese had to do” (via Reuters). Unlike some of the coolest modern robots available today, Kurata envisioned a full-sized mech he could actually enter and operate, something he accomplished in 2012.

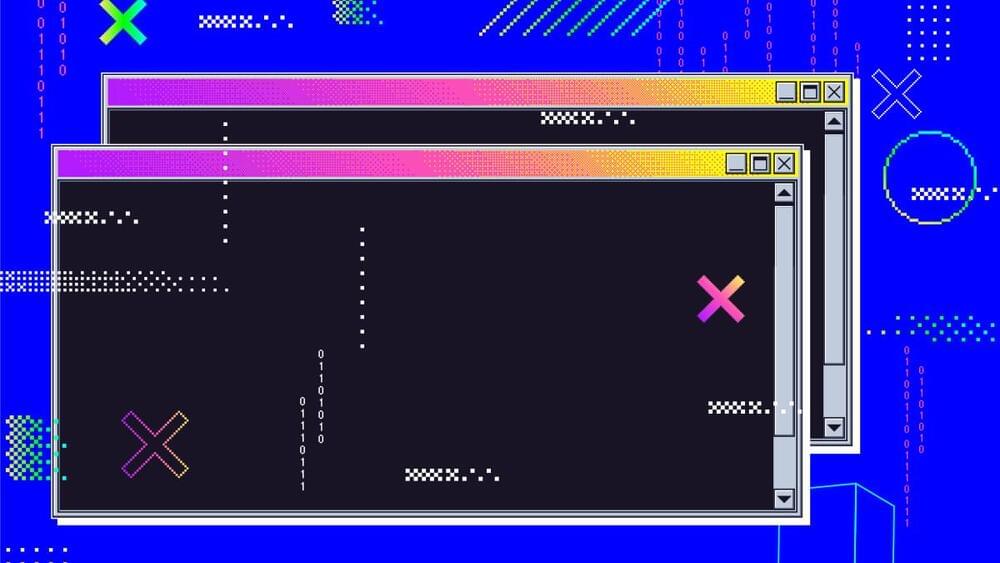

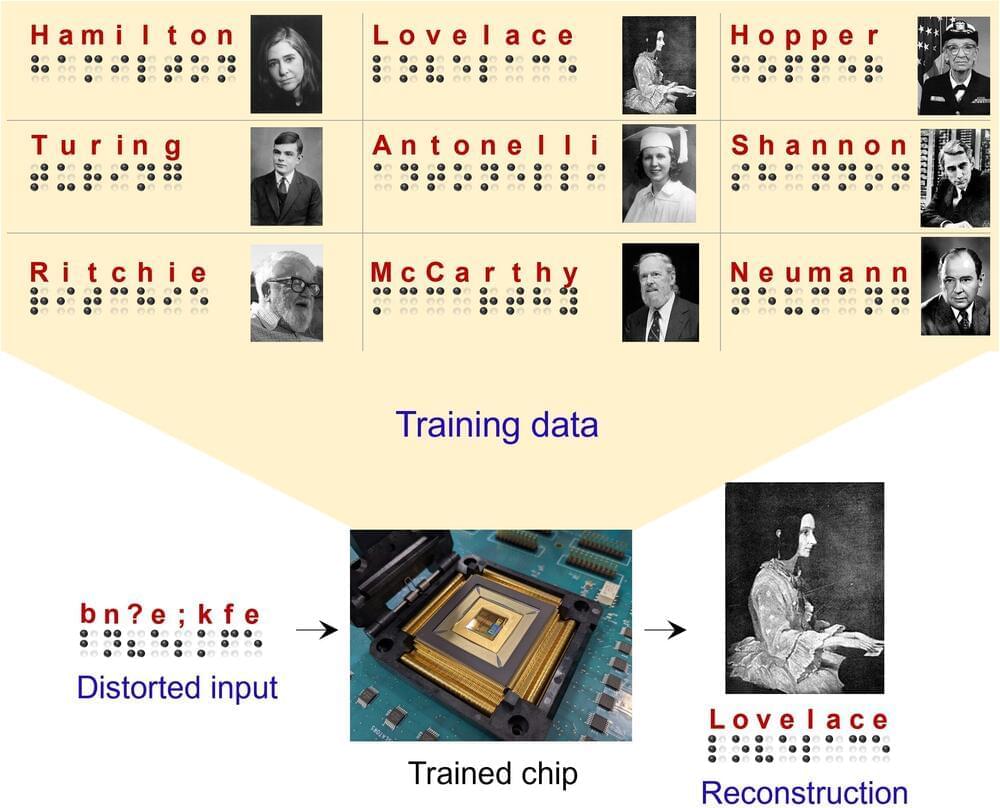

Deep-learning models have proven to be highly valuable tools for making predictions and solving real-world tasks that involve the analysis of data. Despite their advantages, before they are deployed in real software and devices such as cell phones, these models require extensive training in physical data centers, which can be both time- and energy-consuming.

Researchers at Texas A&M University, Rain Neuromorphics and Sandia National Laboratories have recently devised a new system for training deep learning models more efficiently and on a larger scale. This system, introduced in a paper published in Nature Electronics, relies on new training algorithms and memristor crossbar hardware that can carry out multiple operations at once.

“Most people associate AI with health monitoring in smart watches, face recognition in smart phones, etc., but most of AI, in terms of energy spent, entails the training of AI models to perform these tasks,” Suhas Kumar, the senior author of the study, told TechXplore.

Intel Labs and the Perelman School of Medicine at the University of Pennsylvania (Penn Medicine) have completed a joint research study using federated learning – a distributed machine learning (ML) artificial intelligence (AI) approach – to help international healthcare and research institutions identify malignant brain tumours.

The largest medical federated learning study to date with an unprecedented global dataset examined from 71 institutions across six continents, the project demonstrated the ability to improve brain tumour detection by 33%.

“Federated learning has tremendous potential across numerous domains, particularly within healthcare, as shown by our research with Penn Medicine,” says Jason Martin, principal engineer at Intel Labs. “Its ability to protect sensitive information and data opens the door for future studies and collaboration, especially in cases where datasets would otherwise be inaccessible.”