After six decades, we have finally reached controlled fusion “ignition.” Here is how it works and what it means (and doesn’t mean):

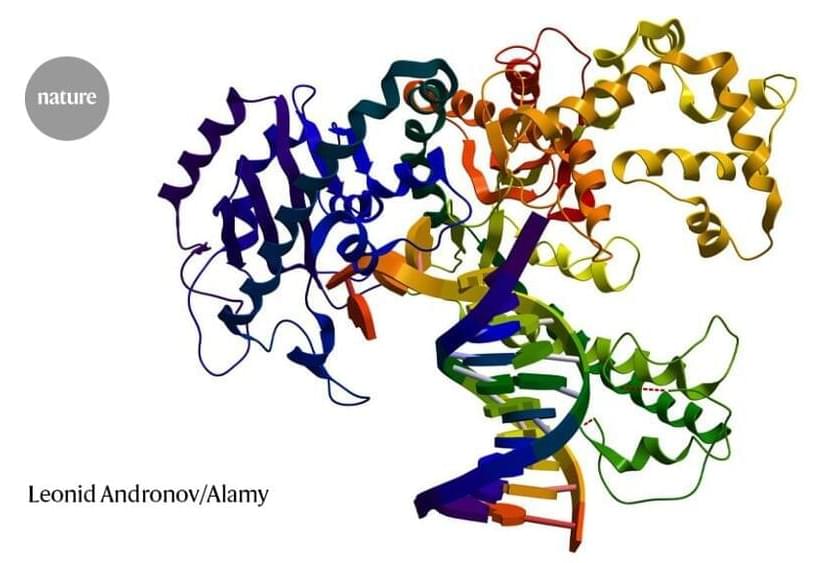

At Lawrence Livermore National Laboratory (LLNL), the National Ignition Facility (NIF) starts with the Injection Laser System (ILS), whose Master Oscillator is a ytterbium-doped optical fiber laser that produces a single, very low-power infrared beam at a wavelength of 1,053 nanometers. This single beam is split and routed to 48 Preamplifier Modules (PAMs), each of which produces four beams (192 in total). Each PAM performs a two-stage amplification using xenon flash lamps.
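The figures quoted above can be sanity-checked with a little arithmetic. The sketch below is only illustrative: the variable names are made up for this example, and the physical constants are standard SI values rather than anything taken from NIF documentation. It confirms the beam count and converts the 1,053 nm wavelength into a frequency and a per-photon energy.

```python
# Illustrative check of the numbers in the text; all names are hypothetical.
SPEED_OF_LIGHT_M_PER_S = 299_792_458      # exact SI value
PLANCK_J_S = 6.62607015e-34               # exact SI value
ELEMENTARY_CHARGE_C = 1.602176634e-19     # exact SI value, used for J -> eV

NUM_PAMS = 48             # Preamplifier Modules, from the text
BEAMS_PER_PAM = 4         # beams produced by each PAM, from the text
WAVELENGTH_M = 1053e-9    # 1,053 nm master-oscillator wavelength, from the text

# Total beam count: 48 modules times 4 beams each.
total_beams = NUM_PAMS * BEAMS_PER_PAM

# Frequency from wavelength: f = c / lambda.
frequency_hz = SPEED_OF_LIGHT_M_PER_S / WAVELENGTH_M

# Energy of one photon: E = h * f, converted from joules to electronvolts.
photon_energy_ev = PLANCK_J_S * frequency_hz / ELEMENTARY_CHARGE_C

print(f"Total beams: {total_beams}")
print(f"Frequency: {frequency_hz:.3e} Hz")
print(f"Photon energy: {photon_energy_ev:.3f} eV")
```

A photon energy of roughly 1.2 eV sits below the visible range (about 1.6 to 3.3 eV), which is consistent with the beam being described as infrared.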

Self-coding and self-updating AI algorithms appear to be on the horizon. There is talk of Pitchfork AI, reportedly a top-secret Google Labs project that can independently write and refactor code, and make use of both its own code and code written by other people.

This type of AI has been discussed for a long time. DeepMind mentioned it at the beginning of the year alongside its AlphaCode AI, which, according to them, can “code programs in competitive level,” roughly on par with a mid-level developer. Since February, however, there has been no further notable news.