From search engines to voice assistants, computers are getting better at understanding what we mean. That’s thanks to language-processing programs that make sense of a staggering number of words, without ever being told explicitly what those words mean. Such programs infer meaning instead through statistics—and a new study reveals that this computational approach can assign many kinds of information to a single word, just like the human brain.

The study, published April 14 in the journal Nature Human Behaviour, was co-led by Gabriel Grand, a graduate student in electrical engineering and computer science who is affiliated with MIT’s Computer Science and Artificial Intelligence Laboratory, and Idan Blank Ph.D. ’16, an assistant professor at the University of California at Los Angeles. The work was supervised by McGovern Institute for Brain Research investigator Ev Fedorenko, a cognitive neuroscientist who studies how the human brain uses and understands language, and Francisco Pereira at the National Institute of Mental Health. Fedorenko says the rich knowledge her team was able to find within computational language models demonstrates just how much can be learned about the world through language alone.

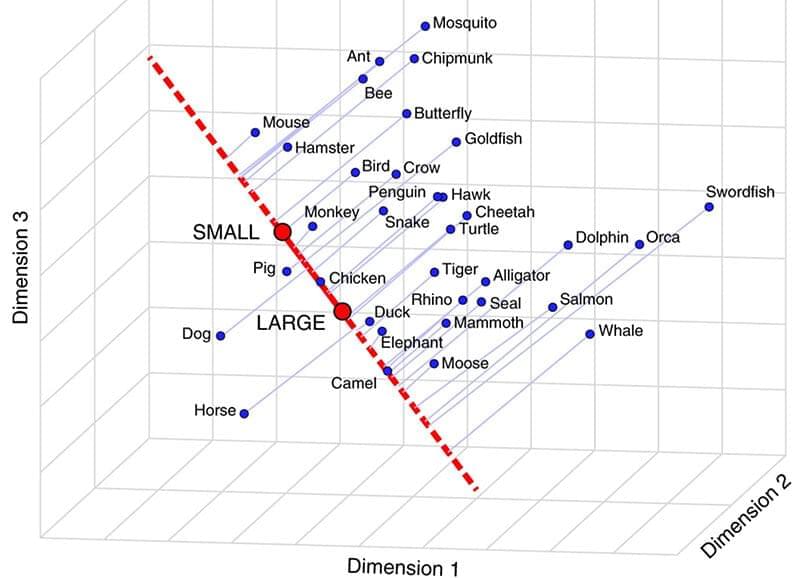

The research team began its analysis of statistics-based language processing models in 2015, when the approach was new. Such models derive meaning by analyzing how often pairs of words co-occur in texts and using those relationships to assess the similarities of words’ meanings. For example, such a program might conclude that “bread” and “apple” are more similar to one another than they are to “notebook,” because “bread” and “apple” are often found in proximity to words like “eat” or “snack,” whereas “notebook” is not.
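
To make the co-occurrence idea concrete, here is a minimal sketch in Python. It is not the model used in the study: the six-sentence corpus is invented for illustration, and the helper names (`cooccurrence_vectors`, `cosine`) and the two-word context window are assumptions chosen to keep the example small. Real systems learn from vastly more text and typically compress the counts into dense vectors.

```python
from collections import Counter
from math import sqrt

# Toy corpus, invented for illustration only (not data from the study).
corpus = [
    "i eat bread for a snack",
    "i eat an apple for a snack",
    "she likes to eat bread",
    "he likes to eat an apple",
    "i write in my notebook",
    "she keeps a notebook on her desk",
]


def cooccurrence_vectors(sentences, window=2):
    """Map each word to counts of the words seen within `window` positions of it."""
    vectors = {}
    for sentence in sentences:
        tokens = sentence.split()
        for i, word in enumerate(tokens):
            lo = max(0, i - window)
            hi = min(len(tokens), i + window + 1)
            context = tokens[lo:i] + tokens[i + 1:hi]
            vectors.setdefault(word, Counter()).update(context)
    return vectors


def cosine(u, v):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(u[k] * v[k] for k in u if k in v)
    norm = sqrt(sum(x * x for x in u.values())) * sqrt(sum(x * x for x in v.values()))
    return dot / norm if norm else 0.0


vectors = cooccurrence_vectors(corpus)
sim_bread_apple = cosine(vectors["bread"], vectors["apple"])
sim_bread_notebook = cosine(vectors["bread"], vectors["notebook"])
sim_apple_notebook = cosine(vectors["apple"], vectors["notebook"])

print(f"bread vs apple:    {sim_bread_apple:.2f}")
print(f"bread vs notebook: {sim_bread_notebook:.2f}")
print(f"apple vs notebook: {sim_apple_notebook:.2f}")
```

On this toy corpus, “bread” and “apple” come out far more similar to each other than either is to “notebook,” because both share neighbors such as “eat,” while “notebook” shares almost none of them.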