Support us! https://www.patreon.com/mlst

Irina Rish is a world-renowned professor of computer science and operations research at the Université de Montréal and a core member of the prestigious Mila organisation. She is a Canada CIFAR AI Chair and the Canada Excellence Research Chair in Autonomous AI. Irina holds an MSc and PhD in AI from the University of California, Irvine, as well as an MSc in Applied Mathematics from the Moscow Gubkin Institute. Her research focuses on machine learning, neural data analysis, and neuroscience-inspired AI. In particular, she is exploring continual lifelong learning, optimization algorithms for deep neural networks, sparse modelling and probabilistic inference, dialog generation, biologically plausible reinforcement learning, and dynamical systems approaches to brain imaging analysis. Prof. Rish holds 64 patents and has published over 80 research papers, several book chapters, three edited books, and a monograph on Sparse Modelling. She has served as a Senior Area Chair for NeurIPS and ICML. Irina’s research is focussed on taking us closer to the holy grail of Artificial General Intelligence, and she continues to push the boundaries of machine learning and neuroscience-inspired AI.

In a conversation about artificial intelligence (AI), Irina and Tim discussed the idea of transhumanism and the potential for AI to improve human flourishing. Irina suggested that instead of looking at AI as something to be controlled and regulated, people should view it as a tool to augment human capabilities. She argued that attempting to create an AI that is smarter than humans is not the best approach, and that a hybrid of human and artificial intelligence is much more beneficial. As an example, she mentioned how technology can be used as an extension of the human mind, to track mental states and improve self-understanding. Ultimately, Irina concluded that transhumanism is about having a symbiotic relationship with technology, one that can benefit both parties.

Tim then discussed how human and machine intelligence contrast, and how something interesting could emerge from combining them. He brought up the Trolley Problem and how difficult moral quandaries such as this might be programmed into an AI. Irina then referenced The Garden of Forking Paths, the Jorge Luis Borges short story that explores how the different paths a life can take, and the decisions made in the past, shape the present.

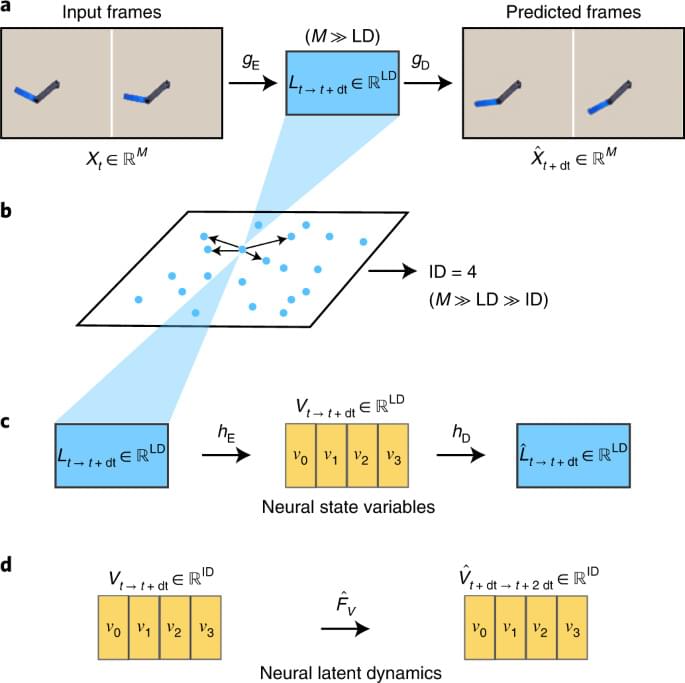

To better understand AI and intelligence, Irina suggested looking at them from multiple perspectives and stressed the importance of complex systems science for understanding and programming dynamical systems. She discussed the work of Michael Levin, who is looking into reprogramming biological computers with chemical interventions, and Tim mentioned Alexander Mordvintsev, who is looking into self-healing and repair in such systems. Ultimately, Irina argued that the key to understanding AI and intelligence is to recognize the complexity of the systems involved and to create hybrid models of human and artificial intelligence.

Find Irina: