BlackRock’s Kevin Franklin explains how investors get comfortable with applying these tools to money management.

For all Morningstar videos: http://www.morningstar.com/articles/archive/467/us-videos.html

How Humanity Could be Transformed through Technology | Technology Documentary.

Watch ‘How Biotechnology Is Changing the World’ here: https://youtu.be/lFcF4DsuC9A

With Augmented Humanity we will travel from the US to Japan, into the heart of the secret labs of the most borderline scientists in the world, who try to push the boundaries of life through technology. Robotics is an important step, but the future of our species does not lie in a massive substitution by robots; on the contrary, robotics and technology must be used to improve the human being.

Subscribe ENDEVR for free: https://bit.ly/3e9YRRG

Facebook: https://bit.ly/2QfRxbG

Instagram: https://www.instagram.com/endevrdocs/

▬▬▬▬▬▬▬▬▬

#FreeDocumentary #ENDEVR #Cyborg.

▬▬▬▬▬▬▬▬▬

ENDEVR explains the world we live in through high-class documentaries, special investigations, explainer videos and animations. We cover topics related to business, economics, geopolitics, social issues and everything in between that we think is interesting.

Transhuman brains are the melding of hyper-advanced electronics and super-artificial intelligence (AI) with neurobiological tissue. The goal is not only to repair injury and mitigate disease, but also to enhance brain capacity and boost mental function. What is the big vision, the end goal — how far can transhuman brains go? What does it mean for individual consciousness and personal identity? Is virtual immortality possible? What are the ethics, the morality, of transhuman brains? What are the dangers?

Free access to Closer to Truth’s library of 5,000 videos: http://bit.ly/376lkKN

Support the show with Closer To Truth merchandise: https://bit.ly/3P2ogje.

Watch more interviews on transhuman brains: https://bit.ly/3Wb7yRm.

Max Tegmark is Professor of Physics at the Massachusetts Institute of Technology. He holds a BS in Physics and a BA in Economics from the Royal Institute of Technology in Sweden. He also earned an MA and a PhD in physics from the University of California, Berkeley.

Register for free at CTT.com for subscriber-only exclusives: http://bit.ly/2GXmFsP

I think communication with AI and with each other will also be wireless, so discoveries like this are important.

CityU

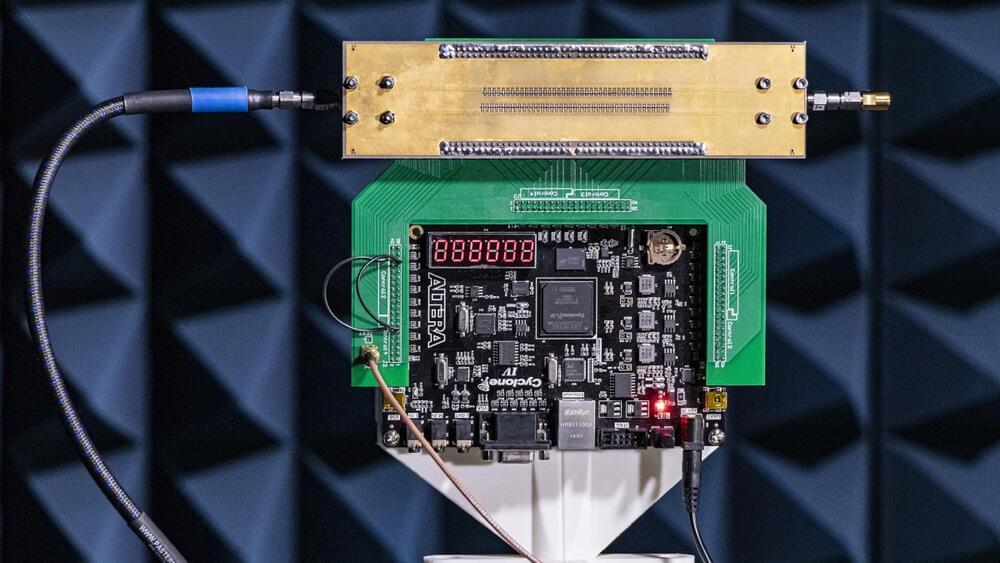

A research team led by a scientist at CityU has developed an innovative, game-changing antenna. The invention allows unprecedented control of the direction, frequency, and intensity of its signal beam. The antenna is also valuable for 6G wireless communications applications such as integrated sensing and communications (ISAC).

A team of about 30 teenage robotics enthusiasts in Ra’anana recently unveiled a 3D-printed kit that transforms a standard $500 wheelchair into the equivalent of a $2,500 electronic wheelchair with enhanced maneuverability and powerful braking.

The add-on doesn’t interfere with the chair’s folding mechanism and is easily removed, so it can be attached to a rented wheelchair that must be returned in its original condition.

2023: amateur movies, TV, books, & games.

2030: all above, and more, at professional level quality.

There’s a new Knives Out movie on Netflix, and I still haven’t seen a few of this season’s awards contenders. But the film I most wish I could watch right now is Squid Invasion From the Deep. It’s a sci-fi thriller directed by John Carpenter about a team of scientists led by Sigourney Weaver who discover an extraterrestrial cephalopod and then die one by one at its tentacles. The production design was inspired by Alien and The Thing; there are handmade creature FX and lots of gore; Wilford Brimley has a cameo. Unfortunately, though, I can’t see this movie, and neither can you, because it doesn’t exist.

For now, Squid Invasion is just a portfolio of concept art conjured by a redditor using Midjourney, an artificial-intelligence tool that creates images from human-supplied text prompts.

Check out all the on-demand sessions from the Intelligent Security Summit here.

It’s as good a time as any to discuss the implications of advances in artificial intelligence (AI). 2022 saw interesting progress in deep learning, especially in generative models. However, as the capabilities of deep learning models increase, so does the confusion surrounding them.

On the one hand, advanced models such as ChatGPT and DALL-E are displaying fascinating results and the impression of thinking and reasoning. On the other hand, they often make errors that prove they lack some of the basic elements of intelligence that humans have.

Commercial Purposes ► [email protected].

–

The rapid technological advancement that humanity has seen in the last 100 years has been the story of the century. Thanks to this advancement, the idea that humanity is approaching a “singularity” has moved from the realm of science fiction to a subject of serious scientific debate. Some people believe that AI will take over the world soon. In fact, some experts predict that this technological singularity will happen within the next 30 years. The idea that artificial intelligence will take over the world and humans will no longer be in charge is scary and sets the stage for serious debate. Today we are on the brink of a technology-driven change that will fundamentally disrupt every human affair and the entire human ecosystem as it exists today. So what is the technological singularity? And how will it change our very own reality? Let’s find out.

-

“If you happen to see any content that is yours, and we didn’t give credit in the right manner, please let us know at [email protected] and we will correct it immediately.”

“Some of our visual content is under an Attribution-ShareAlike license (https://creativecommons.org/licenses/) in its different versions, such as 1.0, 2.0, 3.0, and 4.0, permitting commercial sharing with attribution given for each picture accordingly in the video.”

Credits: Ron Miller, Mark A. Garlick / MarkGarlick.com.

Credits: NASA/Shutterstock/Storyblocks/Elon Musk/SpaceX/ESA/ESO/ Flickr.

00:00 Intro.

2:54 What is a singularity?

7:08 What happens to human intelligence?

8:10 Elon Musk: Neuralink.

8:56 When will the technological singularity occur?

#insanecuriosity #technologicalsingularity #ai

The Royal Meteorological Society, which runs the Weather Photographer of the Year competition, has posed an intriguing question: Can artificial intelligence (AI) win a photography competition?

To answer this, the Society drew up a Turing test in which the viewer is invited to guess which is an AI image and which is an actual award-winning photo.

The Turing test, proposed by Alan Turing in 1950, is a test of a machine’s ability to exhibit intelligent behavior indistinguishable from that of a human.