Nearly three years into the pandemic, travel has returned but hotel staff have not. Unable to find workers, hotel owners and managers are having to adapt to what they believe is the new normal.

Over the last half-decade, quantum computing has attracted tremendous media attention. Why?

After all, we have computers already, which have been around since the 1940s. Is the interest because of the use cases? Better AI? Faster and more accurate pricing for financial services firms and hedge funds? Better medicines once quantum computers get a thousand times bigger?

The metaverse is becoming one of the hottest topics not only in technology but in the social and economic spheres. Tech giants and startups alike are already working on creating services for this new digital reality.

The metaverse is slowly evolving into a mainstream virtual world where you can work, learn, shop, be entertained and interact with others in ways never before possible. Gartner recently listed the metaverse as one of the top strategic technology trends for 2023, and predicts that by 2026, 25% of the population will spend at least one hour a day there for work, shopping, education, social activities and/or entertainment. That means organizations that use the metaverse effectively will be able to engage with both human and machine customers and create new revenue streams and markets.

Researchers tested GPT-3.5 with questions from the US Bar Exam. They predict that GPT-4 and comparable models might be able to pass the exam very soon.

In the U.S., almost all jurisdictions require a professional license exam known as the Bar Exam. By passing this exam, lawyers are admitted to the bar of a U.S. state.

In most cases, applicants must complete at least seven years of post-secondary education, including three years at an accredited law school.

Benjamin Franklin stated, “If you would not be forgotten as soon as you are dead and rotten, either write things worth reading, or do things worth the writing.”

The late Patrick Winston, longtime director of MIT’s Artificial Intelligence Laboratory, expanded upon this adage, saying, “Your success in life will be determined largely by your ability to speak, your ability to write, and the quality of your ideas. In that order.”

We are at a precarious point in human development, with the positive and negative impact of technology surrounding us as individuals and as a society. Technology has helped improve our living standards, extended our lives, cured diseases, fed our growing populations, and expanded our frontiers. But it has also helped create greater economic and digital divides, increased pollution and harm to our environment, and potentially endangered the intellectual development of our human population.

Year 2022

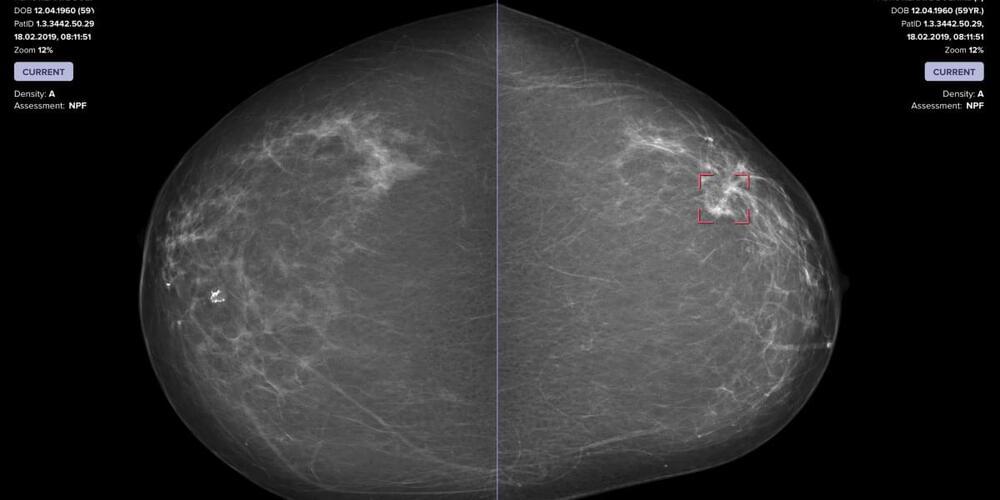

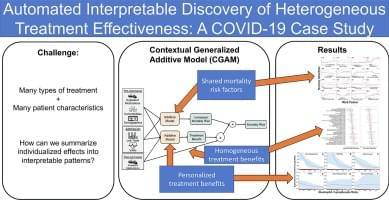

Testing multiple treatments for heterogeneous (varying) effectiveness with respect to many underlying risk factors requires many pairwise tests; we would like to instead automatically discover and visualize patient archetypes and predictors of treatment effectiveness using multitask machine learning. In this paper, we present a method to estimate these heterogeneous treatment effects with an interpretable hierarchical framework that uses additive models to visualize expected treatment benefits as a function of patient factors (identifying personalized treatment benefits) and concurrent treatments (identifying combinatorial treatment benefits). This method achieves state-of-the-art predictive power for COVID-19 in-hospital mortality and interpretable identification of heterogeneous treatment benefits. We first validate this method on the large public MIMIC-IV dataset of ICU patients to test recovery of heterogeneous treatment effects. Next we apply this method to a proprietary dataset of over 3,000 patients hospitalized for COVID-19, and find evidence of heterogeneous treatment effectiveness predicted largely by indicators of inflammation and thrombosis risk: patients with few indicators of thrombosis risk benefit most from treatments against inflammation, while patients with few indicators of inflammation risk benefit most from treatments against thrombosis. This approach provides an automated methodology to discover heterogeneous and individualized effectiveness of treatments.

Automation is not just for high-throughput screening anymore. New devices and greater flexibility are transforming what’s possible throughout drug discovery and development. This article was written by Thomas Albanetti, AstraZeneca; Ryan Bernhardt, Biosero; Andrew Smith, AstraZeneca; and Kevin Stewart, AstraZeneca, for a 28-page DDW eBook sponsored by Bio-Rad.

Automation has been a part of the drug discovery industry for decades. The earliest iterations of these systems were used in large pharmaceutical companies for high-throughput screening (HTS) experiments. HTS enabled the testing of libraries of small molecule compounds under a single experimental condition or a series of conditions to identify the potential of those compounds as a treatment for a target disease. HTS has evolved to enable screening libraries of millions of compounds, but the high cost of equipment has largely confined automation to large pharmaceutical companies. Today, though, new types of robots paired with sophisticated software tools have helped to democratise access to automation, making it possible for pharma and biotechnology companies of almost any size to deploy these solutions in their labs.

Originally, automated solutions were only implemented for projects that involved a lot of repetitive tasks, which is typical of high-throughput experiments and assays. The equipment used in early automation efforts was expensive, specialised, and physically integrated, effectively making it unavailable for any non-automated use. Now, both the approaches and the equipment are far more adaptive and flexible. The latest automation software is also much simpler to program, making it easier to swap in different instruments and robots as needed. For example, labs can run a particular HTS assay for a few weeks and then quickly pivot to run a new assay. Labs can also create and run bespoke standard operating procedures, assays, and experiments for drug targets they are interested in pursuing.

Support us! https://www.patreon.com/mlst.

Irina Rish is a world-renowned professor of computer science and operations research at the Université de Montréal and a core member of the prestigious Mila organisation. She is a Canada CIFAR AI Chair and the Canadian Excellence Research Chair in Autonomous AI. Irina holds an MSc and PhD in AI from the University of California, Irvine as well as an MSc in Applied Mathematics from the Moscow Gubkin Institute. Her research focuses on machine learning, neural data analysis, and neuroscience-inspired AI. In particular, she is exploring continual lifelong learning, optimization algorithms for deep neural networks, sparse modelling and probabilistic inference, dialog generation, biologically plausible reinforcement learning, and dynamical systems approaches to brain imaging analysis. Prof. Rish holds 64 patents, has published over 80 research papers, several book chapters, three edited books, and a monograph on Sparse Modelling. She has served as a Senior Area Chair for NeurIPS and ICML. Irina’s research is focussed on taking us closer to the holy grail of Artificial General Intelligence. She continues to push the boundaries of machine learning, continually striving to make advancements in neuroscience-inspired AI.

In a conversation about artificial intelligence (AI), Irina and Tim discussed the idea of transhumanism and the potential for AI to improve human flourishing. Irina suggested that instead of looking at AI as something to be controlled and regulated, people should view it as a tool to augment human capabilities. She argued that attempting to create an AI that is smarter than humans is not the best approach, and that a hybrid of human and AI intelligence is much more beneficial. As an example, she mentioned how technology can be used as an extension of the human mind, to track mental states and improve self-understanding. Ultimately, Irina concluded that transhumanism is about having a symbiotic relationship with technology, which can have a positive effect on both parties.

Tim then discussed the contrasting types of intelligence and how this could lead to something interesting emerging from the combination. He brought up the Trolley Problem and how difficult moral quandaries could be programmed into an AI. Irina then referenced The Garden of Forking Paths, a story which explores the idea of how different paths in life can be taken and how decisions from the past can have an effect on the present.

To better understand AI and intelligence, Irina suggested looking at it from multiple perspectives and understanding the importance of complex systems science in programming and understanding dynamical systems. She discussed the work of Michael Levin, who is looking into reprogramming biological computers with chemical interventions, and Tim mentioned Alex Mordvintsev, who is looking into the self-healing and repair of these systems. Ultimately, Irina argued that the key to understanding AI and intelligence is to recognize the complexity of the systems and to create hybrid models of human and AI intelligence.

Find Irina;