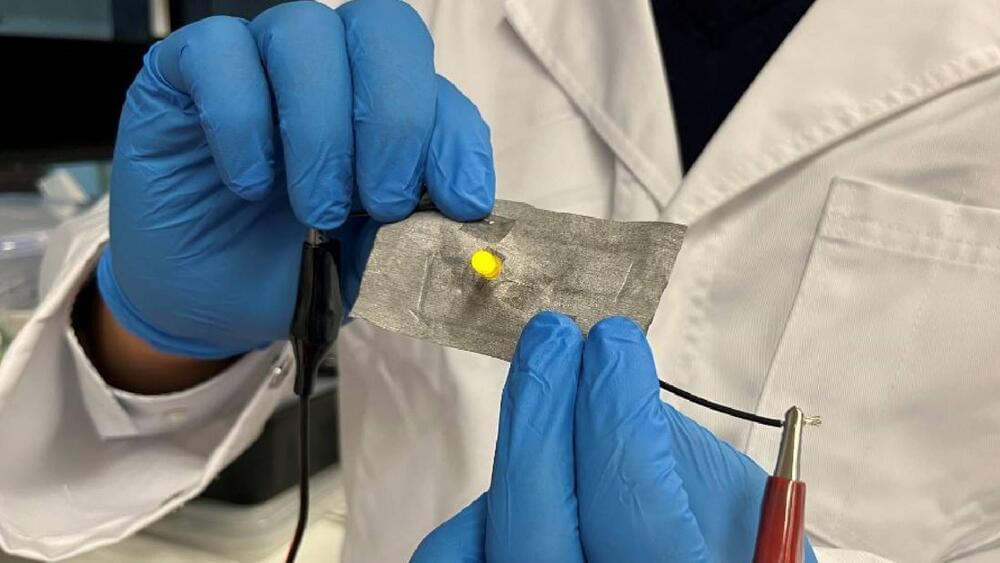

Scientists have invented a simple metallic coating treatment for clothing and other wearable textiles that can repair itself, repel bacteria, and even monitor a person’s heart through electrocardiogram (ECG) signals.

This is according to a Flinders University press release published last month.

The inventors of the new coating say that the conductive circuits formed by liquid metal (LM) particles could transform wearable electronics, because these ‘breathable’ electronic textiles can ‘autonomously heal’ their electrical connections even after being cut.