Researchers in the US have developed a machine learning algorithm that accurately reconstructs the s.

May 22 (Reuters) — ChatGPT’s creator OpenAI is testing how to gather broad input on decisions impacting its artificial intelligence, its president Greg Brockman said on Monday.

At AI Forward, an event in San Francisco hosted by Goldman Sachs Group Inc (GS.N) and SV Angel, Brockman discussed the broad contours of how the maker of the wildly popular chatbot is seeking regulation of AI globally.

One announcement he previewed is akin to the model of Wikipedia, which he said requires people with diverse views to coalesce and agree on the encyclopedia’s entries.

While much of what Aligned AI is doing is proprietary, Gorman says that at its core Aligned AI is working on how to give generative A.I. systems a much more robust understanding of concepts, an area where these systems continue to lag humans, often by a significant margin. “In some ways [large language models] do seem to have a lot of things that seem like human concepts, but they are also very fragile,” Gorman says. “So it’s very easy, whenever someone brings out a new chatbot, to trick it into doing things it’s not supposed to do.” Gorman says that Aligned AI’s intuition is that methods that make chatbots less likely to generate toxic content will also be helpful in making sure that future A.I. systems don’t harm people in other ways. The idea that work on “the alignment problem” (the question of how we align A.I. with human values so it doesn’t kill us all, and from which Aligned AI takes its name) could also help address dangers from A.I. that are here today, such as chatbots that produce toxic content, is controversial. Many A.I. ethicists see talk of “the alignment problem,” which is what people who say they work on “A.I. Safety” often say is their focus, as a distraction from the important work of addressing present dangers from A.I.

But Aligned AI’s work is a good demonstration of how the same research methods can help address both risks. Giving A.I. systems a more robust conceptual understanding is something we all should want. A system that understands the concept of racism or self-harm can be better trained not to generate toxic dialogue; a system that understands the concept of avoiding harm and the value of human life would hopefully be less likely to kill everyone on the planet.

Aligned AI and Xayn are also good examples of the many promising ideas being produced by smaller companies in the A.I. ecosystem. OpenAI, Microsoft, and Google, while clearly the biggest players in the space, may not have the best technology for every use case.


According to Google, Meta and a number of other platforms, generative AI tools are the basis of the next era in creative testing and performance. Meta bills its Advantage+ campaigns as a way to “use AI to eliminate the manual steps of ad creation.”

Provide a platform with all of your assets, from website to logos, product images to colors, and it can make new creatives, test them and dramatically improve results.

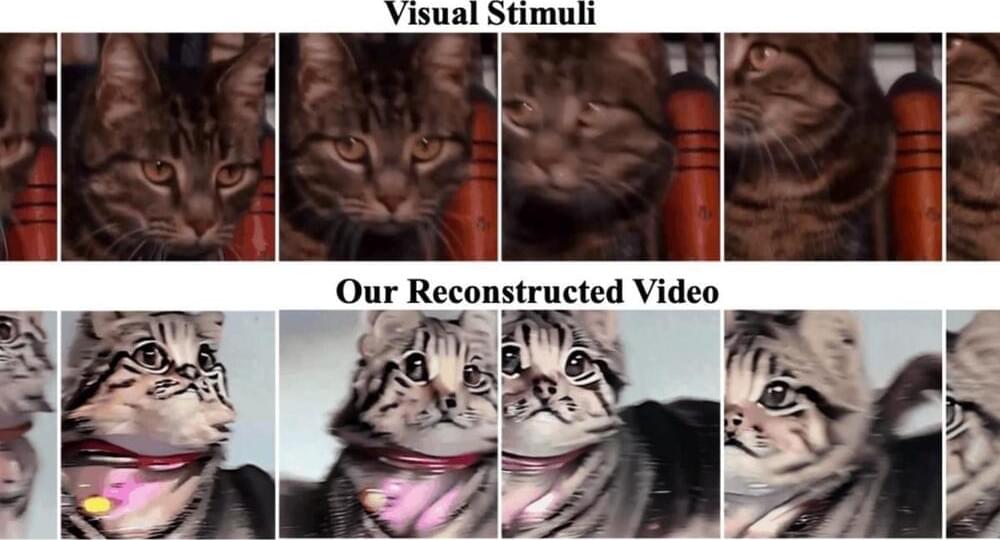

Researchers have used generative AI to reconstruct “high-quality” video from brain activity, a new study reports.

Researchers Jiaxin Qing, Zijiao Chen, and Juan Helen Zhou from the National University of Singapore and The Chinese University of Hong Kong used fMRI data and the text-to-image AI model Stable Diffusion to create a model called MinD-Video that generates video from the brain readings. Their paper describing the work was posted to the arXiv preprint server last week.

Their demonstration on the paper’s corresponding website shows a parallel between videos that were shown to subjects and the AI-generated videos created based on their brain activity. The differences between the two videos are slight; for the most part, they contain similar subjects and color palettes.

Robots are helping humans in a growing number of places – from archaeological sites to disaster zones and sewers. Here are nine of the most interesting robots.

Believe it or not, one of the most important technology announcements of the past few months had nothing to do with artificial intelligence. While critics and boosters continue to stir and fret over the latest capabilities of ChatGPT, a largely unknown 60-person start-up, based out of Tel Aviv, quietly began demoing a product that might foretell an equally impactful economic disruption.

The company is named Sightful and their new offering is Spacetop: “the world’s first augmented reality laptop.” Spacetop consists of a standard computer keyboard tethered to a pair of goggles, styled like an unusually chunky pair of sport sunglasses. When you put on the goggles, the Spacetop technology inserts multiple large virtual computer screens into your visual field, floating above the keyboard as if you were using a computer connected to large external monitors.

As opposed to virtual reality technology, which places you into an entirely artificial setting, Spacetop is an example of augmented reality (AR), which places virtual elements into the real world. The goggles are transparent: when you put them on at your table in Starbucks you still see the coffee shop all around you. The difference is now there are also virtual computer screens floating above your macchiato.

This new AI gold rush will bring new jobs even for psychiatrists and therapists, and it is already leading to new bots that act as human-like therapists over text. This could lead to even better mental health for the global population.

“Psychotherapy is very expensive and even in places like Canada, where I’m from, and other countries, it’s super expensive, the waiting lists are really long,” Ashley Andreou, a medical student focusing on psychiatry at Georgetown University, told Al Jazeera.

“People don’t have access to something that augments medication and is evidence-based treatment for mental health issues, and so I think that we need to increase access, and I do think that generative AI with a certified health professional will increase efficiency.”

The prospect of AI augmenting, or even leading, mental health treatment raises a myriad of ethical and practical concerns. These range from how to protect personal information and medical records, to questions about whether a computer programme will ever be truly capable of empathising with a patient or recognising warning signs such as the risk of self-harm.