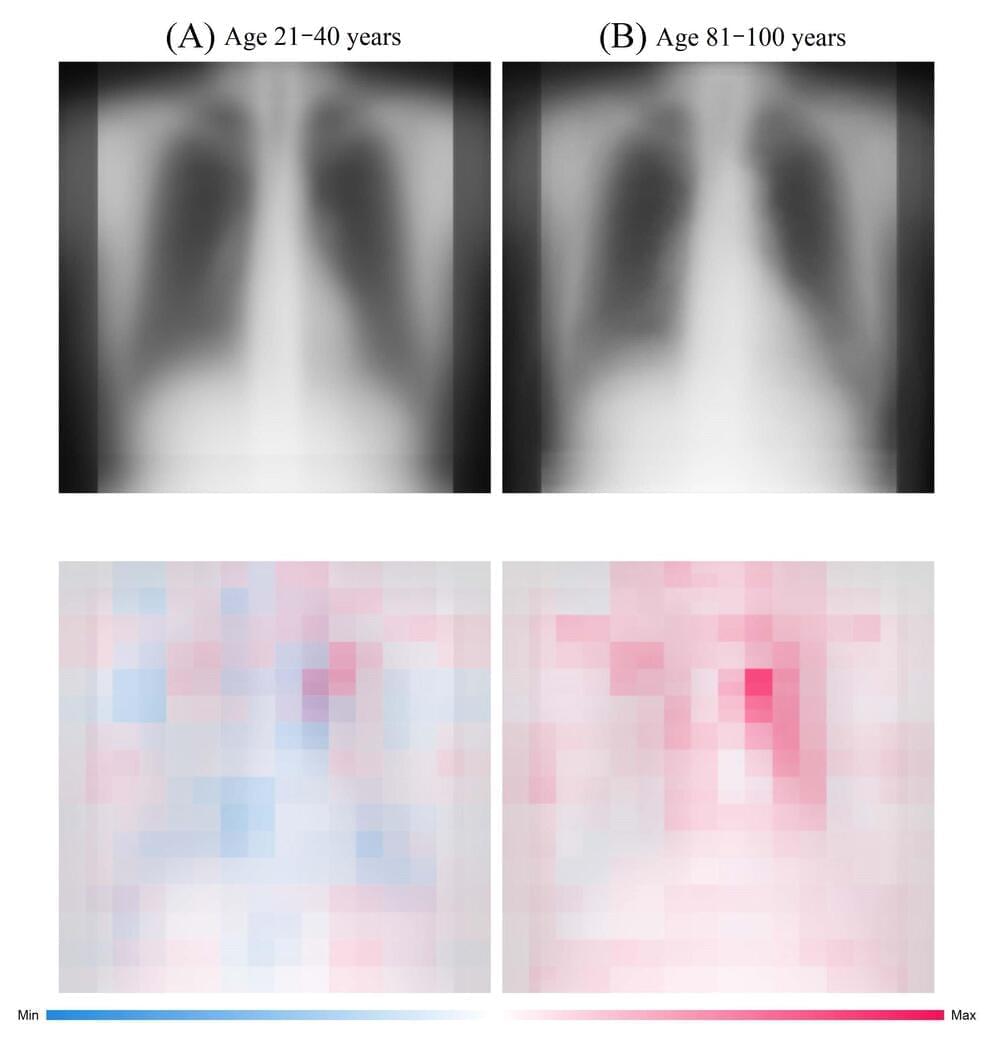

What if “looking your age” refers not to your face, but to your chest? Scientists at Osaka Metropolitan University have developed an artificial intelligence (AI) model that uses chest radiographs to accurately estimate a patient’s chronological age. More importantly, when the AI-estimated age differs from the patient’s actual age, the gap can signal a correlation with chronic disease.

These findings mark a leap in medical imaging, paving the way for improved early disease detection and intervention. The results are published in The Lancet Healthy Longevity.

The research team, led by graduate student Yasuhito Mitsuyama and Dr. Daiju Ueda from the Department of Diagnostic and Interventional Radiology at the Graduate School of Medicine, Osaka Metropolitan University, first constructed a deep learning-based AI model to estimate age from chest radiographs of healthy individuals.