Technological advancements like autonomous driving and computer vision are fueling a surge in demand for computational power. Optical computing, with its high throughput, energy efficiency, and low latency, has garnered considerable attention from academia and industry. However, current optical computing chips face limitations in power consumption and size, which hinder the scalability of optical computing networks.

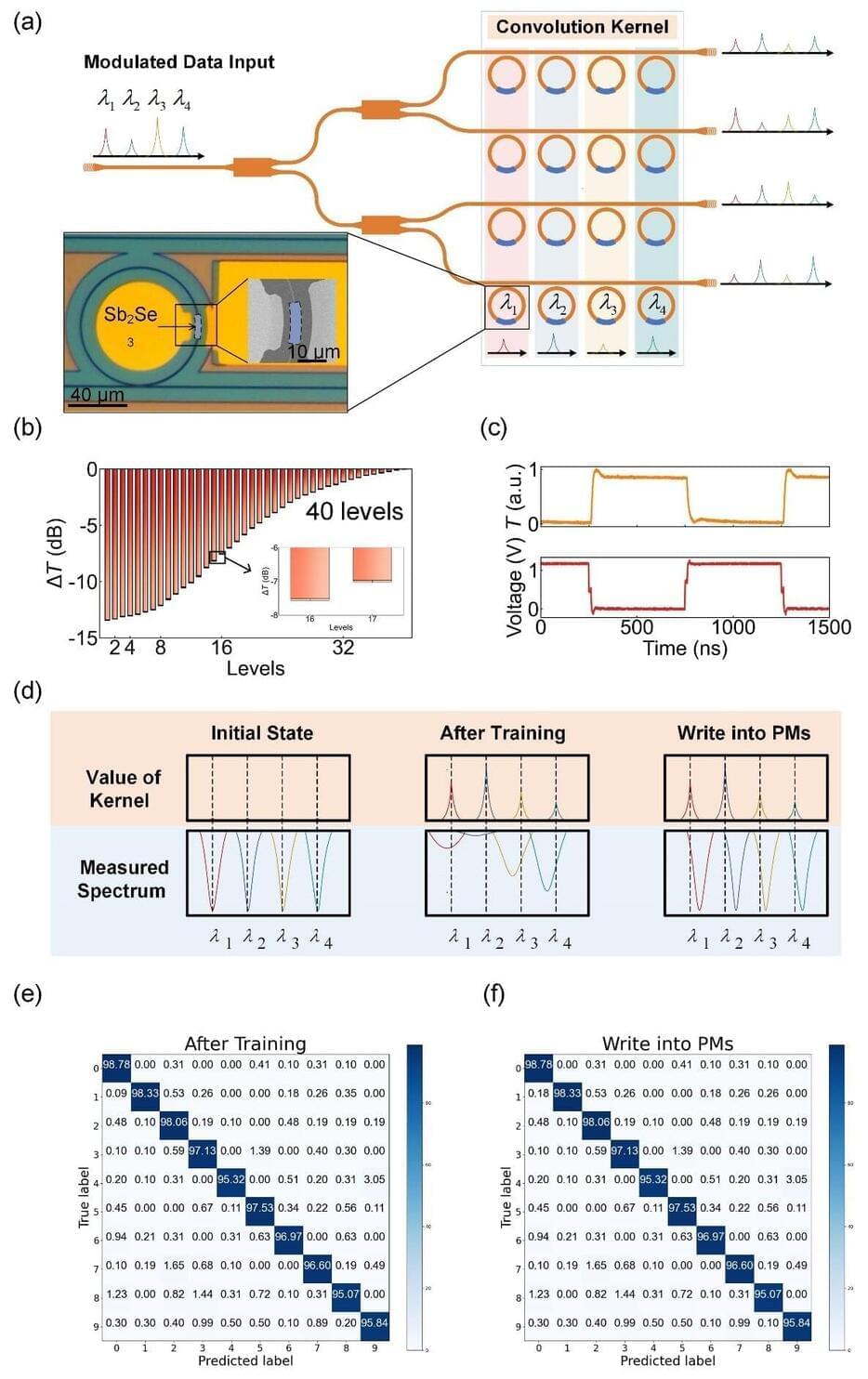

Thanks to the rise of nonvolatile integrated photonics, optical computing devices can achieve in-memory computing while operating with zero static power consumption. Phase-change materials (PCMs) have emerged as promising candidates for photonic memory and nonvolatile neuromorphic photonic chips. PCMs offer a high refractive-index contrast between their different states and reversible transitions between them, making them ideal for large-scale nonvolatile optical computing chips.

Nonvolatile integrated optical computing chips are promising, but they come with their share of challenges. Online training requires frequent and rapid switching, and meeting that requirement remains a hurdle that researchers are working to overcome. Achieving quick and efficient training is a vital step toward realizing the full potential of photonic computing chips.