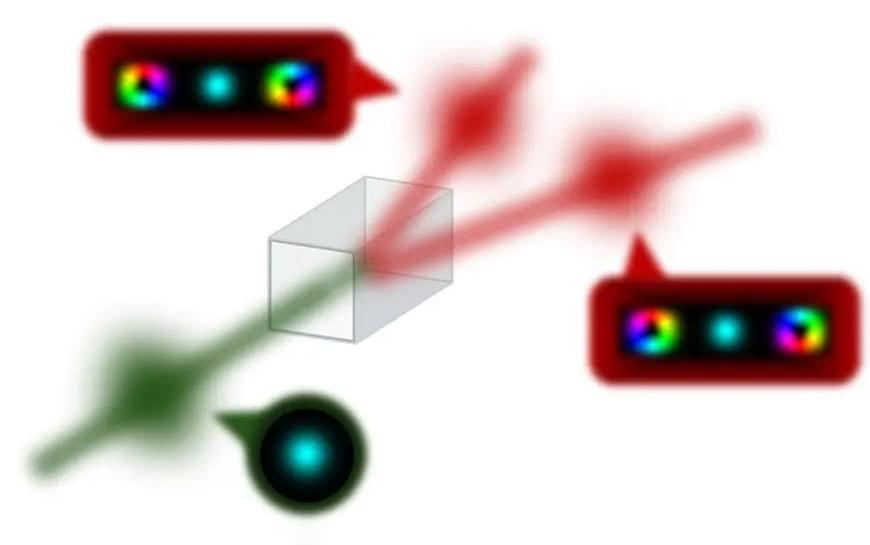

Researchers at Tampere University and their collaborators from Germany and India have experimentally confirmed that angular momentum is conserved when a single photon is converted into a pair – validating a key principle of physics at the quantum level for the first time. This breakthrough opens new possibilities for creating complex quantum states useful in computing, communication, and sensing.

Conservation laws are at the heart of our scientific understanding of nature, as they govern which processes are allowed and which are forbidden. A simple example is that of colliding billiard balls, where the motion, and with it the linear momentum, is transferred from one ball to another. A similar conservation rule exists for rotating objects, which have angular momentum. Interestingly, light can also carry angular momentum, for example orbital angular momentum (OAM), which is connected to the light’s spatial structure.

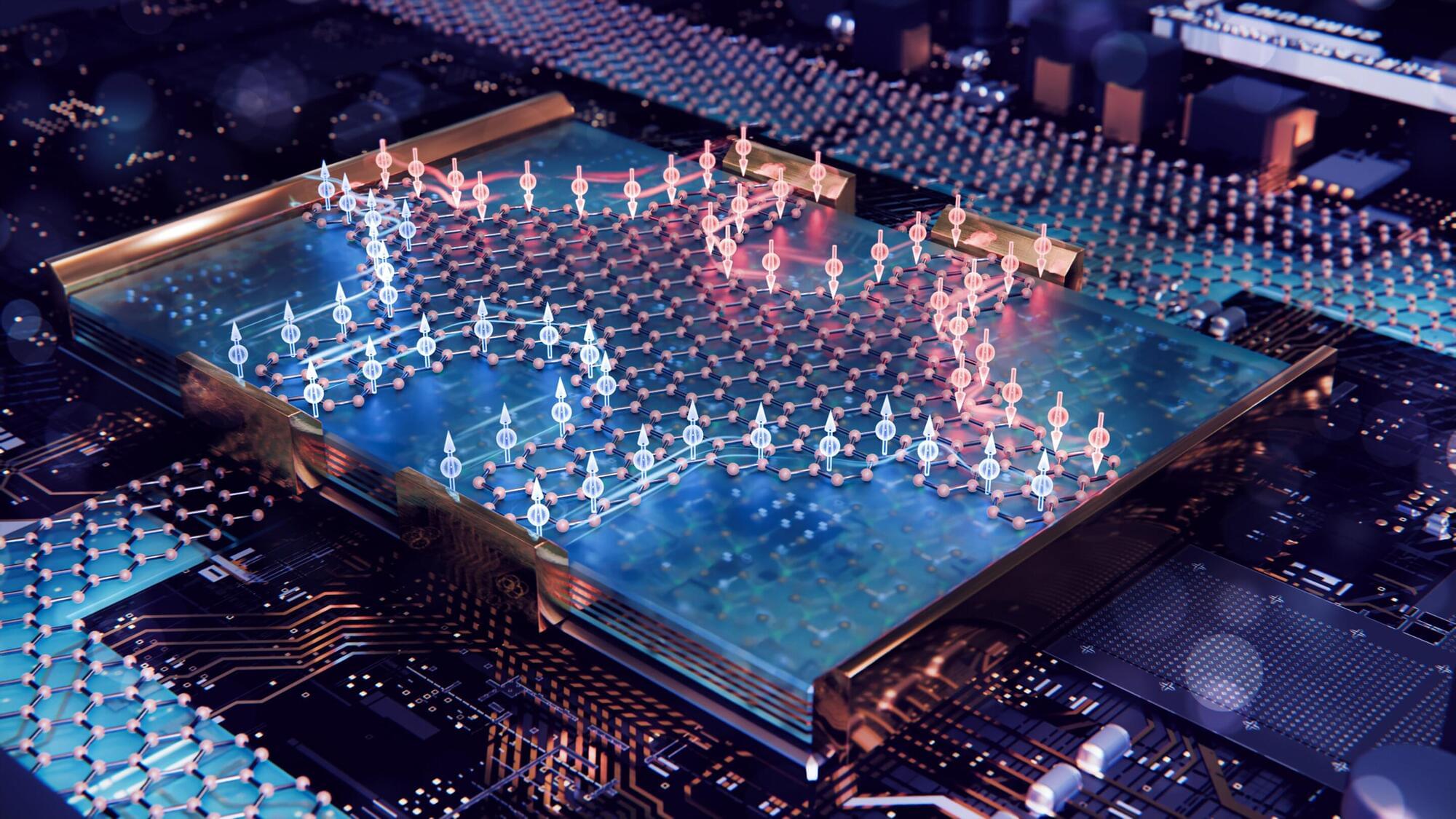

In the quantum realm, this implies that single particles of light, so-called photons, have well-defined quanta of OAM, which need to be conserved in light-matter interactions. In a recent study in Physical Review Letters, researchers from Tampere University and their collaborators have now pushed the test of these conservation laws to the absolute quantum limit. They explored whether the conservation of OAM quanta holds when a single photon is split into a photon pair.
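
As a rough illustration of what is being tested, the conservation rule can be written as a simple sum. The notation here is an assumption for illustration and is not taken from the study: $\ell_{\mathrm{in}}$ labels the OAM quantum number of the incoming photon, and $\ell_1$, $\ell_2$ label those of the two photons in the pair, each photon carrying an OAM of $\ell\hbar$.

$$
\ell_{\mathrm{in}} = \ell_1 + \ell_2
$$

For instance, if the incoming photon carries no OAM ($\ell_{\mathrm{in}} = 0$), the rule requires the pair to carry opposite values, $\ell_1 = -\ell_2$, so that a photon measured with $\ell_1 = +1$ would have a partner with $\ell_2 = -1$.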