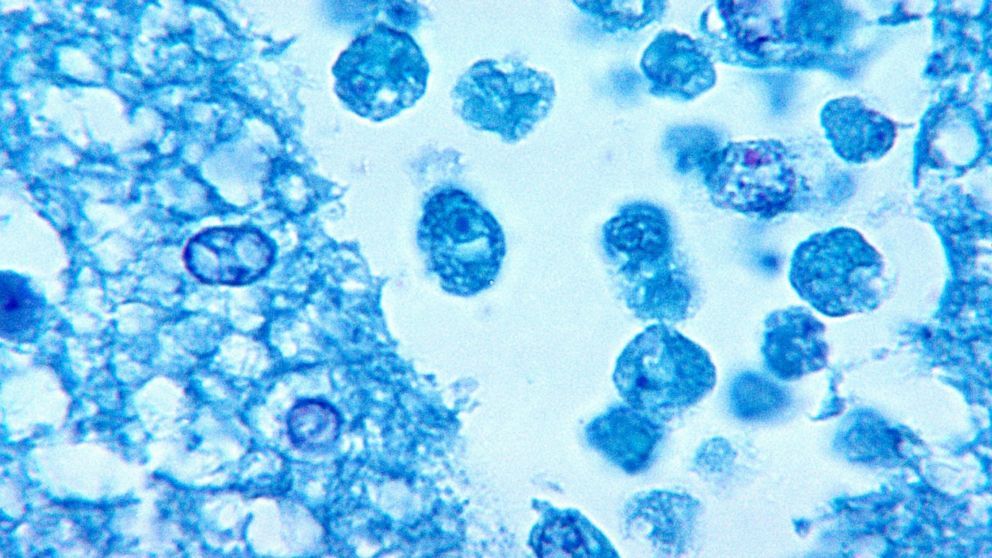

Definitely been seeing great research and success in biocomputing, which is why I have been looking more and more at this area of the industry. Bio/medical technology is our ultimate future state for the singularity. It is the key to the advances we need to defeat cancer and aging, enhance intelligence, and more; we have already seen early hints of what it can do for people, machines and data, the environment, and resources. However, a word of caution about DNA ownership and security: we will need proper governance and oversight in this space.

© iStock/Getty Images

How much storage do you have around the house? A few terabyte hard drives? What about USB sticks and old SATA drives? Humanity uses a staggering amount of storage, and our needs are only expanding as we build data centers, better cameras, and all sorts of other data-heavy gizmos. It’s a problem scientists from companies like IBM, Intel, and Microsoft are trying to solve, and the solution might be in our DNA.

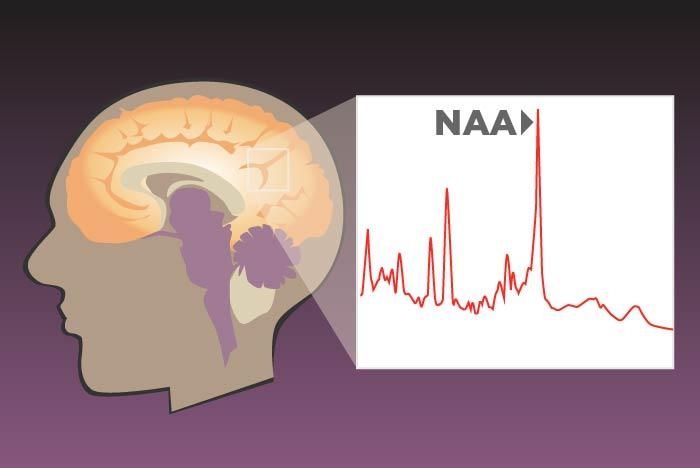

A recent Spectrum article takes a look at the quest to unlock the storage potential of DNA. DNA molecules carry the genetic information that living things are built from. The theory is that we can convert digital files into biological material by translating them from binary code into genetic code, the four-letter alphabet of the bases A, C, G, and T. That’s right: the future of storage could be test tubes.
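To make the binary-to-genetic-code idea concrete, here is a minimal sketch in Python. It assumes the simplest possible mapping, two bits per base (00 to A, 01 to C, 10 to G, 11 to T). That mapping and the function names `encode` and `decode` are illustrative assumptions, not the scheme used by any of the companies mentioned here; practical schemes add error correction and avoid long runs of the same base.

```python
# Hypothetical 2-bits-per-base mapping, chosen only for illustration.
# Real DNA storage schemes use more elaborate, error-tolerant encodings.
BITS_TO_BASE = {"00": "A", "01": "C", "10": "G", "11": "T"}
BASE_TO_BITS = {base: bits for bits, base in BITS_TO_BASE.items()}


def encode(data: bytes) -> str:
    """Translate bytes into a string of DNA bases, two bits per base."""
    bits = "".join(f"{byte:08b}" for byte in data)
    return "".join(BITS_TO_BASE[bits[i:i + 2]] for i in range(0, len(bits), 2))


def decode(strand: str) -> bytes:
    """Translate a string of DNA bases back into the original bytes."""
    bits = "".join(BASE_TO_BITS[base] for base in strand)
    return bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))


if __name__ == "__main__":
    strand = encode(b"Hi")
    print(strand)          # CAGACGGC
    print(decode(strand))  # b'Hi'
```

Each byte becomes four bases under this assumed mapping, so the two-byte string "Hi" turns into an eight-base strand, and decoding reverses the lookup to recover the original bytes.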

In April, representatives from IBM, Intel, Microsoft, and Twist Bioscience met with computer scientists and geneticists for a closed-door session to discuss the issue. The event was cosponsored by the U.S. Intelligence Advanced Research Projects Activity (IARPA), which reportedly may be interested in helping fund a “DNA hard drive.”
