Mark Hamilton, an MIT Ph.D. student in electrical engineering and computer science and affiliate of MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL), wants to use machines to understand how animals communicate. To do that, he set out first to create a system that can learn human language “from scratch.”


What using artificial intelligence to help monitor surgery can teach us

1. Privacy is important, but not always guaranteed. Grantcharov realized very quickly that the only way to get surgeons to use the black box was to make them feel protected from possible repercussions. He has designed the system to record actions but hide the identities of both patients and staff, even deleting all recordings within 30 days. His idea is that no individual should be punished for making a mistake.

The black boxes render each person in the recording anonymous; an algorithm distorts people’s voices and blurs out their faces, transforming them into shadowy, noir-like figures. So even if you know what happened, you can’t use it against an individual.

But this process is not perfect. Before 30-day-old recordings are automatically deleted, hospital administrators can still see the operating room number, the time of the operation, and the patient’s medical record number, so even if personnel are technically de-identified, they aren’t truly anonymous. The result is a sense that “Big Brother is watching,” says Christopher Mantyh, vice chair of clinical operations at Duke University Hospital, which has black boxes in seven operating rooms.
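The 30-day retention rule, and the metadata that survives de-identification, can be sketched as a simple purge step. This is a hypothetical illustration. The `Recording` fields and the `purge_expired` function are assumptions, not the black box's actual software. The point is that blurring faces and distorting voices does not remove fields such as the room, the time, and the medical record number, which is what Mantyh's concern is about.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List

# Retention window taken from the 30-day figure in the article.
RETENTION = timedelta(days=30)


@dataclass
class Recording:
    """Hypothetical record layout; field names are illustrative only."""
    operating_room: str          # still visible to administrators
    started_at: datetime         # time of the operation
    medical_record_number: str   # survives anonymization of faces and voices


def purge_expired(recordings: List[Recording], now: datetime) -> List[Recording]:
    """Keep only recordings younger than the retention window; drop the rest."""
    return [r for r in recordings if now - r.started_at < RETENTION]


if __name__ == "__main__":
    now = datetime(2024, 6, 1)
    recordings = [
        Recording("OR-3", now - timedelta(days=31), "MRN-0001"),  # hypothetical data
        Recording("OR-5", now - timedelta(days=5), "MRN-0002"),
    ]
    print([r.operating_room for r in purge_expired(recordings, now)])  # ['OR-5']
```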

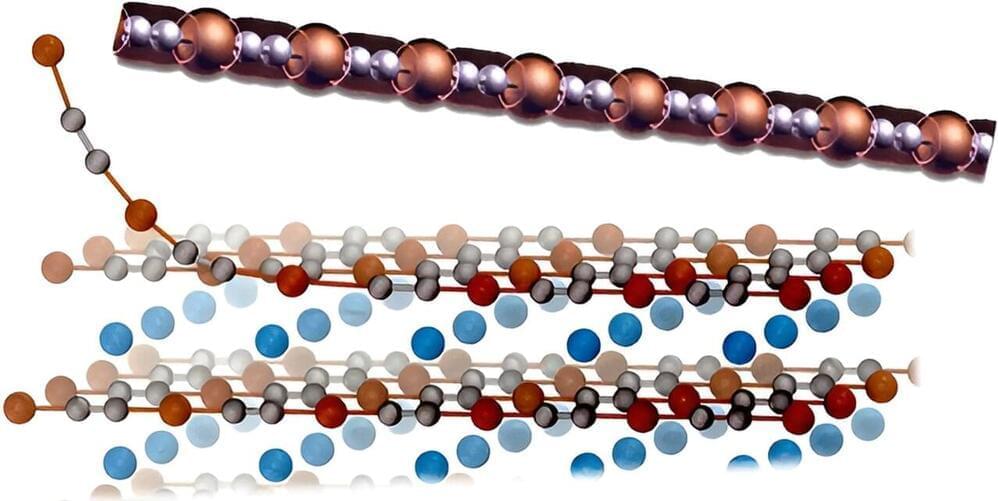

A chain of copper and carbon atoms may be the thinnest metallic wire

While carbon nanotubes have received most of the attention so far, they have proved very difficult to manufacture and control. Scientists are therefore eager to find other compounds that could be used to create nanowires and nanotubes with equally interesting properties but that are easier to handle.

So Chiara Cignarella, Davide Campi and Nicola Marzari decided to use computer simulations to parse known three-dimensional crystals, looking for those that, based on their structural and electronic properties, look like they could be easily “exfoliated,” meaning that a stable 1-D structure could be peeled away from them. The same method has been used successfully in the past to study 2-D materials, but this is the first application to their 1-D counterparts.

The researchers started from a collection of over 780,000 crystals, taken from various databases in the literature, in search of structures held together by van der Waals forces, the weak attractions that arise from fluctuations in the electron clouds of neighboring atoms. They then applied an algorithm that examined the spatial arrangement of the atoms to find crystals incorporating wire-like structures, and calculated how much energy would be needed to separate each 1-D structure from the rest of the crystal.
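The screening step can be illustrated with a simplified connectivity test. This is a common way to detect low-dimensional units in a crystal, though not necessarily the authors' exact procedure. Atoms closer than a chosen cutoff are treated as bonded, which is an assumed bonding criterion, and bonds to copies of atoms in neighboring unit cells are included. A breadth-first search then walks each bonded cluster. Whenever it reaches the same atom in a different cell, the cluster must repeat along that lattice translation. The number of independent translations (0, 1, 2 or 3) is the cluster's dimensionality, and a dimensionality of 1 marks a wire-like candidate. The energy calculation that follows is not shown, and all function names are hypothetical.

```python
import itertools
import math
from collections import deque
from fractions import Fraction


def _rank(vectors):
    """Rank of a list of integer 3-vectors, using exact Gaussian elimination."""
    rows = [[Fraction(x) for x in v] for v in vectors]
    rank = 0
    for col in range(3):
        pivot = next((k for k in range(rank, len(rows)) if rows[k][col] != 0), None)
        if pivot is None:
            continue
        rows[rank], rows[pivot] = rows[pivot], rows[rank]
        for k in range(len(rows)):
            if k != rank and rows[k][col] != 0:
                factor = rows[k][col] / rows[rank][col]
                rows[k] = [a - factor * b for a, b in zip(rows[k], rows[rank])]
        rank += 1
    return rank


def _to_cartesian(frac, lattice):
    """Convert a fractional vector to Cartesian; `lattice` rows are the cell vectors."""
    return [sum(frac[k] * lattice[k][d] for k in range(3)) for d in range(3)]


def cluster_dimensionalities(frac_coords, lattice, cutoff):
    """Return (atom indices, dimensionality) for every bonded cluster in the cell.

    Hypothetical bonding rule: two atoms are bonded if they are closer than
    `cutoff`, also counting copies in the 26 neighboring cells.
    """
    n = len(frac_coords)
    offsets = list(itertools.product((-1, 0, 1), repeat=3))
    neighbors = [[] for _ in range(n)]
    for i, j in itertools.product(range(n), repeat=2):
        for off in offsets:
            if i == j and off == (0, 0, 0):
                continue  # an atom is not bonded to itself in the same cell
            shift = [frac_coords[j][k] + off[k] - frac_coords[i][k] for k in range(3)]
            if math.hypot(*_to_cartesian(shift, lattice)) < cutoff:
                neighbors[i].append((j, off))

    cell_of = [None] * n  # cell in which the search first reached each atom
    clusters = []
    for root in range(n):
        if cell_of[root] is not None:
            continue
        cell_of[root] = (0, 0, 0)
        members, translations = [root], []
        queue = deque([root])
        while queue:
            i = queue.popleft()
            for j, off in neighbors[i]:
                target = tuple(a + b for a, b in zip(cell_of[i], off))
                if cell_of[j] is None:
                    cell_of[j] = target
                    members.append(j)
                    queue.append(j)
                else:
                    # Meeting atom j again in another cell: the cluster repeats
                    # along the lattice translation target - cell_of[j].
                    translations.append(tuple(a - b for a, b in zip(target, cell_of[j])))
        clusters.append((sorted(members), _rank(translations)))
    return clusters


# One atom per cell with a short a-axis: a 1-D chain running along a.
chain_cell = [[2.0, 0.0, 0.0], [0.0, 10.0, 0.0], [0.0, 0.0, 10.0]]
print(cluster_dimensionalities([[0.0, 0.0, 0.0]], chain_cell, cutoff=2.5))  # [([0], 1)]
```

The sketch assumes a roughly orthogonal cell and a cutoff shorter than every cell edge, because only the immediately neighboring cells (offsets of -1, 0 and +1) are searched.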

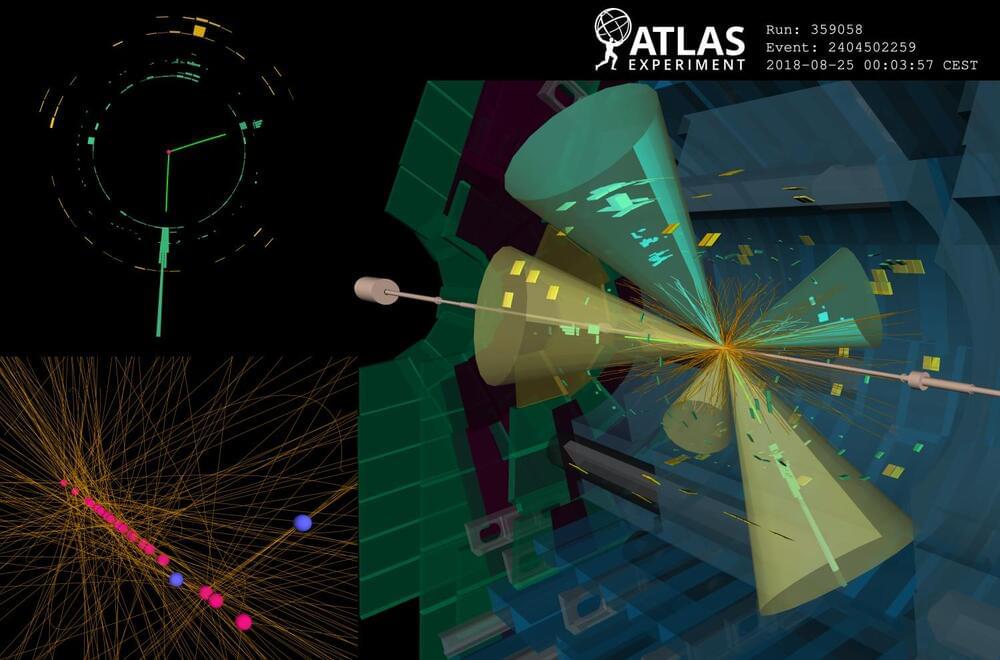

ATLAS chases long-lived particles with the Higgs boson

The Higgs boson has an extremely short lifespan, living for about 10⁻²² seconds before decaying into other particles. For comparison, in that brief time, light can travel only about 3 × 10⁻¹⁴ meters, a distance on the scale of an atomic nucleus. Scientists study the Higgs boson by detecting its decay products in particle collisions at the Large Hadron Collider. But what if the Higgs boson could also decay into unexpected new particles that are long-lived? What if these particles could travel a few centimeters through the detector before they decay? These long-lived particles (LLPs) could shed light on some of the universe’s biggest mysteries, such as why matter prevailed over antimatter in the early universe and the nature of dark matter.

Searching for LLPs is extremely challenging because they rarely interact with matter, making them difficult to observe in a particle detector. However, their unusual signatures provide exciting prospects for discovery. Unlike particles that leave a continuous track, LLPs produce noticeable displacements between their production and decay points within the detector. Identifying such a signature requires dedicated algorithms. In a new study submitted to Physical Review Letters, ATLAS scientists used a new algorithm to search for LLPs produced in the decay of Higgs bosons.

Boosting sensitivity with a new algorithm

Figure 1: A comparison of the radial distributions of reconstructed displaced vertices in a simulated long-lived particle (LLP) sample using the legacy and new (updated) track reconstruction configurations. The circular markers represent reconstructed vertices that are matched to LLP decay vertices and the dashed lines represent reconstructed vertices from background decay vertices (non-LLP). (Image: ATLAS Collaboration/CERN)

Despite being critical to LLP searches, dedicated reconstruction algorithms were previously so resource-intensive that they could only be applied to less than 10% of all recorded ATLAS data.
Recently, however, ATLAS scientists implemented a new “Large-Radius Tracking” (LRT) algorithm, which significantly speeds up the reconstruction of charged-particle trajectories in the ATLAS Inner Detector that do not point back to the primary proton-proton collision point, while drastically reducing backgrounds and random combinations of detector signals. The LRT algorithm is executed after the primary tracking iteration and uses exclusively the detector hits (energy deposits from charged particles recorded in individual detector elements) not already assigned to primary tracks. As a result, ATLAS saw an enormous increase in the efficiency of identifying LLP decays (see Figure 1). The new algorithm also cut CPU processing time by more than a factor of ten compared to the legacy implementation, and reduced disk space usage per event by more than a factor of 50. These improvements enabled physicists to fully integrate the LRT algorithm into the standard ATLAS event reconstruction chain. Now every recorded collision event can be scrutinized for the presence of new LLPs, greatly enhancing the discovery potential of such signatures.

Physicists are searching for Higgs bosons decaying into new long-lived particles, which may leave a ‘displaced’ signature in the ATLAS detector.

Exploring the dark with the Higgs boson

Figure 2: Observed 95% confidence-level limits on the decay of the Higgs boson to a pair of long-lived s particles that decay back to Standard Model particles, shown as a function of the mean proper decay length (cτ) of the long-lived particle. The observed limits for the Higgs portal model from the previous ATLAS search are shown with dotted lines.
(Image: ATLAS Collaboration/CERN)

In their new result, ATLAS scientists employed the LRT algorithm to search for LLPs that decay hadronically, leaving a distinct signature of one or more hadronic “jets” of particles originating at a position significantly displaced from the proton–proton collision point (a displaced vertex). Physicists focused on the Higgs “portal” model, in which the Higgs boson mediates interactions with dark-matter particles through its coupling to a neutral boson s, resulting in exotic decays of the Higgs boson to a pair of long-lived s particles that decay into Standard Model particles. The ATLAS team studied collision events with characteristics consistent with the production of a Higgs boson.

The background processes that mimic the LLP signature are complex and challenging to model. To achieve good discrimination between signal and background processes, ATLAS physicists used a machine learning algorithm trained to isolate events with jets arising from LLP decays. Complementary to this, a dedicated displaced-vertex reconstruction algorithm was used to pinpoint the origin of hadronic jets from LLP decays.

This new search did not uncover any events featuring Higgs-boson decays to LLPs. It improves the bounds on Higgs-boson decays to LLPs by a factor of 10 to 40 compared to the previous search using the exact same dataset (see Figure 2)! For the first time at the LHC, bounds on exotic decays of the Higgs boson for low LLP masses (less than 16 GeV) have surpassed results from direct searches for exotic Higgs-boson decays to undetected states.

About the event display: A 13 TeV collision event recorded by the ATLAS experiment containing two decay vertices (blue circles) significantly displaced from the beam line, along with “prompt”, non-displaced decay vertices (pink circles).
The event characteristics are compatible with what would be expected if a Higgs boson were produced in association with a Z boson (decaying to two electrons, indicated by green towers) and decayed into two LLPs (each decaying into two b-quarks). Tracks are shown in yellow and jets are indicated by cones. The green and yellow blocks correspond to energy deposits in the electromagnetic and hadronic calorimeters, respectively. (Image: ATLAS Collaboration/CERN)

Learn more

- Search for light long-lived particles in proton-proton collisions at 13 TeV using displaced vertices in the ATLAS inner detector (submitted to PRL, arXiv:2403.15332)
- Performance of the reconstruction of large impact parameter tracks in the inner detector of ATLAS (Eur. Phys. J. C 83 (2023) 1081, arXiv:2304.12867)
- Search for exotic decays of the Higgs boson into long-lived particles in proton-proton collisions at 13 TeV using displaced vertices in the ATLAS inner detector (JHEP 11 (2021) 229, arXiv:2107.06092)
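The bookkeeping behind Large-Radius Tracking, a second tracking pass that sees only the hits the primary pass left unassigned, can be sketched in a few lines of Python. This is a hypothetical illustration. The `Hit` type, the `two_pass_tracking` function and the toy track finders are assumptions made for the example, and the trivial grouping rules in the toy finders stand in for real track reconstruction.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple


@dataclass(frozen=True)
class Hit:
    """Toy detector hit: an identifier plus a transverse position in millimeters."""
    hit_id: int
    x: float
    y: float


Track = Tuple[Hit, ...]
TrackFinder = Callable[[Sequence[Hit]], List[Track]]


def two_pass_tracking(hits: Sequence[Hit],
                      primary_finder: TrackFinder,
                      lrt_finder: TrackFinder) -> Tuple[List[Track], List[Track]]:
    """Run primary tracking, then a large-radius pass over the leftover hits only."""
    primary_tracks = primary_finder(hits)
    # Hits already assigned to a primary track are withheld from the second pass.
    used = {hit.hit_id for track in primary_tracks for hit in track}
    leftover = [hit for hit in hits if hit.hit_id not in used]
    return primary_tracks, lrt_finder(leftover)


if __name__ == "__main__":
    # Placeholder finders, not real tracking: the "primary" one groups hits on
    # the y = 0 line; the "LRT" one bundles whatever hits remain into one track.
    def toy_primary(hits: Sequence[Hit]) -> List[Track]:
        return [tuple(h for h in hits if h.y == 0.0)]

    def toy_lrt(hits: Sequence[Hit]) -> List[Track]:
        return [tuple(hits)] if len(hits) >= 2 else []

    event = [Hit(1, 30.0, 0.0), Hit(2, 60.0, 0.0), Hit(3, 100.0, 40.0), Hit(4, 150.0, 45.0)]
    primary, displaced = two_pass_tracking(event, toy_primary, toy_lrt)
    print([h.hit_id for h in displaced[0]])  # [3, 4]
```

Because the second pass only ever sees the leftover hits, it works on a much smaller input than the full event. This illustrates why running it after primary tracking can keep the extra cost down.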

The Missing Piece: Combining Foundation Models and Open-Endedness for Artificial Superhuman Intelligence (ASI)

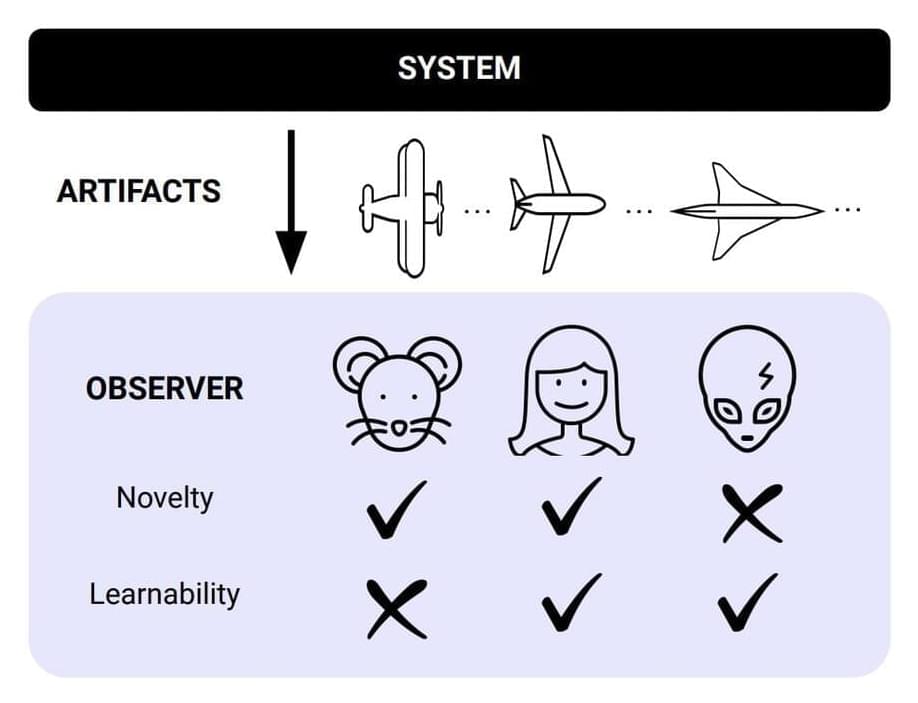

Recent advances in artificial intelligence, primarily driven by foundation models, have enabled impressive progress. However, achieving artificial general intelligence, which involves reaching human-level performance across various tasks, remains a significant challenge. A critical missing component is a formal description of what it would take for an autonomous system to self-improve towards increasingly creative and diverse discoveries without end: a “Cambrian explosion” of emergent capabilities, behaviors, and artifacts. This kind of open-ended invention is how humans and society accumulate new knowledge and technology, making it essential for artificial superhuman intelligence, yet the creation of open-ended, ever-self-improving AI remains elusive.

DeepMind researchers propose a concrete formal definition of open-endedness in AI systems from the perspective of novelty and learnability. They illustrate a path towards achieving artificial superhuman intelligence (ASI) by developing open-ended systems built upon foundation models. These open-ended systems would be capable of making robust, relevant discoveries that are understandable and beneficial to humans. The researchers argue that such open-endedness, enabled by the combination of foundation models and open-ended algorithms, is an essential property for any ASI system to continuously expand its capabilities and knowledge in a way that can be utilized by humanity.

The researchers provide a formal definition of open-endedness from the perspective of an observer. An open-ended system produces a sequence of artifacts that are both novel and learnable. Novelty is defined as artifacts becoming increasingly unpredictable to the observer’s model over time. Learnability requires that conditioning on a longer history of past artifacts makes future artifacts more predictable. The observer uses a statistical model to predict future artifacts based on the history, judging the quality of predictions using a loss metric. Interestingness is represented by the observer’s choice of loss function, capturing which features they find useful to learn about. This formal definition quantifies the key intuition that an open-ended system endlessly generates artifacts that are both novel and meaningful to the observer.
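The two conditions can be made concrete with a table of the observer's expected losses. In the sketch below, `loss[t][T]` is assumed to hold the expected loss of the observer's prediction of artifact T after seeing the history up to step t. The function names and the synthetic table are hypothetical. Novelty checks that, for a fixed history, artifacts further in the future are harder to predict. Learnability checks that, for a fixed target artifact, a longer history makes it easier to predict.

```python
from typing import Sequence

# loss[t][T]: expected loss predicting artifact T from history up to t (only T > t is read).
LossTable = Sequence[Sequence[float]]


def is_novel(loss: LossTable) -> bool:
    """For every fixed history t, the loss must grow for artifacts further in the future."""
    n = len(loss)
    return all(loss[t][T2] > loss[t][T1]
               for t in range(n)
               for T1 in range(t + 1, n)
               for T2 in range(T1 + 1, n))


def is_learnable(loss: LossTable) -> bool:
    """For every fixed artifact T, a longer history (t2 > t1) must lower the loss."""
    n = len(loss)
    return all(loss[t2][T] < loss[t1][T]
               for T in range(n)
               for t1 in range(T)
               for t2 in range(t1 + 1, T))


# Synthetic table: the loss grows with the gap between history and target.
table = [[float(T - t) for T in range(5)] for t in range(5)]
print(is_novel(table), is_learnable(table))  # True True
```

A flat table, where extra history never helps and later artifacts are no harder to predict, fails both checks.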

Researcher suggests that gravity can exist without mass, mitigating the need for hypothetical dark matter


Dark matter is a hypothetical form of matter that is implied by gravitational effects that can’t be explained by general relativity unless more matter is present in the universe than can be seen. It remains virtually as mysterious as it was nearly a century ago when first suggested by Dutch astronomer Jan Oort in 1932 to explain the so-called “missing mass” necessary for things like galaxies to clump together.

Now Dr. Richard Lieu at The University of Alabama in Huntsville (UAH) has published a paper in the Monthly Notices of the Royal Astronomical Society that shows, for the first time, how gravity can exist without mass, providing an alternative theory that could potentially mitigate the need for dark matter.

“My own inspiration came from my pursuit for another solution to the gravitational field equations of general relativity—the simplified version of which, applicable to the conditions of galaxies and clusters of galaxies, is known as the Poisson equation—which gives a finite gravitation force in the absence of any detectable mass,” says Lieu, a distinguished professor of physics and astronomy at UAH, a part of the University of Alabama System.
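For reference, the Poisson equation that Lieu mentions is shown below in its standard textbook form. It relates the gravitational potential Φ to the mass density ρ, with the gravitational field given by minus the gradient of Φ. In the spherically symmetric case, integrating it (Gauss's law for gravity) expresses the radial field at radius r in terms of the enclosed mass M(<r). This is standard background, not a reproduction of the solution in Lieu's paper.

```latex
\nabla^{2}\Phi = 4\pi G \rho, \qquad \mathbf{g} = -\nabla \Phi,
\qquad
g_r(r) = -\frac{G\,M(<r)}{r^{2}}, \quad M(<r) = \int_{0}^{r} 4\pi r'^{2} \rho(r')\,dr'
```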

Brian Greene — What Was There Before The Big Bang?

American theoretical physicist Brian Greene explains various hypotheses about what caused the Big Bang. Greene is an excellent science communicator who makes complex cosmological concepts easier to understand.

The Big Bang theory explains the evolution of the universe from a starting density and temperature far beyond humanity’s current ability to replicate. The most extreme conditions and earliest times of the universe therefore remain speculative, and any explanation for what caused the Big Bang should be taken with a grain of salt. Nevertheless, that shouldn’t stop us from asking questions such as what was there before the Big Bang.

Brian Greene mentions the possibility that time itself may have originated with the birth of the cosmos about 13.8 billion years ago.

To understand how the Universe came to be, scientists combine mathematical models with observations and develop workable theories which explain the evolution of the cosmos. The Big Bang theory, which is built upon the equations of classical general relativity, indicates a singularity at the origin of cosmic time.

However, the physical theories of general relativity and quantum mechanics, as currently formulated, are not applicable before the Planck epoch, the earliest period in the history of the universe. Correcting this will require a consistent theory of quantum gravity.
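The Planck epoch is usually associated with times up to roughly 10⁻⁴³ seconds after the Big Bang. That scale is set by the Planck time, t_P = √(ħG/c⁵). A minimal Python check of that number, using CODATA 2018 values for the constants, is shown below. The function name is illustrative.

```python
import math

HBAR = 1.054571817e-34  # reduced Planck constant, J s (CODATA 2018)
G = 6.67430e-11         # Newtonian constant of gravitation, m^3 kg^-1 s^-2 (CODATA 2018)
C = 299_792_458.0       # speed of light in vacuum, m/s (exact by definition)


def planck_time(hbar: float = HBAR, g: float = G, c: float = C) -> float:
    """Return t_P = sqrt(hbar * G / c**5) in seconds."""
    return math.sqrt(hbar * g / c**5)


print(f"{planck_time():.3e} s")  # 5.391e-44 s
```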

Certain treatments of quantum gravity imply that time itself could be an emergent property, which leads some physicists to conclude that time did not exist before the Big Bang. Others remain open to the possibility that time preceded the Big Bang.

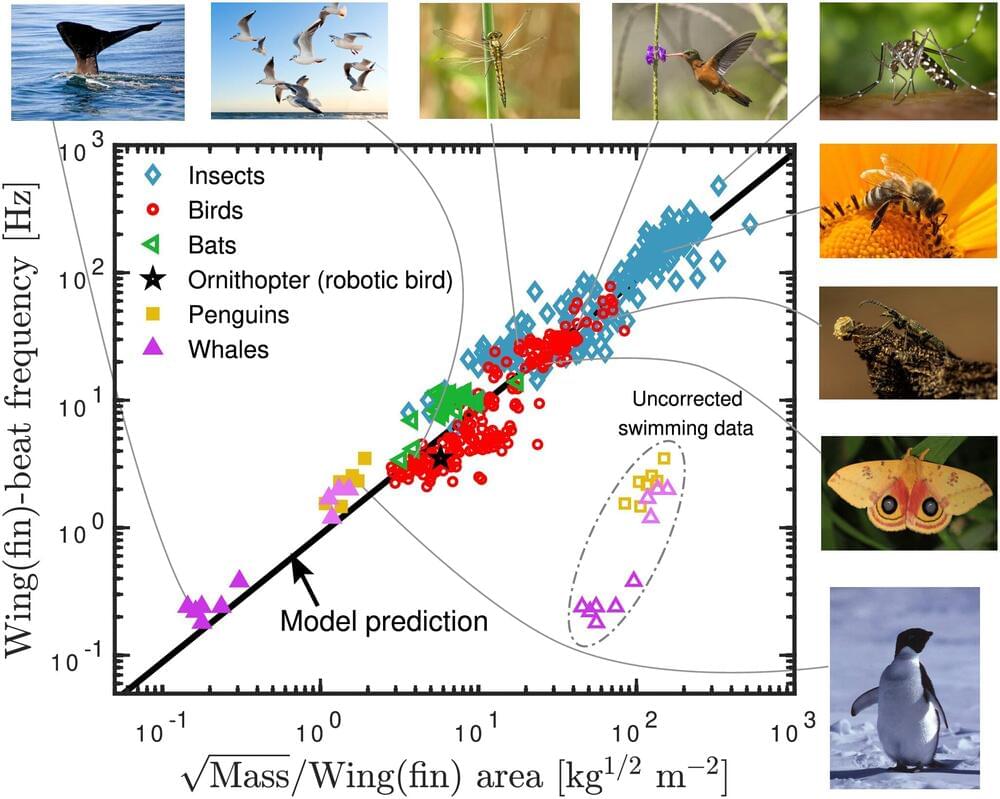

Flapping frequency of birds, insects, bats and whales predicted with just body mass and wing area

A single universal equation can closely approximate the frequency of wingbeats and fin strokes made by birds, insects, bats and whales, despite their different body sizes and wing shapes, Jens Højgaard Jensen and colleagues from Roskilde University in Denmark report in a new study published in PLOS ONE on June 5.

The ability to fly has evolved independently in many different animal groups. To minimize the energy required to fly, biologists expect the frequency at which animals flap their wings to be determined by the natural resonance frequency of the wing. However, finding a universal mathematical description of flapping flight has proved difficult.

The researchers used dimensional analysis to derive an equation that describes the frequency of wingbeats of flying birds, insects and bats, and the fin strokes of diving animals, including penguins and whales.
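The core of a dimensional-analysis argument can be checked mechanically by tracking the exponents of mass, length and time. The sketch below makes an illustrative assumption that wingbeat frequency depends on body mass m, wing (or fin) area A, gravitational acceleration g and fluid density ρ. It then confirms that √(m g / ρ) / A has units of frequency. This variable set and combination show how such an argument can run; they are not the equation published in the study. Dimensional analysis alone also allows a second dimensionless group, m / (ρ A^(3/2)), so the role of that group and any numerical prefactor would still have to be set by further assumptions or by data.

```python
from fractions import Fraction
from typing import Dict, Tuple

# Exponents of (mass, length, time) for each assumed input quantity.
Dim = Tuple[Fraction, Fraction, Fraction]
BASE: Dict[str, Dim] = {
    "m": (Fraction(1), Fraction(0), Fraction(0)),     # body mass [kg]
    "A": (Fraction(0), Fraction(2), Fraction(0)),     # wing or fin area [m^2]
    "g": (Fraction(0), Fraction(1), Fraction(-2)),    # gravitational acceleration [m/s^2]
    "rho": (Fraction(1), Fraction(-3), Fraction(0)),  # density of air or water [kg/m^3]
}


def dimension(powers: Dict[str, Fraction]) -> Dim:
    """Dimensions of the product of BASE quantities raised to the given powers."""
    total = [Fraction(0), Fraction(0), Fraction(0)]
    for name, power in powers.items():
        for k in range(3):
            total[k] += BASE[name][k] * power
    return (total[0], total[1], total[2])


half = Fraction(1, 2)
# sqrt(m * g / rho) / A: one combination of the inputs with units of 1/s.
freq_dims = dimension({"m": half, "g": half, "rho": -half, "A": Fraction(-1)})
print(freq_dims == (0, 0, -1))  # True
```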

Google’s Quantum AI Challenges Long-Standing Physics Theories

Quantum simulators are now addressing complex physics problems, such as the dynamics of 1D quantum magnets and their potential similarities to classical phenomena like snow accumulation. Recent research confirms some aspects of the conjecture that such magnets belong to the Kardar-Parisi-Zhang (KPZ) universality class, but also highlights challenges in fully validating that conjecture in quantum systems. Credit: Google LLC

Quantum simulators are advancing quickly and can now tackle issues previously confined to theoretical physics and numerical simulation. Researchers at Google Quantum AI and their collaborators demonstrated this new potential by exploring dynamics in one-dimensional quantum magnets, specifically focusing on chains of spin-1/2 particles.

They investigated a statistical mechanics problem that has been the focus of attention in recent years: Could such a 1D quantum magnet be described by the same equations as snow falling and clumping together? It seems strange that the two systems would be connected, but in 2019, researchers at the University of Ljubljana found striking numerical evidence that led them to conjecture that the spin dynamics in the spin-1/2 Heisenberg model are in the Kardar-Parisi-Zhang (KPZ) universality class, based on the scaling of the infinite-temperature spin-spin correlation function.
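One signature of KPZ scaling in the correlation function is that its spatial width grows as t^(1/z), with dynamical exponent z = 3/2. For ordinary diffusion, z = 2. A minimal sketch of how such an exponent could be read off is a least-squares fit of log(width) against log(t). The width measure (for example, the spread of the correlation profile) and the synthetic data below are assumptions for illustration, not values from the Google or Ljubljana studies.

```python
import math
from typing import Sequence


def dynamical_exponent(times: Sequence[float], widths: Sequence[float]) -> float:
    """Estimate z from width(t) ~ t^(1/z) via a least-squares fit in log-log space."""
    xs = [math.log(t) for t in times]
    ys = [math.log(w) for w in widths]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    return 1.0 / slope  # the fitted slope is 1/z


# Synthetic (not measured) widths that grow as t^(2/3), i.e. KPZ-like z = 3/2.
times = [1.0, 2.0, 4.0, 8.0, 16.0, 32.0]
widths = [t ** (2.0 / 3.0) for t in times]
print(round(dynamical_exponent(times, widths), 3))  # 1.5
```

Applied to diffusive data that grows as t^(1/2), the same fit would return z close to 2. This illustrates how the exponent separates the two behaviors.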