By making use of randomness, a team has created a simple algorithm for estimating large numbers of distinct objects in a stream of data.
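The teaser above does not say how the algorithm works, so the following is only a minimal sketch of one well-known sampling-based way to estimate distinct counts in a stream, not necessarily the team's method. The function name `estimate_distinct` and the parameters `thresh` and `seed` are hypothetical, chosen for illustration. The idea is to keep a small random sample of items, halve the sampling probability whenever the sample fills up, and scale the final sample size back up.

```python
import random


def estimate_distinct(stream, thresh=1000, seed=None):
    """Estimate the number of distinct items in `stream`.

    Illustrative sketch: keeps fewer than `thresh` items in memory.
    `thresh` and `seed` are assumed parameters, not from the article.
    """
    rng = random.Random(seed)
    p = 1.0         # current probability of keeping an item
    sample = set()  # items currently kept

    for item in stream:
        # Forget any earlier decision about this item, then decide again
        # with the current probability, so only its last occurrence counts.
        sample.discard(item)
        if rng.random() < p:
            sample.add(item)

        # When the sample is full, drop each kept item with probability 1/2
        # and halve p so later items are kept at the same rate.
        while len(sample) >= thresh:
            sample = {x for x in sample if rng.random() < 0.5}
            p /= 2

    # Each distinct item survives with probability about p, so scale up.
    return len(sample) / p
```

If the stream holds fewer distinct items than `thresh`, the sampling probability never drops below 1 and the result is exact. For larger streams the result is an estimate whose accuracy improves as `thresh` grows, at the cost of more memory.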

Oscar Wilde once said that sarcasm was the lowest form of wit, but the highest form of intelligence. Perhaps that is due to how difficult it is to use and understand. Sarcasm is notoriously tricky to convey through text—even in person, it can be easily misinterpreted. The subtle changes in tone that convey sarcasm often confuse computer algorithms as well, limiting virtual assistants and content analysis tools.

“Big machine learning models have to consume lots of power to crunch data and come out with the right parameters, whereas our model and training is so extremely simple that you could have systems learning on the fly,” said Robert Kent.

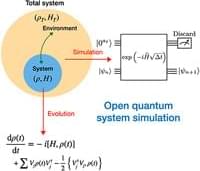

How can machine learning be made more efficient? A recent study published in Nature Communications addresses this question. A team of researchers from The Ohio State University investigated whether digital twins (copies) can be used to improve machine learning-based controllers such as those currently used in self-driving cars. These controllers require large amounts of computing power and are often challenging to use. The study could help researchers better understand how future machine learning algorithms can achieve better control and efficiency.

“The problem with most machine learning-based controllers is that they use a lot of energy or power, and they take a long time to evaluate,” said Robert Kent, who is a graduate student in the Department of Physics at The Ohio State University and lead author of the study. “Developing traditional controllers for them has also been difficult because chaotic systems are extremely sensitive to small changes.”

For the study, the researchers created a fingertip-sized digital twin that can function without the internet, with the goal of improving the productivity and capabilities of a machine learning-based controller. They found that the controller's power needs decreased thanks to a machine learning method known as reservoir computing, which involves reading in data and mapping it out to the target location. According to the researchers, this new method can be used to simplify complex systems, including self-driving cars, while decreasing the amount of power and energy required to run the system.

Scientists have published the most detailed data set to date on the neural connections of the brain, obtained from a cubic millimeter of tissue.

A cubic millimeter of brain tissue may not sound like much. But considering that this tiny cube contains 57,000 cells, 230 millimeters of blood vessels, and 150 million synapses, all amounting to 1,400 terabytes of data, Harvard and Google researchers have just accomplished something stupendous.

Led by Jeff Lichtman, the Jeremy R. Knowles Professor of Molecular and Cellular Biology and newly appointed dean of science, the Harvard team helped create the largest 3D brain reconstruction to date, showing in vivid detail each cell and its web of connections in a piece of temporal cortex about half the size of a rice grain.

Published in Science, the study is the latest development in a nearly 10-year collaboration with scientists at Google Research, combining Lichtman’s electron microscopy imaging with AI algorithms to color-code and reconstruct the extremely complex wiring of mammal brains. The paper’s three first co-authors are former Harvard postdoc Alexander Shapson-Coe, Michał Januszewski of Google Research, and Harvard postdoc Daniel Berger.

ALGORITHMS THAT DECODE IMAGES A PERSON SEES OR IMAGINES will enable visual representations of dreams a sleeper is having, and give deeper insights into emotionally disturbed or mentally ill patients.

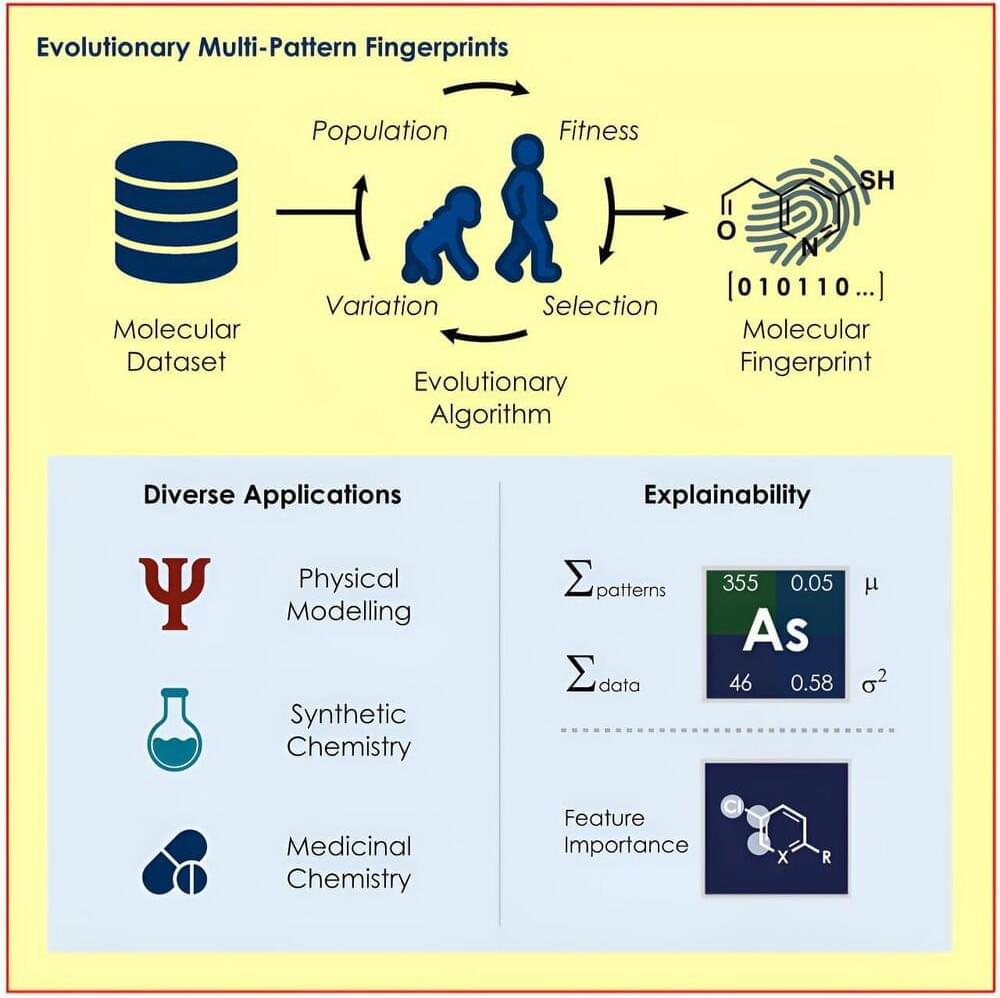

A team led by Prof Frank Glorius from the Institute of Organic Chemistry at the University of Münster has developed an evolutionary algorithm that identifies the structures in a molecule that are particularly relevant to a given question and uses them to encode the properties of the molecules for various machine-learning models.