Google claims to have achieved a verifiable quantum breakthrough with a new algorithm that outperforms the most powerful supercomputers.

All three are explained in more detail in the bill, but they arrive at broadly the same destination. If approved, the law would bar 3D printer brands from selling their wares in Washington State unless the printers include stringent controls that stop them from printing firearms, or indeed parts that could be used to modify existing weapons.

According to the bill, violating this proposed law would be a class C felony, which means anyone found in violation of these terms could face up to five years in prison and a $15,000 fine.

Washington is not the first state to propose addressing 3D-printed firearms through legislation, and it is unlikely to be the last. Earlier this month, New York took steps to ban 3D-printed guns, proposing mandatory safeguards on 3D printers and a crackdown on the sharing and possession of 3D files for guns or gun components.

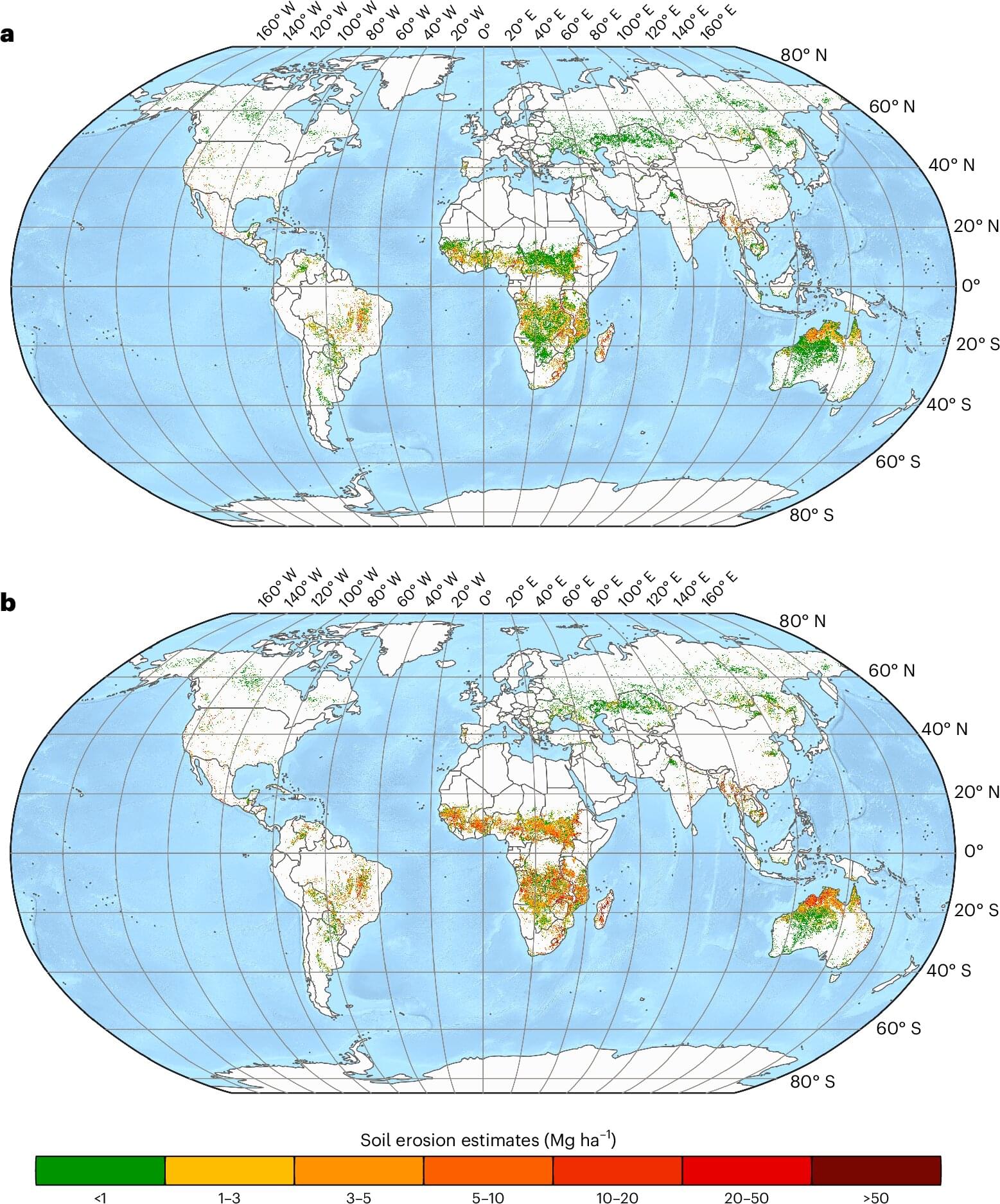

Wildfires are devastating events that destroy forests, burn homes and force people to leave their communities. They also have a profound impact on local ecosystems. But there is another problem that has been largely overlooked until now. When rain falls on the charred landscapes, it increases surface runoff and soil erosion that can last for decades, according to a new study published in Nature Geoscience.

On average, wildfires burn approximately 4 million square kilometers of land per year, an area equivalent to the size of the European Union. Despite this, there hasn’t been a global long-term assessment of how these fires affect soil erosion over time. So researchers from the European Commission’s Joint Research Center and the University of Basel, Switzerland, studied two decades’ worth of data to compile the world’s first global map of post-fire soil erosion.

The team used the Revised Universal Soil Loss Equation (RUSLE), a widely used empirical erosion model, which they adapted for post-fire conditions to calculate how much soil moves based on factors such as vegetation cover and rainfall intensity. They combined this with satellite data on global wildfires from 2001 to 2019 and compared the burned areas with how the land looked before the flames took hold.
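As a rough illustration of how RUSLE combines its inputs, the sketch below multiplies the standard factors, A = R · K · LS · C · P, where A is mean annual soil loss. It is a minimal sketch, not the researchers' model: the names `RusleFactors`, `soil_loss` and `with_burned_cover` are hypothetical, the numbers are toy values, and the post-fire step simply assumes that burning raises the cover-management factor C. The article does not say how the model was actually adapted.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class RusleFactors:
    """Inputs to the Revised Universal Soil Loss Equation: A = R * K * LS * C * P."""
    R: float        # rainfall-runoff erosivity, MJ mm ha^-1 h^-1 yr^-1
    K: float        # soil erodibility, t ha h ha^-1 MJ^-1 mm^-1
    LS: float       # slope length and steepness factor (dimensionless)
    C: float        # cover-management factor (dimensionless, 0 to 1)
    P: float = 1.0  # support-practice factor (dimensionless, 1 = no conservation practice)


def soil_loss(f: RusleFactors) -> float:
    """Return mean annual soil loss A in t ha^-1 yr^-1."""
    for name in ("R", "K", "LS", "C", "P"):
        if getattr(f, name) < 0:
            raise ValueError(f"{name} must be non-negative")
    return f.R * f.K * f.LS * f.C * f.P


def with_burned_cover(f: RusleFactors, burned_c: float) -> RusleFactors:
    """Hypothetical post-fire adjustment in which only the C factor changes.

    Fire strips vegetation, which raises C. The study's actual adaptation
    is not described in the article and may differ.
    """
    return replace(f, C=burned_c)


if __name__ == "__main__":
    # Toy values for illustration only, not data from the study.
    before = RusleFactors(R=1000.0, K=0.03, LS=2.0, C=0.01)
    after = with_burned_cover(before, burned_c=0.2)
    print(f"pre-fire:  {soil_loss(before):.2f} t/ha/yr")
    print(f"post-fire: {soil_loss(after):.2f} t/ha/yr")
```

Because only C changes between the two runs, the estimated loss scales by the ratio of the two C values, which is 20-fold with these toy numbers.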

The Musk Blueprint: Navigating the Supersonic Tsunami to Hyperabundance

When Exponential Curves Multiply: Understanding the Triple Acceleration

On January 22, 2026, Elon Musk sat down with BlackRock CEO Larry Fink at the World Economic Forum in Davos and delivered what may be the most important articulation of humanity’s near-term trajectory since the invention of the internet.

Not because Musk said anything fundamentally new—his companies have been demonstrating this reality for years—but because he connected the dots in a way that makes the path to hyperabundance undeniable.


This is not visionary speculation.

This is engineering analysis from someone building the physical infrastructure of abundance in real time.

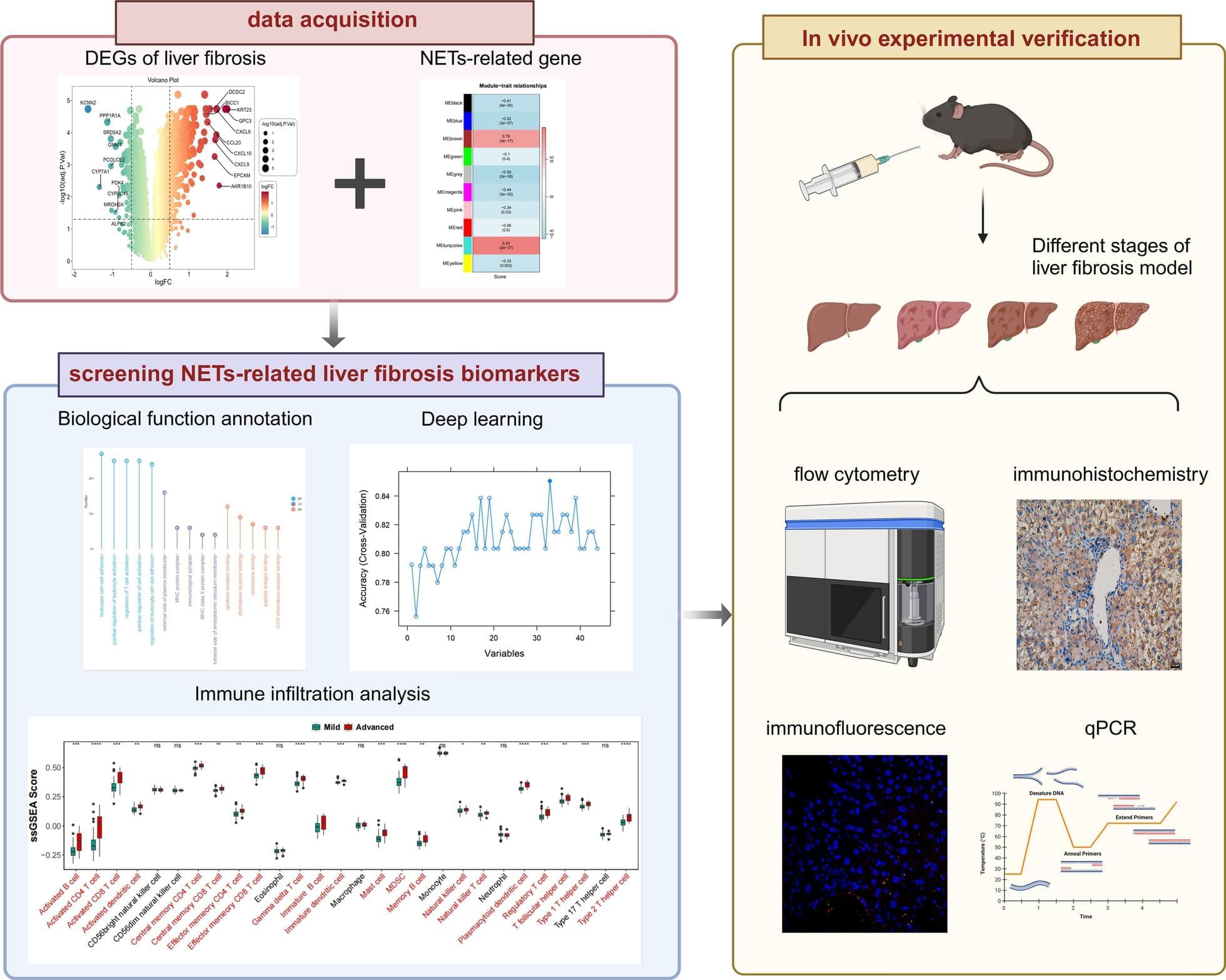

The diagnostic and therapeutic potential of neutrophil extracellular traps (NETs) in liver fibrosis (LF) has not been fully explored. We aim to screen and verify NETs-related liver fibrosis biomarkers through machine learning.

To obtain NETs-related differentially expressed genes (NETs-DEGs), differential expression analysis and weighted gene co-expression network analysis (WGCNA) were performed on the GEO datasets (GSE84044, GSE49541) and the NETs dataset. Enrichment analysis and protein interaction analysis were used to reveal the candidate genes and potential mechanisms of NETs-related liver fibrosis. Biomarkers were screened using the SVM-RFE and Boruta machine learning algorithms, followed by immune infiltration analysis. A multi-stage mouse model of fibrosis was constructed, and neutrophil infiltration, NETs accumulation and NETs-related biomarkers were characterized by immunohistochemistry, immunofluorescence, flow cytometry and qPCR. Finally, the molecular regulatory network of the biomarkers and potential drugs targeting them were predicted.
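The first step of that pipeline can be pictured with a simplified, standard-library sketch. It assumes, as is common in such studies but not stated outright here, that NETs-DEGs are the genes shared by the differential-expression results, a WGCNA module and the NETs gene set. It replaces the usual statistical tooling (for example limma with adjusted p-values) with a plain log2 fold change and Welch's t statistic at illustrative thresholds. The function names, thresholds and gene names are hypothetical, and the machine learning steps (SVM-RFE, Boruta) are not shown.

```python
import math
from statistics import mean, variance


def welch_t(a: list[float], b: list[float]) -> float:
    """Welch's t statistic for two independent samples with unequal variances."""
    se = math.sqrt(variance(a) / len(a) + variance(b) / len(b))
    return (mean(a) - mean(b)) / se if se > 0 else 0.0


def differential_genes(
    expr: dict[str, list[float]],
    case_idx: list[int],
    ctrl_idx: list[int],
    min_abs_log2fc: float = 1.0,
    min_abs_t: float = 2.0,
) -> set[str]:
    """Flag genes whose mean log2 expression differs between case and control.

    expr maps gene -> log2-scale expression, one value per sample.
    Both thresholds are illustrative, not the cut-offs used in the study.
    """
    degs = set()
    for gene, values in expr.items():
        case = [values[i] for i in case_idx]
        ctrl = [values[i] for i in ctrl_idx]
        log2fc = mean(case) - mean(ctrl)  # difference of log2 means = log2 fold change
        if abs(log2fc) >= min_abs_log2fc and abs(welch_t(case, ctrl)) >= min_abs_t:
            degs.add(gene)
    return degs


def nets_degs(degs: set[str], module_genes: set[str], nets_genes: set[str]) -> set[str]:
    """Assumed combination step: keep only genes present in all three sets."""
    return degs & module_genes & nets_genes


if __name__ == "__main__":
    # Toy data: three "fibrotic" samples (0-2) and three "healthy" samples (3-5).
    expr = {
        "GENE_A": [8.0, 8.2, 7.9, 5.0, 5.1, 4.9],
        "GENE_B": [6.0, 6.1, 6.2, 4.0, 4.1, 3.9],
        "GENE_C": [10.0, 10.1, 9.9, 10.0, 10.05, 9.95],
    }
    degs = differential_genes(expr, case_idx=[0, 1, 2], ctrl_idx=[3, 4, 5])
    module = {"GENE_A", "GENE_B", "GENE_C"}  # hypothetical WGCNA module
    nets = {"GENE_A", "GENE_D"}              # hypothetical NETs gene set
    print(sorted(degs), sorted(nets_degs(degs, module, nets)))
```

Set intersection keeps the final list conservative: a gene must pass the expression filter, co-express with the module, and appear in the NETs gene set.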

A total of 166 NETs-DEGs were identified. Enrichment analysis showed that these genes were mainly enriched in the chemokine signaling pathway and the cytokine-cytokine receptor interaction pathway. Machine learning identified CCL2 as a NETs-related liver fibrosis biomarker involved in ribosome-related processes, cell cycle regulation and allograft rejection pathways. Immune infiltration analysis showed significant differences in 22 immune cell subtypes between fibrotic and healthy samples, including neutrophils, which are mainly responsible for NET production. In vivo experiments showed that neutrophil infiltration, NETs accumulation and CCL2 levels were up-regulated during fibrosis. Based on CCL2, a total of 5 miRNAs, 2 lncRNAs, 20 function-related genes and 6 potential drugs were identified.

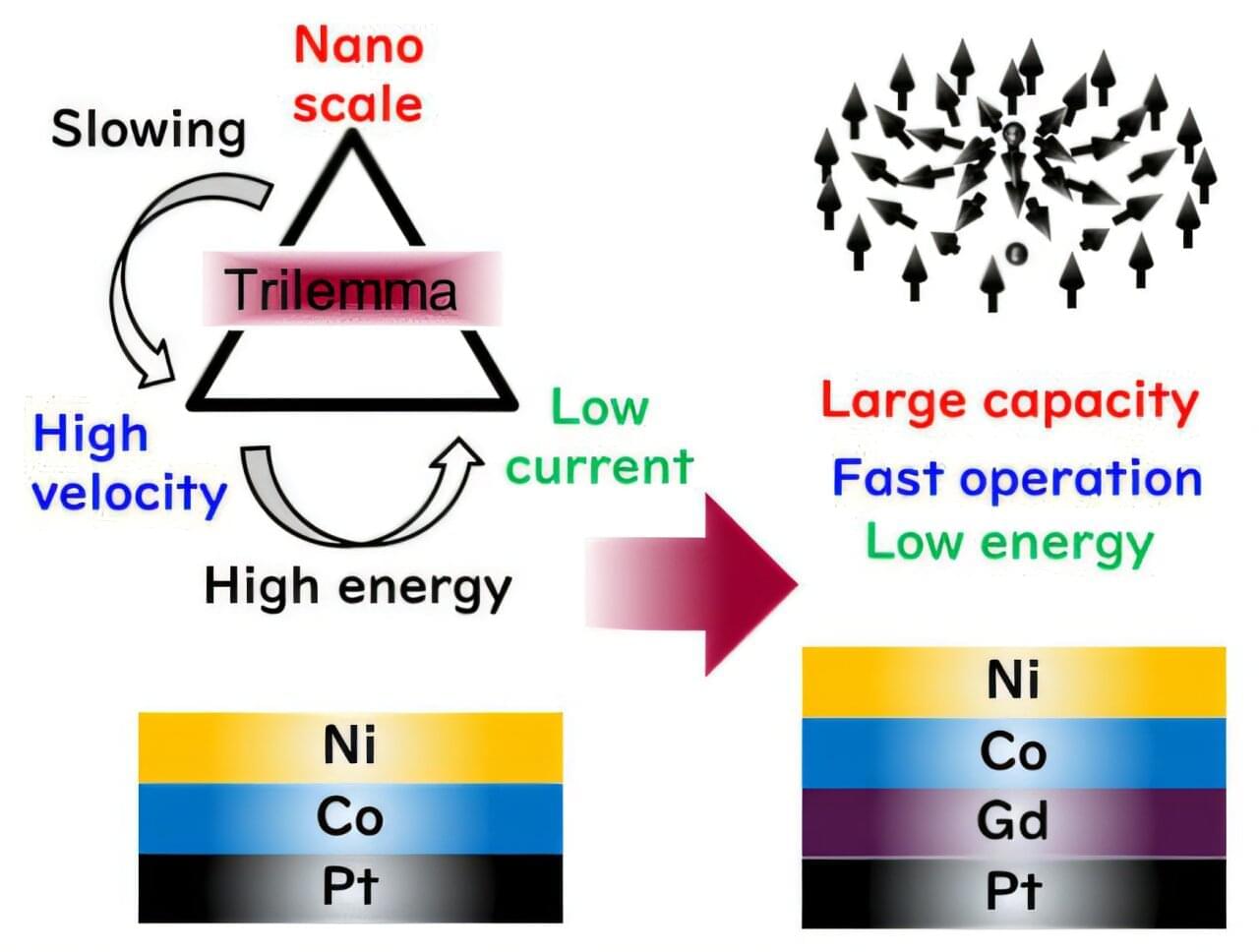

Researchers at Kyushu University have shown that careful engineering of materials interfaces can unlock new applications for nanoscale magnetic spins, overcoming the limits of conventional electronics. Their findings, published in APL Materials, open up a promising path for tackling a key challenge in the field and ushering in a new era of next-generation information devices.

The study centers on magnetic skyrmions, swirling nanoscale magnetic structures that behave like particles. Skyrmions possess three key features that make them useful as data carriers in information devices: nanoscale size for high capacity, compatibility with high-speed operation in the GHz range, and the ability to be moved around with very low electrical currents.

A skyrmion-based device could, in theory, surpass modern electronics in applications such as large-scale AI computing, the Internet of Things (IoT), and other big data applications.

Artificial intelligence (AI) is said to be a “black box,” with its logic obscured from human understanding—but how much does the average user actually care to know how AI works?

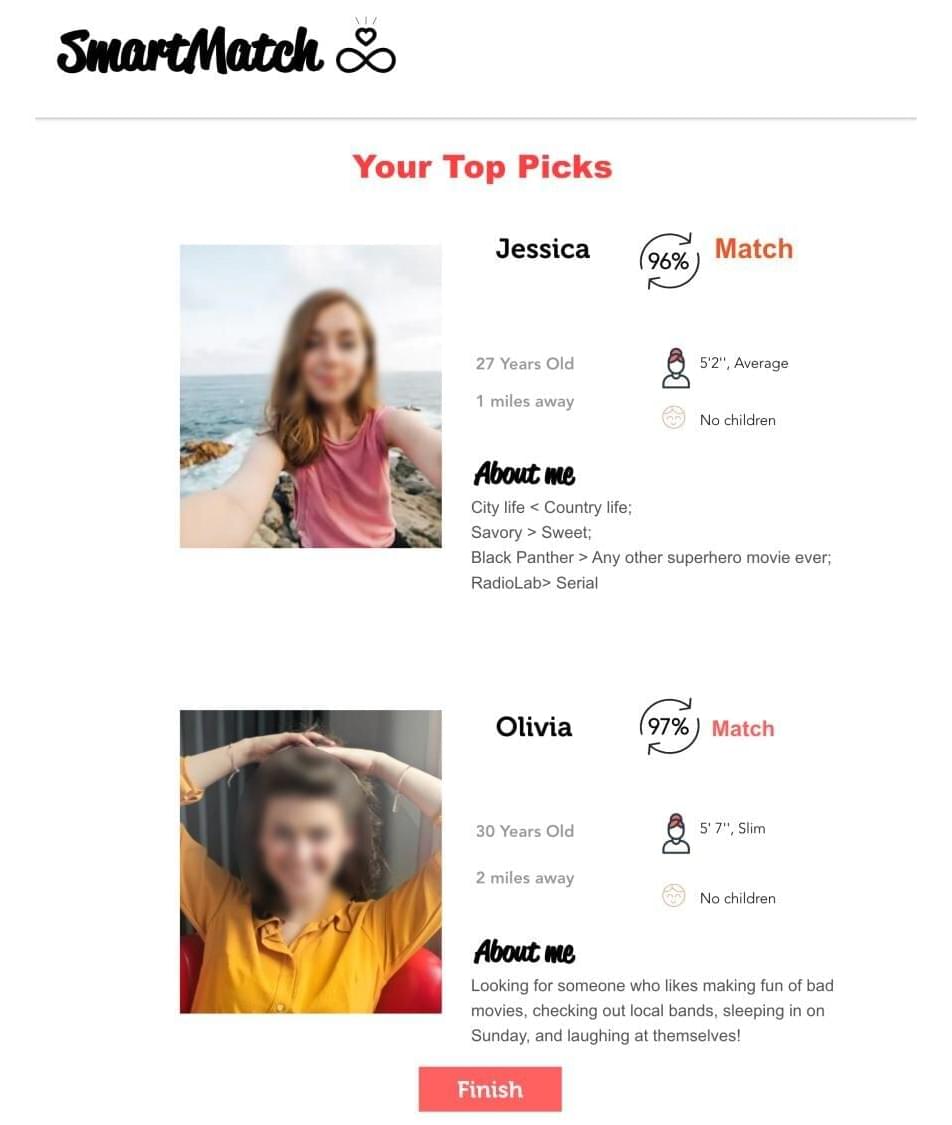

It depends on the extent to which a system meets users’ expectations, according to a new study by a team that includes Penn State researchers. Using a mock, algorithm-driven dating website, the team found that whether the system met, exceeded or fell short of user expectations directly corresponded to how much users trusted the AI and how much they wanted to know about how it worked.

The findings are published in the journal Computers in Human Behavior.

A collaborative team of four professors and several graduate students from the Departments of Chemistry and Biochemical Science and Technology at National Taiwan University, together with the Department of Applied Chemistry at National Chi Nan University, has achieved a long-sought breakthrough.

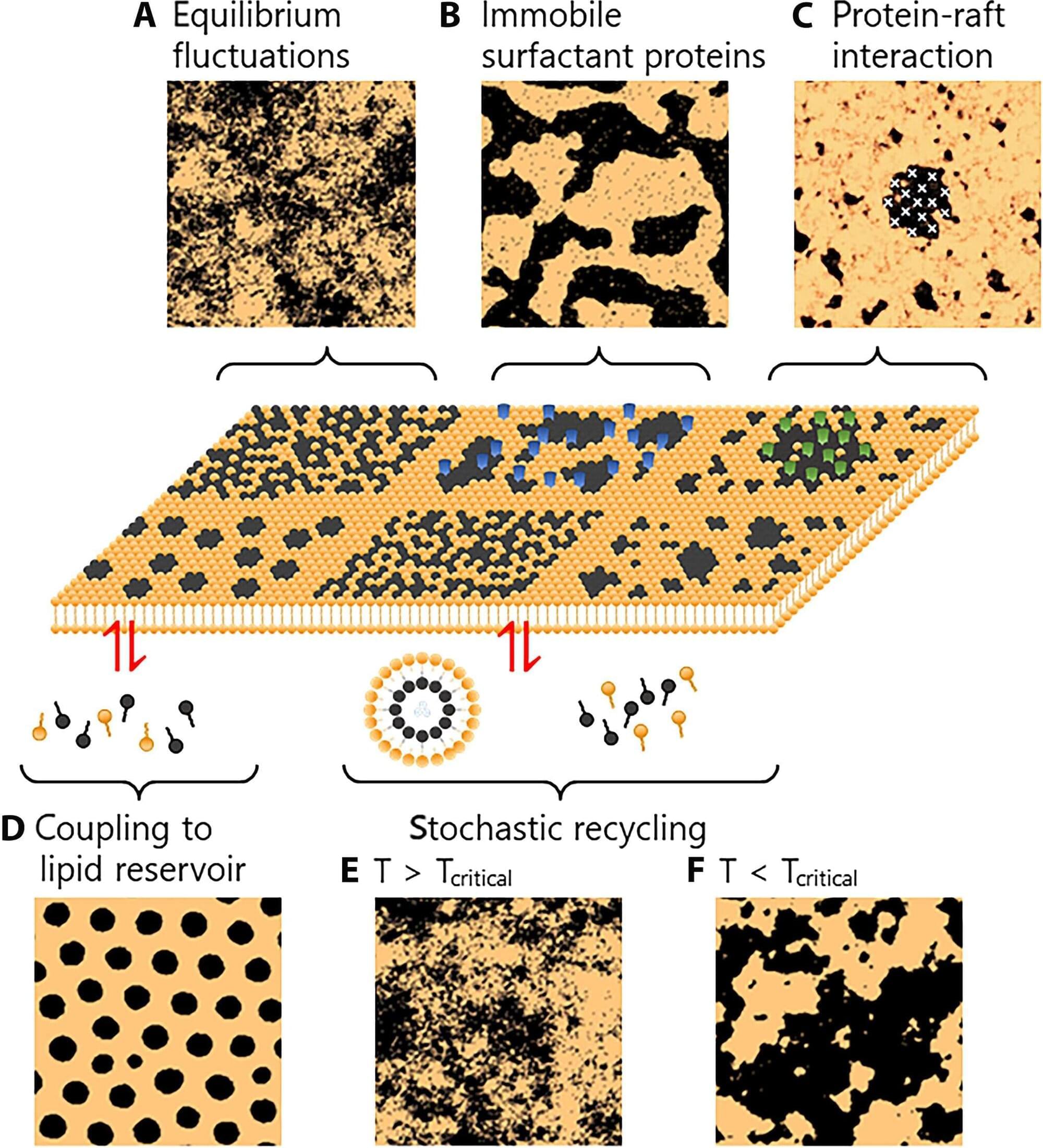

By combining atomic force microscopy (AFM) with a Hadamard product–based image reconstruction algorithm, the researchers successfully visualized, for the first time, the nanoscopic dynamics of membrane rafts in live cells—making visible what had long remained invisible on the cell membrane.
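The article does not explain how the reconstruction algorithm uses the Hadamard product, so the sketch below only illustrates the operation itself: the element-wise product of two equally sized matrices, (A ∘ B)[i][j] = A[i][j] · B[i][j]. It is not the researchers' method. The `hadamard` function, the toy height map and the mask are hypothetical, and multiplying by a binary mask is just one simple way the operation can isolate a region of an AFM-style image.

```python
def hadamard(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Element-wise (Hadamard) product of two equally shaped 2-D grids."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("grids must have the same shape")
    # Multiply matching entries: C[i][j] = A[i][j] * B[i][j]
    return [[x * y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


if __name__ == "__main__":
    # Hypothetical 3x3 height map (nm) and a binary mask selecting one region.
    height = [[1.0, 2.0, 1.5],
              [3.0, 4.0, 2.5],
              [1.0, 0.5, 2.0]]
    mask = [[0, 1, 0],
            [1, 1, 0],
            [0, 0, 0]]
    print(hadamard(height, mask))
```

Unlike a matrix product, the Hadamard product never mixes neighboring pixels. Each output value depends only on the two inputs at the same position, which is what makes it useful for per-pixel weighting.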

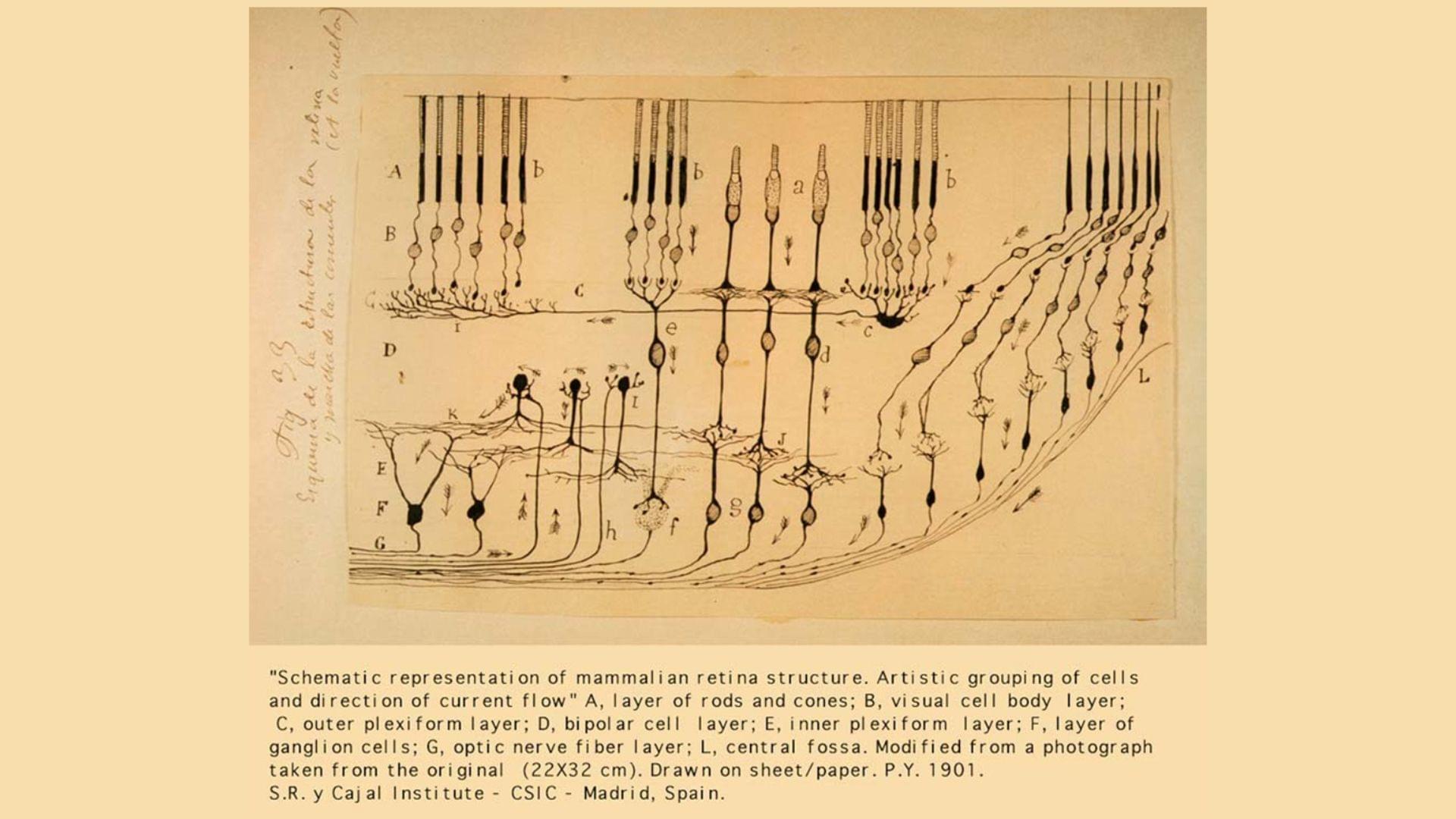

Membrane rafts are nanometer-scale structures rich in cholesterol and sphingolipids, believed to serve as vital platforms for cell signaling, viral entry, and cancer metastasis. Since the concept emerged in the 1990s, the existence and behavior of these lipid domains have been intensely debated.