By harnessing randomness, a new algorithm offers a fundamentally new, and faster, way to perform one of the most basic computations in math and computer science.

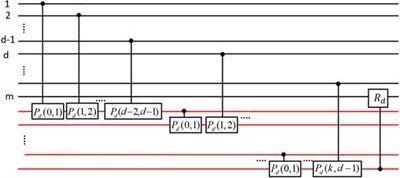

While we cannot efficiently emulate quantum algorithms on classical architectures, we can shift the burden of complexity from time to hardware resources. This paper proposes a universal, scalable quantum computer emulator in which FPGA hardware emulates the behavior of a real quantum system, allowing it to run quantum algorithms while preserving their natural time complexity. The article also presents the proposed emulator architecture, which exposes a standard programming interface, along with working results from an implementation of an example quantum algorithm.
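The abstract does not describe the emulator's internals. As a rough illustration of where the classical cost lies, here is a minimal state-vector sketch in Python. It is a generic simulation technique, not the paper's FPGA design, and the function names are hypothetical. The state of n qubits is stored as 2**n amplitudes, and a single-qubit gate updates 2**(n-1) amplitude pairs that are independent of one another. A CPU loop visits those pairs one after another; hardware that updates them in parallel is presumably the kind of time-for-resources trade-off the abstract refers to.

```python
import math

# Hadamard gate as a 2x2 nested list.
H = [[1 / math.sqrt(2), 1 / math.sqrt(2)],
     [1 / math.sqrt(2), -1 / math.sqrt(2)]]


def apply_single_qubit_gate(state, gate, target, n_qubits):
    """Apply a 2x2 gate to qubit `target` of an n-qubit state vector.

    `state` is a list of 2**n_qubits complex amplitudes. The loop updates
    2**(n_qubits - 1) amplitude pairs; each pair is independent of the
    others, so dedicated hardware could process them simultaneously,
    whereas this Python loop handles them one at a time.
    """
    new_state = list(state)
    stride = 1 << target
    for i in range(1 << n_qubits):
        if (i & stride) == 0:  # i has the target bit clear; j is its partner with the bit set
            j = i | stride
            a0, a1 = state[i], state[j]
            new_state[i] = gate[0][0] * a0 + gate[0][1] * a1
            new_state[j] = gate[1][0] * a0 + gate[1][1] * a1
    return new_state


def uniform_superposition(n_qubits):
    """Start in |0...0> and apply H to every qubit."""
    state = [0j] * (1 << n_qubits)
    state[0] = 1 + 0j
    for q in range(n_qubits):
        state = apply_single_qubit_gate(state, H, q, n_qubits)
    return state
```

For example, `uniform_superposition(3)` yields eight amplitudes, each equal to 1/√8 up to floating-point rounding. Every added qubit doubles both the memory and the number of pair updates per gate.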

Researchers in the UK have developed a way to coax microscopic particles and droplets into precise patterns by harnessing the power of sound in air. The implications for printing, especially in the fields of medicine and electronics, are far-reaching.

Scientists from the Universities of Bath and Bristol have shown that it is possible to create precise, predetermined patterns on surfaces from aerosol droplets or particles using computer-controlled ultrasound. A paper describing the entirely new technique, called ‘sonolithography’, has been published in Advanced Materials Technologies.

Professor Mike Fraser, from the Department of Computer Science at the University of Bath, explained: “The power of ultrasound has already been shown to levitate small particles. We are excited to have hugely expanded the range of applications by patterning dense clouds of material in air at scale and being able to algorithmically control how the material settles into shapes.”

The Facebook research builds on steady progress in tweaking deep learning algorithms to make them more efficient and effective. Self-supervised learning has previously been used to translate text from one language to another, but it has been harder to apply to images than to words. Yann LeCun, Facebook's chief AI scientist, says the research team developed a new way for algorithms to learn to recognize images even when part of an image has been altered.

Most image recognition algorithms require lots of labeled pictures. This new approach eliminates the need for most of the labeling.
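The blurb does not say how Facebook's system is trained, so what follows is only a generic, minimal sketch of the self-supervised idea. It assumes a contrastive, InfoNCE-style objective in the spirit of methods such as SimCLR, not whatever Facebook actually used. No labels appear anywhere. Each unlabeled image is altered twice by blanking a random patch, and the loss rewards an encoder whose embeddings of two altered views of the same image are more similar to each other than to views of other images. `alter`, `two_views`, `embed` and `info_nce` are hypothetical names, and `embed` is a toy stand-in for a neural network.

```python
import math
import random


def alter(image, rng, patch=2):
    """Return a copy of a 2-D image (list of rows) with one random square patch set to 0."""
    h, w = len(image), len(image[0])
    top = rng.randrange(h - patch + 1)
    left = rng.randrange(w - patch + 1)
    out = [row[:] for row in image]  # copy so the original image is untouched
    for r in range(top, top + patch):
        for c in range(left, left + patch):
            out[r][c] = 0.0
    return out


def two_views(image, rng):
    """Two independently altered versions of the same unlabeled image form a positive pair."""
    return alter(image, rng), alter(image, rng)


def embed(image):
    """Toy encoder (hypothetical): row and column means, scaled to unit length.

    A real system would learn a deep network here; the loss below is what
    would drive that learning.
    """
    rows = [sum(r) / len(r) for r in image]
    cols = [sum(c) / len(c) for c in zip(*image)]
    v = rows + cols
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def info_nce(anchors, positives, temperature=0.1):
    """Contrastive loss: anchor i should match positives[i] better than any other positive.

    For each anchor, computes -log softmax(similarities)[i] and averages the result.
    """
    total = 0.0
    for i, a in enumerate(anchors):
        logits = [sum(x * y for x, y in zip(a, p)) / temperature for p in positives]
        m = max(logits)  # subtract the max for numerical stability
        log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
        total += log_sum_exp - logits[i]
    return total / len(anchors)
```

In a training loop, one would embed both views of each image in a batch and minimize `info_nce` over the encoder's parameters. Because the target is simply "the other view of the same picture", no human labeling is required.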

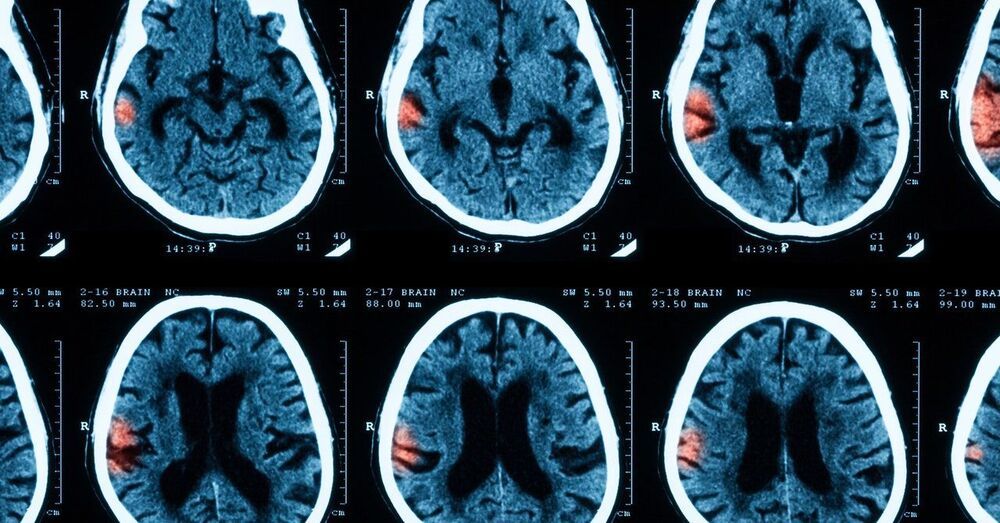

John Torday, PhD, is an Investigator at The Lundquist Institute for Biomedical Innovation; a Professor of Pediatrics and Obstetrics/Gynecology, and Faculty in Evolutionary Medicine, at the David Geffen School of Medicine at UCLA; and Director of the Perinatal Research Training Program and the Guenther Laboratory for Cell-Molecular Biology, and Faculty in the Division of Neonatology, at Harbor-UCLA Medical Center.

Dr. Torday studies the cellular-molecular development of the lung and other visceral organs. Using the well-established principles of cell-cell communication as the basis for determining the patterns of physiologic development, his laboratory was the first to determine the complete repertoire of lung alveolar morphogenesis. This highly regulated structure offered the opportunity to trace the evolution of the lung forward from its unicellular origins, both developmentally and phylogenetically. The lung, in turn, serves as an algorithm for understanding the evolution of other physiologic properties, such as those of the kidney, skin, liver, gut, and central nervous system. Such basic knowledge of the how and why of physiologic evolution is useful for the effective diagnosis and treatment of disease.

Dr. Torday received his undergraduate degree in Biology and English from Boston University, and his MSc and PhD in Experimental Medicine from McGill University, Montreal, Canada. He completed a postdoctoral fellowship in Reproductive Endocrinology at the University of Wisconsin-Madison, WI.

Dr. Torday’s research has led to the publication of more than 150 peer-reviewed articles and 350 abstracts. More recently, he has developed an interest in the evolutionary aspects of comparative physiology and development, leading to the publication of 12 peer-reviewed articles on the cellular origins of vertebrate physiology and culminating in the book *Evolutionary Biology, Cell-Cell Communication and Complex Disease*.

Dr. Torday is also the co-author or co-editor of several volumes, including *Evolution, the Logic of Biology*; *Evidence-Based Evolutionary Medicine*; *Morphogenesis, Environmental Stress and Reverse Evolution*; and, most recently, *The Singularity of Nature: A Convergence of Biology, Chemistry and Physics*.

A team of researchers at Uber AI Labs in San Francisco has developed a set of learning algorithms that proved better at playing classic video games than human players or other AI systems. In their paper, published in the journal Nature, the researchers explain how their algorithms differ from others and why they believe the algorithms could find applications in robotics, language processing and even the design of new drugs.


The Nobel-winning author talks about scaring Harold Pinter, life after death – and his new novel about an ‘artificial friend’

10 November 2020

A qudit is a multi-level computational unit that serves as an alternative to the conventional two-level qubit. Compared with a qubit, a qudit provides a larger state space for storing and processing information, and can thus reduce circuit complexity, simplify the experimental setup and improve algorithm efficiency. This review provides an overview of qudit-based quantum computing, covering topics ranging from circuit building and algorithm design to experimental methods. We first discuss qudit gate universality and a variety of qudit gates, including the π/8 gate, the SWAP gate, and the multi-level controlled gate. We then present qudit versions of several representative quantum algorithms, including the Deutsch-Jozsa algorithm, the quantum Fourier transform, and the phase estimation algorithm.
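The review's own gate definitions are not reproduced here. As a hedged illustration of the "larger state space" claim and of how familiar gates generalize, the sketch below assumes the standard generalized Pauli gates for a qudit of dimension d. These are the shift gate X|k> = |k+1 mod d> and the clock gate Z|k> = ω^k|k>, with ω = e^(2πi/d). The sketch also builds the two-qudit SWAP gate as a permutation of the d² basis states. Function names are hypothetical, and the π/8 and multi-level controlled gates are omitted.

```python
import cmath


def state_space_size(d, n):
    """Number of basis states spanned by n qudits of dimension d."""
    return d ** n


def shift(d):
    """Generalized Pauli X: maps basis state |k> to |k+1 mod d>."""
    return [[1 if row == (col + 1) % d else 0 for col in range(d)] for row in range(d)]


def clock(d):
    """Generalized Pauli Z: multiplies |k> by omega**k, with omega = exp(2*pi*i/d)."""
    omega = cmath.exp(2j * cmath.pi / d)
    return [[omega ** row if row == col else 0 for col in range(d)] for row in range(d)]


def qudit_swap(d):
    """Two-qudit SWAP: |a, b> -> |b, a>, with |a, b> stored at index a*d + b."""
    size = d * d
    m = [[0] * size for _ in range(size)]
    for a in range(d):
        for b in range(d):
            m[b * d + a][a * d + b] = 1
    return m


def matmul(x, y):
    """Plain matrix product of two square matrices given as nested lists."""
    n = len(x)
    return [[sum(x[i][k] * y[k][j] for k in range(n)) for j in range(n)] for i in range(n)]
```

For d = 3, two qutrits span `state_space_size(3, 2)` = 9 basis states, already more than the 8 spanned by three qubits. The clock and shift gates satisfy Z·X = ω·X·Z, the qudit analogue of the qubit relation ZX = -XZ (for d = 2, ω = -1).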