SETI, the search for extraterrestrial intelligence, is deploying machine-learning algorithms that filter out Earthly interference and spot signals humans might miss.

Welcome back to Future Fuse. Technology today is evolving at a rapid pace, enabling faster change and progress and accelerating the rate of change itself. However, it is not only technology trends and emerging technologies that are evolving; a lot more has changed this year due to the outbreak of COVID-19, which made IT professionals realize that their role will not stay the same in tomorrow's contactless world. An IT professional in 2023–24 will constantly be learning, unlearning, and relearning (out of necessity if not desire). Artificial intelligence will become more prevalent in 2023 as natural language processing and machine learning advance. With these technologies, artificial intelligence can better understand us and perform more complex tasks. It is estimated that 5G will revolutionize the way we live and work. From the evolution of artificial intelligence (AI), the internet of things (IoT), and 5G networks to cloud computing, big data, and analytics, technology has the potential to transform everything. Already, we see the rapid roll-out of autonomous vehicles (self-driving cars), currently in trial phases at car companies, and Elon Musk’s Tesla is improving the technology by making it more secure and refined. Forward-thinking, innovative companies seem not to miss any chance to bring breakthrough innovation to the world. In this video, we look at “The World Will Be REVOLUTIONIZED by These 18 Rapidly Developing Technologies.”

TAGS: #ai #technologygyan #futureTechnology.


Sponsor: AG1, The nutritional drink I’m taking for energy and mental focus. Tap this link to get a year’s supply of immune-supporting vitamin D3-K2 & 5 travel packs FREE with your first order: https://www.athleticgreens.com/arvinash.

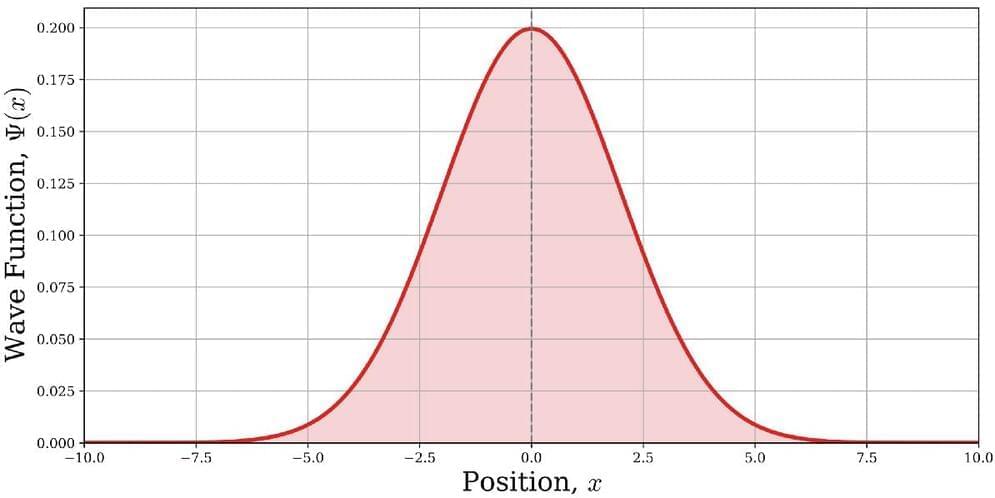

Science Asylum video on Schrodinger Equation:

JOIN our PATREON (Members help pay for the animations. THANK YOU so much!):

https://www.patreon.com/arvinash.

RECOMMENDED READING:

Schwartz, “Quantum Field Theory and the Standard model” https://amzn.to/3HmWdYt.

CHAPTERS:

0:00 The most important motion in the universe.

1:08 How to get energy and mental focus.

2:20 A spring: Classical simple harmonic oscillator.

4:48 QUANTUM Harmonic oscillator.

6:00 Science Asylum — what is the Schrodinger equation?

7:30 Quantum Field Theory (QFT) uses spring math!

10:00 Intuitive description of what’s going on!

12:37 What is really oscillating in QFT?

SUMMARY:

Renowned physicist Neil Turok, holder of the Higgs Chair of Theoretical Physics at the University of Edinburgh, joins me to discuss the state of science and the universe. Is physics in trouble? What hope is there to return to more productive and simple theories? What is Peter Higgs up to?

Neil Turok has been director emeritus of the Perimeter Institute for Theoretical Physics since 2019. He specializes in mathematical physics and early-universe physics, including the cosmological constant and a cyclic model for the universe.

He has written several books including Endless Universe: Beyond the Big Bang and The Universe Within: From Quantum to Cosmos.

00:00:00 Intro.

00:03:28 What is the meaning of Neil’s book cover?

00:06:46 The Nature of the Endless Universe.

00:14:31 What would happen to James Clerk Maxwell and Michael Faraday on Twitter?

00:16:10 What’s wrong with physics today?

00:20:06 How did Neil’s life change after his theory was proven wrong?

00:23:28 Neil shows us fundamental laws of the Universe in equations.

00:33:59 How well do our modern equations satisfy the conditions of the observable Universe?

00:56:29 How is the Universe simple?

01:20:01 Can Neil’s model explain flatness without inflation?

01:54:54 Existential Questions on the meaning of life, advice to his former self, and things he’s changed his mind on.

Join this channel to get access to perks:

https://www.youtube.com/channel/UCmXH_moPhfkqCk6S3b9RWuw/join.


Social media company and Snapchat maker Snap has for years defined itself as a “camera company,” despite its failures to turn its photo-and-video recording glasses known as Spectacles into a mass-market product and, more recently, its decision to kill off its camera-equipped drone. But that hasn’t stopped the company from envisioning a future where AR glasses are a commonly used device, and one, as the company revealed on Tuesday’s fourth-quarter earnings call, that will eventually be powered by AI technology.

Investors wanted to get a sense of how Snap was thinking about the latest developments in AI, particularly in buzzy areas like generative AI, which has benefited from advances in algorithms, language models, and the increased processing power available to run the necessary calculations. One pointed to the AI image generator Midjourney’s bot for Discord as an example of how AI could lead to increased user engagement within an app.

Snap CEO Evan Spiegel agreed that, in the near term, there were a lot of opportunities to use generative AI to make Snap’s camera more powerful. However, he noted that further down the road, AI would be critical to the growth of augmented reality, including AR glasses.

Is this the breakthrough the world has been waiting for from the Search for Extraterrestrial Intelligence Institute?

A scientist, Peter Ma, has applied machine learning and artificial intelligence to data collected by the Search for Extraterrestrial Intelligence (SETI) Institute, a press statement reveals.

Algorithm finds 8 promising signals that could be of alien origin.


Based on initial results, there is a slight chance the new method may have unearthed non-Earth-based “technosignatures”. That would mean it had achieved SETI’s goal of finding signs of extraterrestrial intelligence.

As neural networks become more powerful, algorithms have become capable of turning ordinary text into images, animations and even short videos. These algorithms have generated significant controversy. An AI-generated image recently won first prize in an annual art competition while the Getty Images stock photo library is currently taking legal action against the developers of an AI art algorithm that it believes was unlawfully trained using Getty’s images.

So the music equivalent of these systems shouldn’t come as much surprise. And yet the implications are extraordinary.

A group of researchers at Google have unveiled an AI system capable of turning ordinary text descriptions into rich, varied and relevant music. The company has showcased these capabilities using descriptions of famous artworks to generate music.

There are two aspects to a computer’s power: the number of operations its hardware can execute per second and the efficiency of the algorithms it runs. The hardware speed is limited by the laws of physics. Algorithms, which are basically sets of instructions, are written by humans and translated into a sequence of operations that computer hardware can execute. Even if a computer’s speed could reach the physical limit, computational hurdles would remain due to the limits of algorithms.

These hurdles include problems that are impossible for computers to solve and problems that are theoretically solvable but in practice are beyond the capabilities of even the most powerful imaginable versions of today’s computers. Mathematicians and computer scientists attempt to determine whether a problem is solvable by trying it out on an imaginary machine.
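As a rough illustration of a problem that is solvable in theory but out of reach in practice, the sketch below estimates how long a brute-force search over every ordering of a list of items would take. It is not taken from the article: the hardware speed of 10^18 operations per second, the cost of one operation per ordering, and the function name `brute_force_years` are all assumptions chosen for illustration.

```python
import math

# Assumed, illustrative hardware speed: 10**18 simple operations per second.
OPS_PER_SECOND = 10**18
SECONDS_PER_YEAR = 365 * 24 * 3600


def brute_force_years(n_items: int) -> float:
    """Years needed to try all n! orderings of n_items at the assumed speed.

    Assumes, optimistically, that checking one ordering costs one operation.
    """
    orderings = math.factorial(n_items)
    return orderings / OPS_PER_SECOND / SECONDS_PER_YEAR


if __name__ == "__main__":
    for n in (10, 20, 30):
        print(f"{n} items: about {brute_force_years(n):.3g} years")
```

Under these assumptions, going from 20 to 30 items moves the search from a matter of seconds to millions of years, which is why faster hardware alone does not remove the hurdle when the algorithm's cost grows this quickly.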

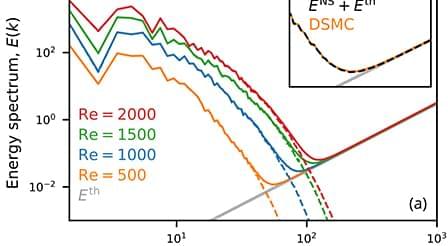

The dynamics of electrons subjected to voltage pulses in a thin semiconductor layer are investigated using a kinetic approach based on the solution of the electron Boltzmann equation using particle-in-cell/Monte Carlo collision simulations. The results showed that, due to the fairly high plasma density, oscillations emerge from a highly nonlinear interaction between the space-charge field and the electrons. The voltage pulse excites electron waves with dynamics and phase-space trajectories that depend on the doping level. High-amplitude oscillations take place during the relaxation phase and are subsequently damped over time-scales in the range 100–400 fs and decrease with the doping level. The power spectra of these oscillations show a high-energy band and a low-energy peak that were attributed to bounded plasma resonances and to a sheath effect. The high-energy THz domain reduces to sharp and well-defined peaks for the high doping case. The radiative power that would be emitted by the thin semiconductor layer strongly depends on the competition between damping and radiative decay in the electron dynamics. Simulations showed that higher doping levels favor enhanced magnitude and much slower damping for the high-frequency current, which would strongly enhance the emitted level of THz radiation.
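For orientation, plasma resonances like those mentioned above are usually discussed relative to the textbook bulk plasma frequency. The expression below is that standard formula, not one quoted in the abstract. The symbols are introduced here for illustration: $n$ is the free-electron density set by the doping level, $m^*$ is the electron effective mass, $\varepsilon_r$ is the relative permittivity of the semiconductor, $e$ is the elementary charge, and $\varepsilon_0$ is the vacuum permittivity.

$$\omega_p = \sqrt{\frac{n e^2}{\varepsilon_0 \varepsilon_r m^*}}$$

Because $\omega_p \propto \sqrt{n}$, a higher doping level shifts this characteristic frequency upward. How the simulated spectra relate to it in detail is not stated in the abstract.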