Scientists and roboticists have long looked to nature for inspiration when developing new features for machines. In this case, researchers from Ecole Polytechnique Fédérale de Lausanne (EPFL) in Switzerland were inspired by bats and other animals that rely on echolocation. They designed a method that gives small robots the ability to navigate by themselves, one that doesn’t need expensive hardware or components too large or too heavy for tiny machines. In fact, according to PopSci, the team used only the integrated audio hardware of an interactive puck robot, and built an audio extension deck from a cheap mic and speakers for a tiny flying drone that can fit in the palm of your hand.

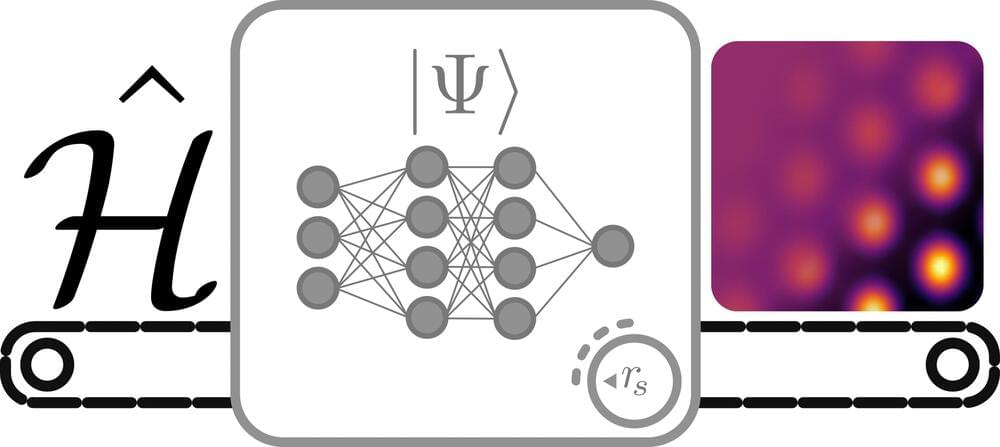

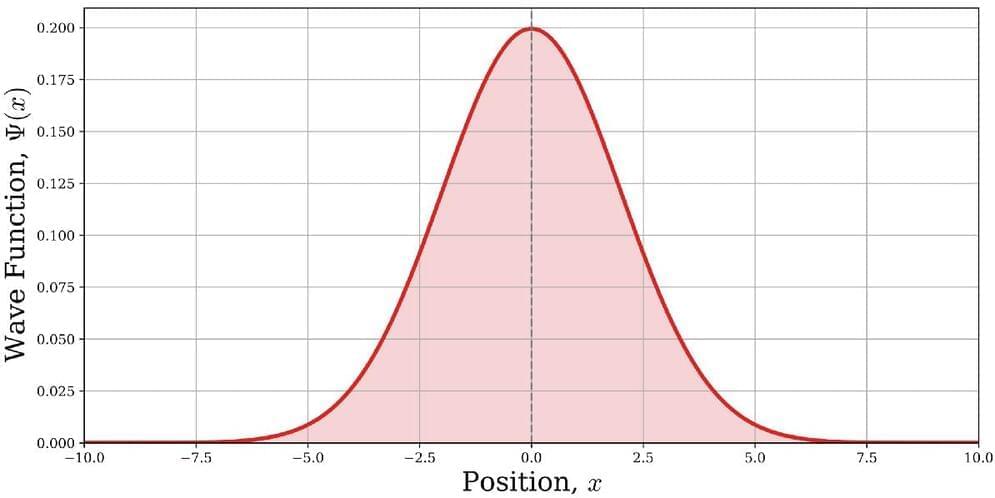

The system works just like bat echolocation. The robot emits sounds across a range of frequencies, and its microphone picks them up as they bounce off walls. An algorithm the team created then analyzes the sound waves to create a map with the room’s dimensions.
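To make the basic idea concrete, here is a minimal Python sketch of echo ranging. It is not the EPFL team's algorithm, which the article does not describe in detail. It swaps in a plain time-of-flight estimate instead: send a frequency sweep, find the delay at which the recording best matches it, and convert that delay into a distance. The function names, the sample rate, the sweep range and the simulated recording are all assumptions made for illustration, and the simulation ignores noise and the sound that travels directly from speaker to mic.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at roughly 20 °C (assumed)

def make_chirp(n_samples, sample_rate, f_start, f_end):
    """Linear frequency sweep from f_start to f_end, in Hz."""
    duration = n_samples / sample_rate
    rate = (f_end - f_start) / duration  # sweep rate in Hz per second
    times = (i / sample_rate for i in range(n_samples))
    return [math.sin(2 * math.pi * (f_start * t + 0.5 * rate * t * t))
            for t in times]

def estimate_delay(emitted, recorded):
    """Lag, in samples, at which the recording best matches the emitted sound."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(len(recorded) - len(emitted) + 1):
        score = sum(e * recorded[lag + i] for i, e in enumerate(emitted))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag

def wall_distance(delay_samples, sample_rate):
    """Halve the round-trip path: the sound travels to the wall and back."""
    return SPEED_OF_SOUND * (delay_samples / sample_rate) / 2.0

# Simulated recording: silence plus one attenuated echo (hypothetical data).
sample_rate = 44100
chirp = make_chirp(200, sample_rate, 2000.0, 8000.0)
true_delay = 257
recorded = [0.0] * 700
for i, sample in enumerate(chirp):
    recorded[true_delay + i] += 0.5 * sample

delay = estimate_delay(chirp, recorded)
distance = wall_distance(delay, sample_rate)
print(f"echo delay: {delay} samples, wall at about {distance:.2f} m")
```

A single delay like this gives only the distance to one surface. Building a map of a room, as the researchers describe, would require combining many such measurements taken from different positions.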

In a paper published in IEEE Robotics and Automation Letters, the researchers said existing “algorithms for active echolocation are less developed and often rely on hardware requirements that are out of reach for small robots.” They also said their “method is model-based, runs in real time and requires no prior calibration or training.” Their solution could give small machines the capability to be sent on search-and-rescue missions or to previously uncharted locations that bigger robots wouldn’t be able to reach. And since the system only needs onboard audio equipment or cheap additional hardware, it has a wide range of potential applications.