Researchers have characterized the thermodynamic properties of a model that uses cold atoms to simulate condensed-matter phenomena.

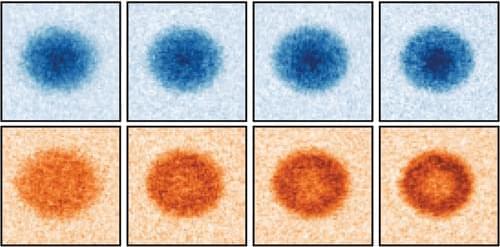

This feature distinguishes neuromorphic systems from conventional computing systems. The brain has evolved over billions of years to solve difficult engineering problems by using efficient, parallel, low-power computation. The goal of NE is to design systems capable of brain-like computation. Numerous large-scale neuromorphic projects have emerged recently. This interdisciplinary field was listed among the top 10 technology breakthroughs of 2014 by the MIT Technology Review and among the top 10 emerging technologies of 2015 by the World Economic Forum. NE has two goals: first, a scientific goal to understand the computational properties of biological neural systems by using models implemented in integrated circuits (ICs); second, an engineering goal to exploit the known properties of biological systems to design and implement efficient devices for engineering applications. Building hardware neural emulators can be extremely useful for simulating large-scale neural models to explain how intelligent behavior arises in the brain. The principal advantages of neuromorphic emulators are that they are highly energy efficient, parallel and distributed, and require a small silicon area. Thus, compared to conventional CPUs, these neuromorphic emulators are beneficial in many engineering applications, such as porting deep learning algorithms for various recognition tasks. In this review article, we describe some of the most significant neuromorphic spiking emulators, compare the different architectures and approaches used by them, illustrate their advantages and drawbacks, and highlight the capabilities that each can deliver to neural modelers. This article focuses on the discussion of large-scale emulators and is a continuation of a previous review of various neural and synapse circuits (Indiveri et al., 2011). We also explore applications where these emulators have been used and discuss some of their promising future applications.

“Building a vast digital simulation of the brain could transform neuroscience and medicine and reveal new ways of making more powerful computers” (Markram et al., 2011). The human brain is by far the most computationally complex, efficient, and robust computing system operating under low-power and small-size constraints. It utilizes over 100 billion neurons and 100 trillion synapses for achieving these specifications. Even the existing supercomputing platforms are unable to demonstrate full cortex simulation in real-time with the complex detailed neuron models. For example, for mouse-scale (2.5 × 10⁶ neurons) cortical simulations, a personal computer uses 40,000 times more power but runs 9,000 times slower than a mouse brain (Eliasmith et al., 2012). The simulation of a human-scale cortical model (2 × 10¹⁰ neurons), which is the goal of the Human Brain Project, is projected to require an exascale supercomputer (10¹⁸ flops) and as much power as a quarter-million households (0.5 GW).

The electronics industry is seeking solutions that will enable computers to handle the enormous increase in data processing requirements. Neuromorphic computing is an alternative solution that is inspired by the computational capabilities of the brain. The observation that the brain operates on analog principles of the physics of neural computation that are fundamentally different from digital principles in traditional computing has initiated investigations in the field of neuromorphic engineering (NE) (Mead, 1989a). Silicon neurons are hybrid analog/digital very-large-scale integrated (VLSI) circuits that emulate the electrophysiological behavior of real neurons and synapses. Neural networks using silicon neurons can be emulated directly in hardware rather than being limited to simulations on a general-purpose computer. Such hardware emulations are much more energy efficient than computer simulations, and thus suitable for real-time, large-scale neural emulations.

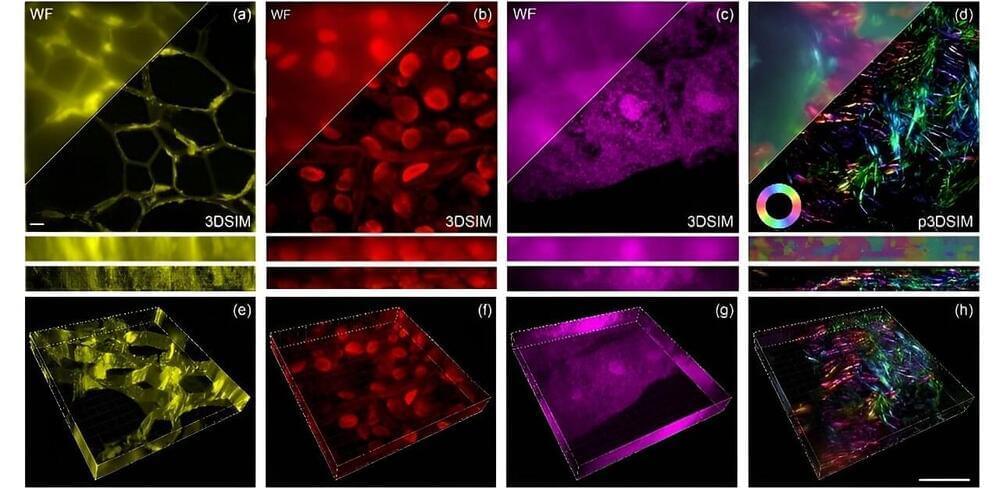

In the ever-evolving realm of microscopy, recent years have witnessed remarkable strides in both hardware and algorithms, propelling our ability to explore the infinitesimal wonders of life. However, the journey towards three-dimensional structured illumination microscopy (3DSIM) has been hampered by challenges arising from the speed and intricacy of polarization modulation.

Enter the high-speed modulation 3DSIM system “DMD-3DSIM,” combining digital display with super-resolution imaging, allowing scientists to see cellular structures in unprecedented detail.

As reported in Advanced Photonics Nexus, Professor Peng Xi’s team at Peking University developed this innovative setup around a digital micromirror device (DMD) and an electro-optic modulator (EOM). It tackles resolution challenges by significantly improving both lateral (side-to-side) and axial (top-to-bottom) resolution, for a 3D spatial resolution reportedly twice that achieved by traditional wide-field imaging techniques.

Chayka argues that cultivating our own personal taste is important, not because one form of culture is demonstrably better than another, but because that slow and deliberate process is part of how we develop our own identity and sense of self. Take that away, and you really do become the person the algorithm thinks you are.

As Chayka points out in Filterworld, algorithms “can feel like a force that only began to exist … in the era of social networks” when in fact they have “a history and legacy that has slowly formed over centuries, long before the Internet existed.” So how exactly did we arrive at this moment of algorithmic omnipresence? How did these recommendation machines come to dominate and shape nearly every aspect of our online and (increasingly) our offline lives? Even more important, how did we ourselves become the data that fuels them?

These are some of the questions Chris Wiggins and Matthew L. Jones set out to answer in How Data Happened: A History from the Age of Reason to the Age of Algorithms. Wiggins is a professor of applied mathematics and systems biology at Columbia University. He’s also the New York Times’ chief data scientist. Jones is now a professor of history at Princeton. Until recently, they both taught an undergrad course at Columbia, which served as the basis for the book.

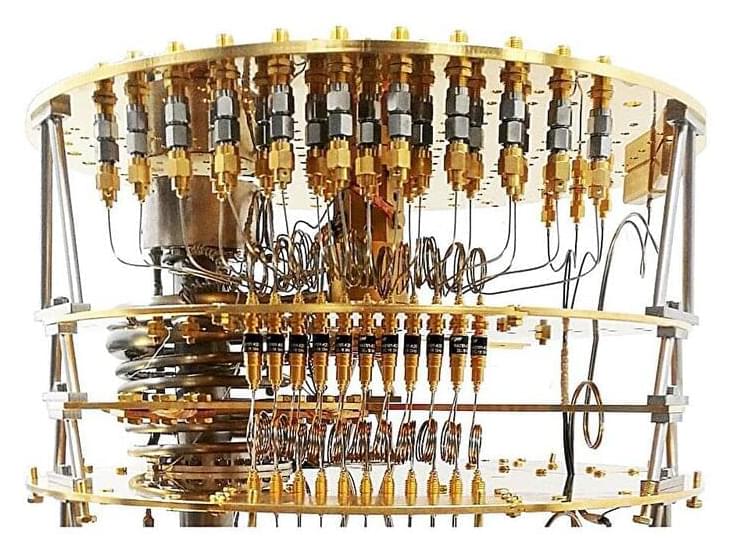

Physicists from Forschungszentrum Jülich and the Karlsruhe Institute of Technology have uncovered that Josephson tunnel junctions—the fundamental building blocks of superconducting quantum computers—are more complex than previously thought.

Just like overtones in a musical instrument, harmonics are superimposed on the fundamental mode. As a consequence, corrections may lead to quantum bits that are two to seven times more stable. The researchers support their findings with experimental evidence from multiple laboratories across the globe, including the University of Cologne, Ecole Normale Supérieure in Paris, and IBM Quantum in New York.

It all started in 2019, when Dr. Dennis Willsch and Dennis Rieger, two Ph.D. students from FZJ and KIT at the time and joint first authors of a new paper published in Nature Physics, were having a hard time understanding their experiments using the standard model for Josephson tunnel junctions. This model had won Brian Josephson the Nobel Prize in Physics in 1973.

Time’s inexorable march might well wait for no one, but a new experiment by researchers at the Technical University of Darmstadt in Germany and Roskilde University in Denmark shows how in some materials it might occasionally shuffle.

An investigation into the way substances like glass age has uncovered the first physical evidence of a material-based measure of time being reversible.

For the most part the laws of physics care little about time’s arrow. Flip an equation describing the movement of an object and you can easily calculate where it started. We describe such laws as time reversible.

Researchers at the University of Trento, Italy, have developed a novel approach for prime factorization via quantum annealing, leveraging a compact modular encoding paradigm and enabling the factorization of large numbers using D-Wave quantum devices.

Prime factorization is the procedure of breaking down a number into its prime components. Every integer greater than one can be uniquely expressed as a product of prime numbers.
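To make the definition concrete, here is a minimal sketch of prime factorization by classical trial division. It is not the quantum-annealing method developed by the Trento researchers; the function name `prime_factors` is chosen here purely for illustration.

```python
def prime_factors(n: int) -> list[int]:
    """Return the prime factors of n in ascending order, with multiplicity.

    Classical trial division, shown only to illustrate the definition.
    This is NOT the quantum-annealing approach described in the article.
    """
    if n < 2:
        raise ValueError("n must be an integer greater than one")
    factors = []
    d = 2
    # Any composite n has a prime factor no larger than sqrt(n).
    while d * d <= n:
        while n % d == 0:
            factors.append(d)
            n //= d
        d += 1
    # Whatever remains above 1 is itself prime.
    if n > 1:
        factors.append(n)
    return factors


print(prime_factors(60))    # 60 = 2 * 2 * 3 * 5
print(prime_factors(3233))  # a toy RSA-style modulus: 53 * 61
```

Trial division takes time that grows roughly with the square root of the number, which is why factoring the very large semiprimes used in practice is considered hard on classical hardware.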

In cryptography, prime factorization holds particular importance due to its relevance to the security of encryption algorithms, such as the widely used RSA cryptosystem.

The technology can reconstruct a hidden scene in just minutes using advanced mathematical algorithms.

Potential use case scenarios

Law enforcement agencies could use the technology to gather critical information about a crime scene without disturbing the evidence. This could be especially useful in cases where the scene is dangerous or difficult to access. For example, the technology could be used to reconstruct the scene of a shooting or a hostage situation from a safe distance.

The technology could also have applications in the entertainment industry. For instance, it could create immersive gaming experiences that allow players to explore virtual environments in 3D. It could also be used in the film industry to create more realistic special effects.

W/ Andrej Karpathy

The Tokenizer is a necessary and pervasive component of Large Language Models (LLMs), where it translates between strings and tokens (text chunks). Tokenizers are a completely separate stage of the LLM pipeline: they have their own training sets, training algorithms (Byte Pair Encoding), and after training implement two fundamental functions: encode() from strings to tokens, and decode() back from tokens to strings. In this lecture we build from scratch the Tokenizer used in the GPT series from OpenAI. In the process, we will see that a lot of weird behaviors and problems of LLMs actually trace back to tokenization. We’ll go through a number of these issues, discuss why tokenization is at fault, and why someone out there ideally finds a way to delete this stage entirely.
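As a rough illustration of the train/encode/decode split described above, here is a minimal byte-level Byte Pair Encoding sketch. It is not the code from the lecture or from minbpe. The function names (`get_pair_counts`, `merge`, `train`, `encode`, `decode`) are chosen here for illustration, and it omits the regex pre-splitting and special tokens that the GPT tokenizers use.

```python
from collections import Counter


def get_pair_counts(ids):
    """Count how often each adjacent pair of token ids occurs."""
    return Counter(zip(ids, ids[1:]))


def merge(ids, pair, new_id):
    """Replace each non-overlapping occurrence of `pair` with `new_id`."""
    out, i = [], 0
    while i < len(ids):
        if i + 1 < len(ids) and (ids[i], ids[i + 1]) == pair:
            out.append(new_id)
            i += 2
        else:
            out.append(ids[i])
            i += 1
    return out


def train(text, num_merges):
    """Learn up to `num_merges` merges, starting from raw UTF-8 bytes (ids 0..255)."""
    ids = list(text.encode("utf-8"))
    merges = {}  # (id, id) -> new id, kept in the order the merges were learned
    for step in range(num_merges):
        counts = get_pair_counts(ids)
        if not counts:
            break  # fewer than two tokens left, nothing to merge
        pair = max(counts, key=counts.get)  # most frequent adjacent pair
        new_id = 256 + step
        ids = merge(ids, pair, new_id)
        merges[pair] = new_id
    return merges


def encode(text, merges):
    """String -> token ids, replaying the merges in the order they were learned."""
    ids = list(text.encode("utf-8"))
    for pair, new_id in merges.items():
        ids = merge(ids, pair, new_id)
    return ids


def decode(ids, merges):
    """Token ids -> string, by expanding every id back into its bytes."""
    vocab = {i: bytes([i]) for i in range(256)}
    for (a, b), new_id in merges.items():
        vocab[new_id] = vocab[a] + vocab[b]
    return b"".join(vocab[i] for i in ids).decode("utf-8", errors="replace")


text = "aaabdaaabac"
merges = train(text, num_merges=3)
ids = encode(text, merges)
print(len(text.encode("utf-8")), "bytes ->", len(ids), "tokens")
print(decode(ids, merges) == text)
```

Because the base vocabulary is the 256 possible byte values, any string can be encoded, even when it contains characters never seen during training. Unseen text simply compresses less.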

Chapters:

00:00:00 intro: Tokenization, GPT-2 paper, tokenization-related issues.

00:05:50 tokenization by example in a Web UI (tiktokenizer)

00:14:56 strings in Python, Unicode code points.

00:18:15 Unicode byte encodings, ASCII, UTF-8, UTF-16, UTF-32

00:22:47 daydreaming: deleting tokenization.

00:23:50 Byte Pair Encoding (BPE) algorithm walkthrough.

00:27:02 starting the implementation.

00:28:35 counting consecutive pairs, finding most common pair.

00:30:36 merging the most common pair.

00:34:58 training the tokenizer: adding the while loop, compression ratio.

00:39:20 tokenizer/LLM diagram: it is a completely separate stage.

00:42:47 decoding tokens to strings.

00:48:21 encoding strings to tokens.

00:57:36 regex patterns to force splits across categories.

01:11:38 tiktoken library intro, differences between GPT-2/GPT-4 regex.

01:14:59 GPT-2 encoder.py released by OpenAI walkthrough.

01:18:26 special tokens, tiktoken handling of, GPT-2/GPT-4 differences.

01:25:28 minbpe exercise time! write your own GPT-4 tokenizer.

01:28:42 sentencepiece library intro, used to train Llama 2 vocabulary.

01:43:27 how to set vocabulary size? revisiting gpt.py transformer.

01:48:11 training new tokens, example of prompt compression.

01:49:58 multimodal [image, video, audio] tokenization with vector quantization.

01:51:41 revisiting and explaining the quirks of LLM tokenization.

02:10:20 final recommendations.

02:12:50 ??? :)

Exercises:

- Advised flow: reference this document and try to implement the steps before I give away the partial solutions in the video. If you get stuck, the full solutions are in the minbpe code https://github.com/karpathy/minbpe/bl…

Links: