Researchers based at the Drexel University College of Engineering have devised a new method for performing structural safety inspections using autonomous robots aided by machine learning technology.

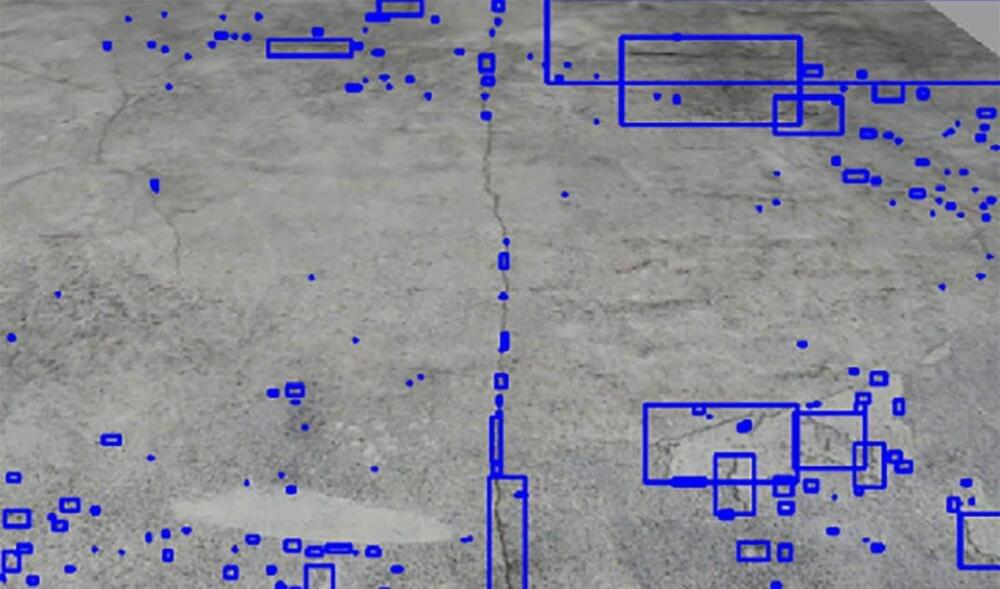

Their recent article in the Elsevier journal Automation in Construction presents a multi-scale monitoring system in which deep-learning algorithms first locate cracks and other damage to buildings, and LiDAR then produces three-dimensional images that inspectors can use in their documentation.

The development could ease the enormous task of maintaining the health of structures that are increasingly being reused or restored in cities large and small across the country. Despite the relative age of America’s built environment, roughly two-thirds of today’s buildings will still be in use in 2050, according to Gensler’s predictions.