“A phonon represents the collective motion of an astronomical number of atoms,” Cleland says. “And they all have to work together in order to obey quantum mechanics. There was this question in the back of my mind, will this really work? We tried it, and it’s kind of amazing, but it really does work.”

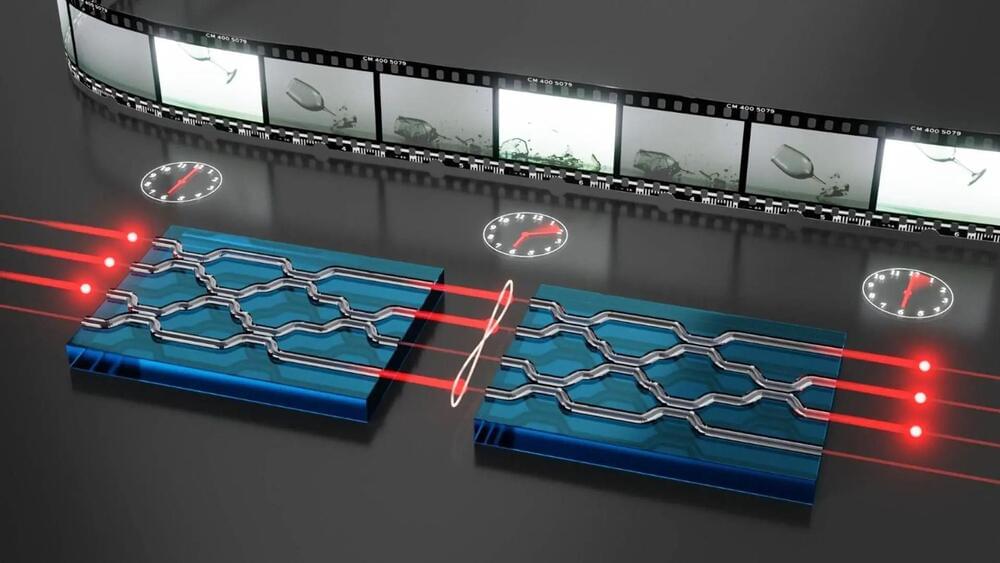

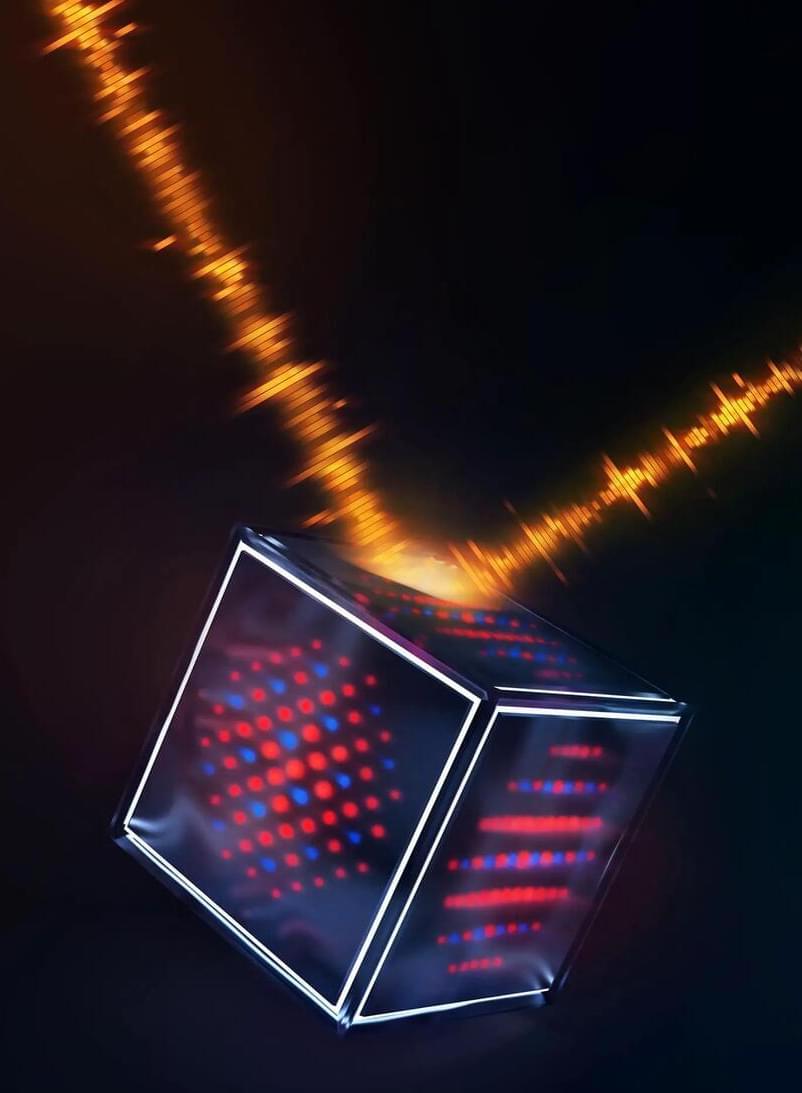

Splitting a phonon

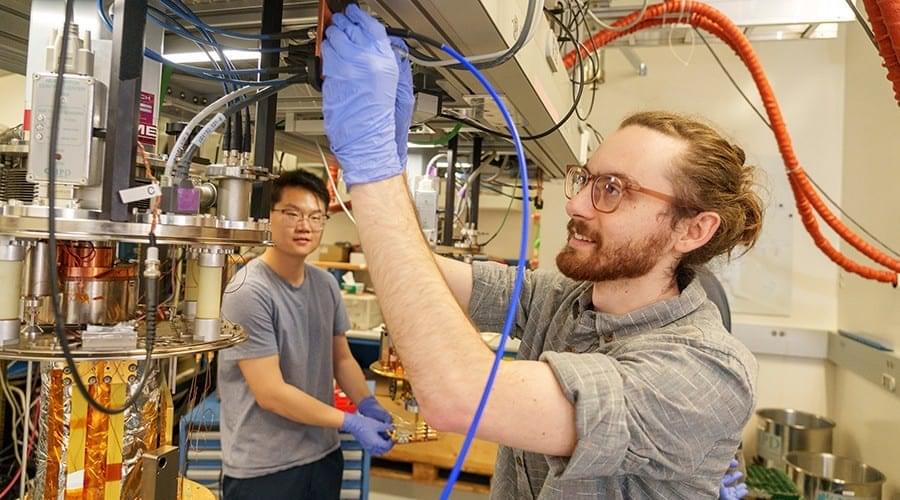

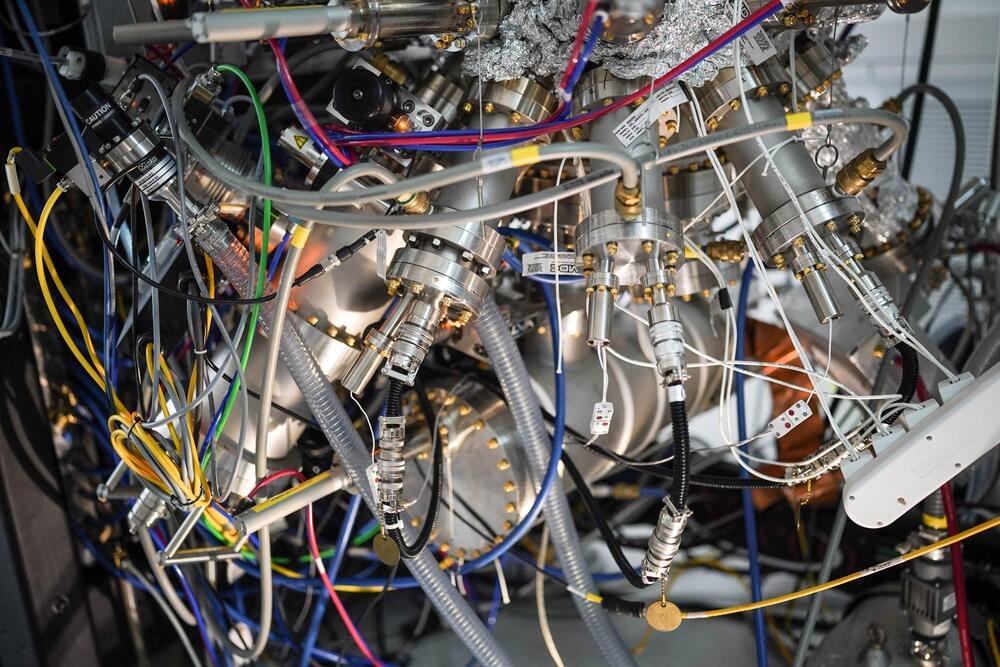

The team created single phonons as propagating wavepackets on the surface of a lithium niobate chip. The phonons were created and detected using two superconducting qubits, which sat on a separate chip and were coupled to the lithium niobate chip through the air. The two qubits were located on either side of the chip, with a two-millimetre-long channel between them hosting the travelling phonons.