accuracy and scale, brings scientists closer to understanding how neurons connect and communicate.

Mapping Thousands of Synaptic Connections

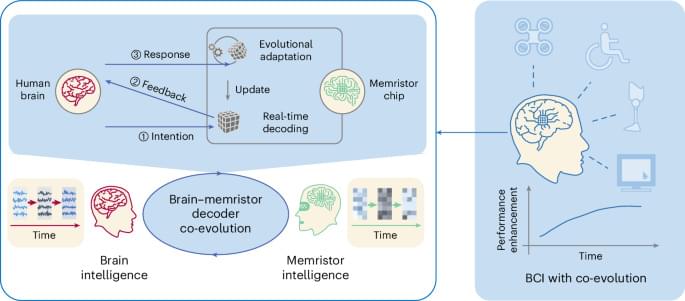

Harvard researchers have successfully mapped and cataloged over 70,000 synaptic connections from approximately 2,000 rat neurons. They achieved this using a silicon chip capable of detecting small but significant synaptic signals from a large number of neurons simultaneously.