“Lego block” artificial cells that can kill bacteria have been created by researchers at the University of California, Davis Department of Biomedical Engineering. The work was reported Aug. 29 in the journal ACS Applied Materials & Interfaces.

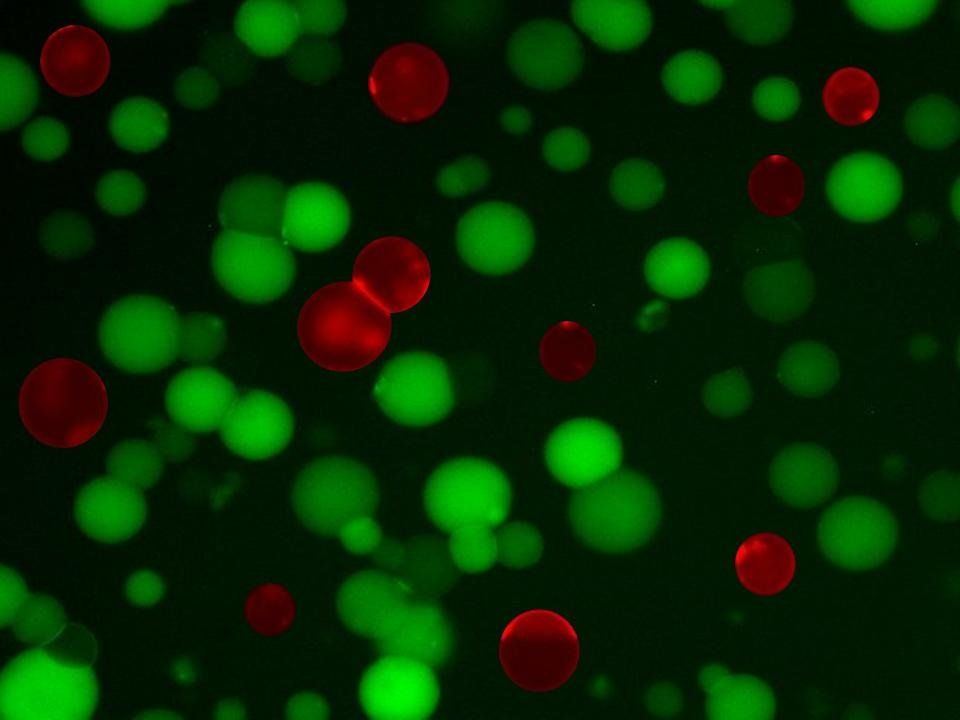

“We engineered artificial cells from the bottom-up – like Lego blocks – to destroy bacteria,” said Assistant Professor Cheemeng Tan, who led the work. The cells are built from liposomes (bubbles enclosed by a cell-like lipid membrane) and from purified cellular components, including proteins, DNA and metabolites.

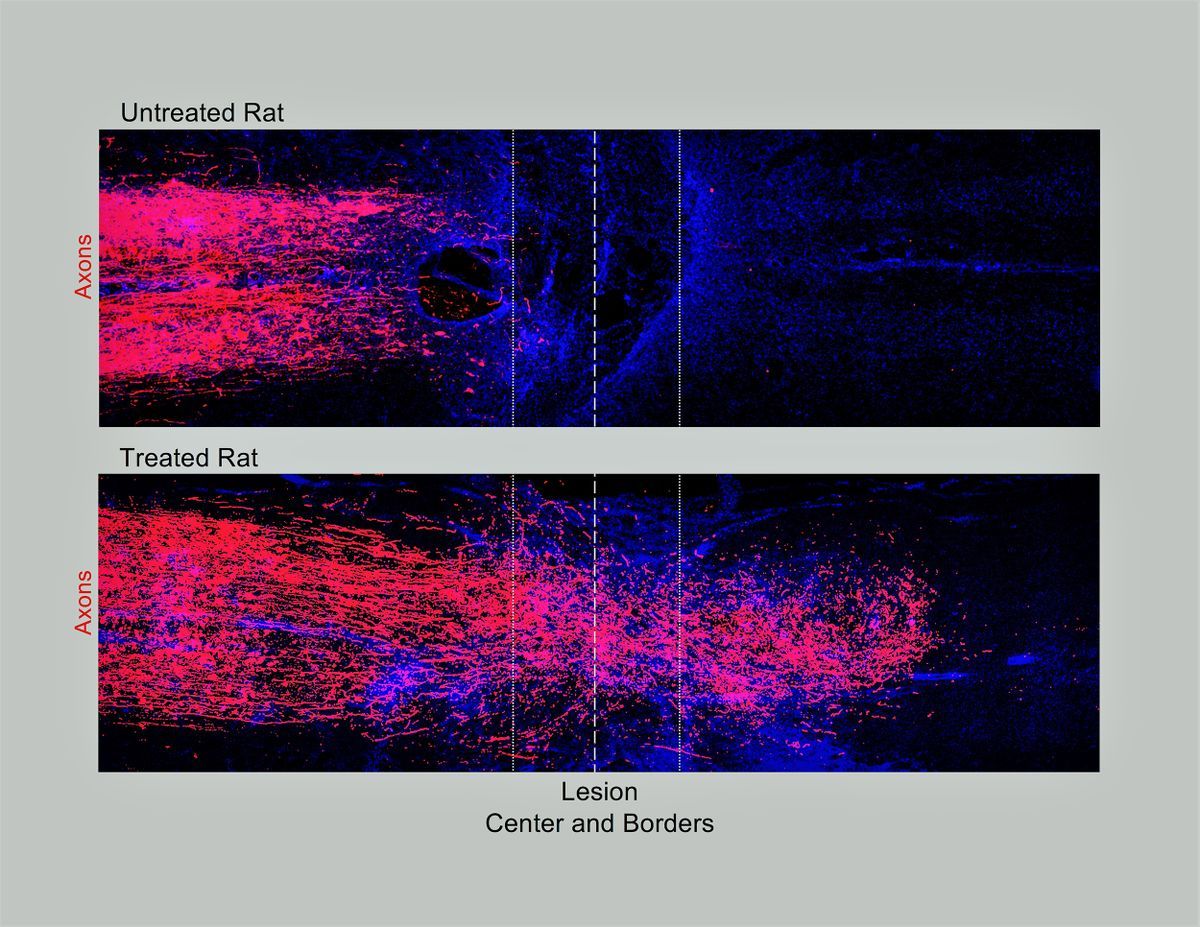

“We demonstrated that artificial cells can sense, react and interact with bacteria, as well as function as systems that both detect and kill bacteria with little dependence on their environment,” Tan said.