At the moment I think Meta VR gets laughed at, but this is a good explanation.

Clip from The Joe Rogan Experience #1863 with Mark Zuckerberg.

August 25th 2022

#JRE #podcast #joerogan #comedy #clip #facebook #AR #markzuckerberg

As the world increasingly embraces Web3, corporations are turning to metaverse applications to stay ahead of the curve. According to Verified Market Research, the Metaverse market was valued at USD 27.21 billion in 2020 and is projected to reach USD 824.53 billion by 2030, growing at a CAGR of 39.1 percent from 2022 to 2030. This growth is attributed to the increasing demand for AR/VR content and gaming and the need for more realistic and interactive training simulations.

These startups show Proof of Concept with a working product and clear value proposition for businesses and consumers.

● Launch a corporate accelerator: Another way to increase your exposure to the Metaverse is to launch a corporate accelerator. This will give you access to a broader range of startups and help you build a more diverse portfolio. In addition, it will allow you to offer mentorship and resources to the startups you invest in.

● Develop a clear investment strategy: It is also important to develop a clear investment strategy. This means knowing what industries you want to be involved in and what types of companies you want to invest in. A clear strategy helps you decide which startups to invest in and how best to support them. For example, if your company is in the automotive industry, you may want to invest in startups working on new transportation technologies or developing new ways to use data from connected vehicles.

The LG Group has taken a leading role in investing in the Metaverse and is well-positioned to capitalize on the shift to this new paradigm. However, other enterprises need to take note of the company’s success and learn from its example. By following the steps outlined above, enterprises can increase their chances of success in the Metaverse and position themselves as leaders in this emerging market.

Artificial intelligence (AI) systems must understand visual scenes in three dimensions to interpret the world around us. For that reason, images play an essential role in computer vision, significantly affecting quality and performance. Unlike the widely available 2D data, 3D data is rich in scale and geometry information, providing an opportunity for a better machine-environment understanding.

Data-driven 3D modeling, or 3D reconstruction, is a growing computer vision domain increasingly in demand from industries including augmented reality (AR) and virtual reality (VR). Rapid advances in implicit neural representation are also opening up exciting new possibilities for virtual reality experiences.

It’s an everyday scenario: you’re driving down the highway when out of the corner of your eye you spot a car merging into your lane without signaling. How fast can your eyes react to that visual stimulus? Would it make a difference if the offending car were blue instead of green? And if the color green shortened that split-second period between the initial appearance of the stimulus and the moment the eye begins moving towards it (a delay known to scientists as saccadic latency), could drivers benefit from an augmented reality overlay that made every merging vehicle green?

Qi Sun, a joint professor in Tandon’s Department of Computer Science and Engineering and the Center for Urban Science and Progress (CUSP), is collaborating with neuroscientists to find out.

He and his Ph.D. student Budmonde Duinkharjav, along with colleagues from Princeton, the University of North Carolina, and NVIDIA Research, recently authored the paper “Image Features Influence Reaction Time: A Learned Probabilistic Perceptual Model for Saccade Latency,” presenting a model that can be used to predict temporal gaze behavior, particularly saccadic latency, as a function of the statistics of a displayed image. Inspired by neuroscience, the model could ultimately have great implications for highway safety, telemedicine, e-sports, and any other arena in which AR and VR are leveraged.

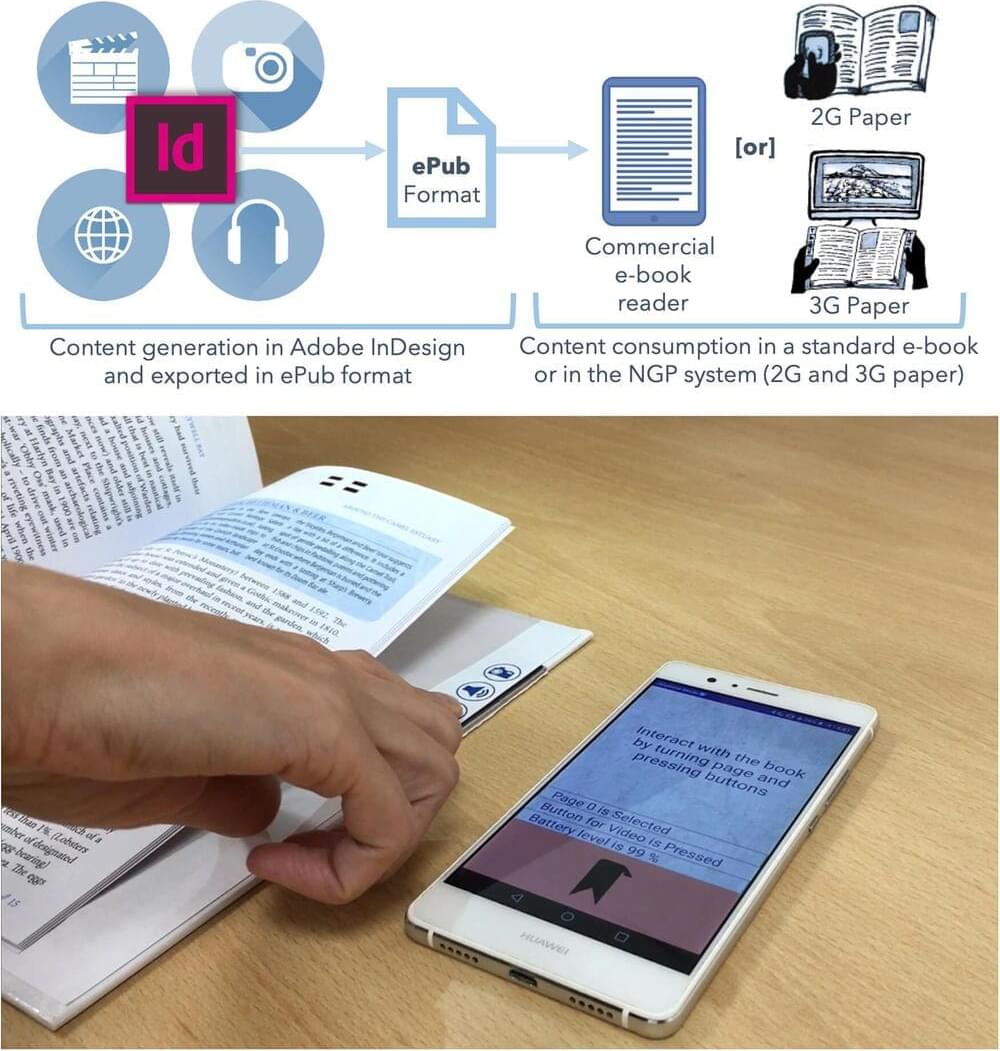

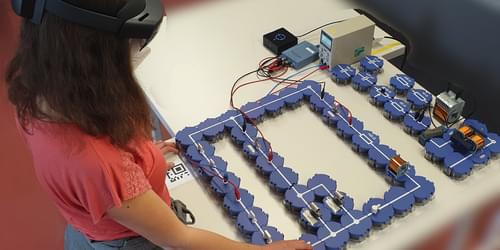

“Augmented books, or a-books, can be the future of many book genres, from travel and tourism to education. This technology exists to assist the reader in a deeper understanding of the written topic and get more through digital means without ruining the experience of reading a paper book.”

Power efficiency and pre-printed conductive paper are some of the new features which allow Surrey’s augmented books to now be manufactured on a semi-industrial scale. With no wiring visible to the reader, Surrey’s augmented reality books allow users to trigger digital content with a simple gesture (such as a swipe of a finger or turn of a page), which will then be displayed on a nearby device.

In a paper distributed via ArXiv, titled “Exploring the Unprecedented Privacy Risks of the Metaverse,” boffins at UC Berkeley in the US and the Technical University of Munich in Germany play-tested an “escape room” virtual reality (VR) game to better understand just how much data a potential attacker could access. Through a 30-person study of VR usage, the researchers – Vivek Nair (UCB), Gonzalo Munilla Garrido (TUM), and Dawn Song (UCB) – created a framework for assessing and analyzing potential privacy threats. They identified more than 25 examples of private data attributes available to potential attackers, some of which would be difficult or impossible to obtain from traditional mobile or web applications.

The metaverse that is rapidly becoming a part of our world has long been an essential part of the gaming community. Interaction-based games like Second Life, Pokemon Go, and Minecraft have long served as virtual social interaction platforms. The founder of Second Life, Philip Rosedale, and many security experts have lately been vocal about Meta’s impact on data privacy. Since the core concept is similar, it is possible to identify the potential data privacy issues apparent within Meta.

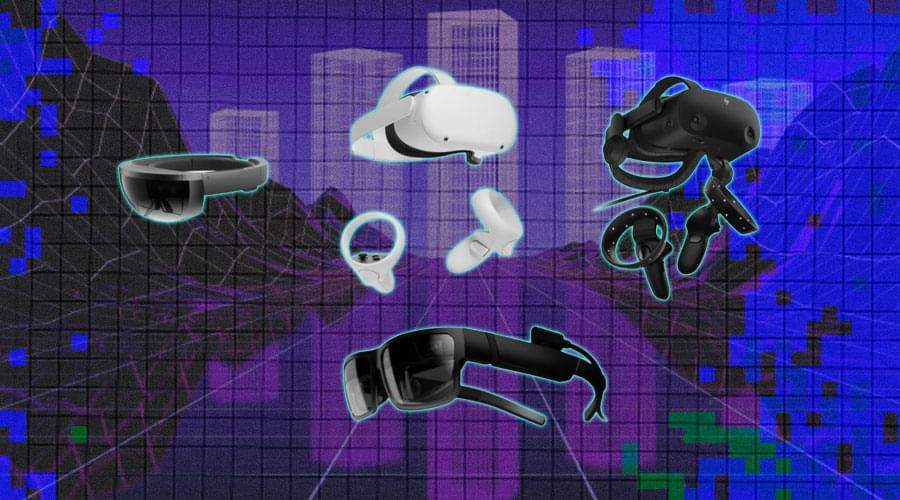

There has been a buzz going around the tech market that by the end of 2022, the metaverse could revive AR/VR device shipments and take them as high as 14.19 million units, compared to 9.86 million in 2021, a year-over-year increase of about 44%. The AR/VR device market is expected to grow despite component shortages and the difficulty of developing new technologies. The growth momentum will also be driven by the increased demand for remote interactivity stemming from the pandemic. But what will happen when these VR or metaverse headsets start stealing your precious data? Not just headsets but smart glasses too are prime suspects when it comes to privacy concerns.

Several weeks ago, Facebook introduced a new line of smart glasses called Ray-Ban Stories, which can take photos, shoot 30-second videos, and post them on the owner’s Facebook feed. Priced at US$299 and powered by Facebook’s virtual assistant, the web-connected shades can also take phone calls and play music or podcasts.

Microsoft’s big defense contract that looks to supply the US Army with modified HoloLens AR headsets isn’t going so well. As first reported by Bloomberg, the Senate panel that oversees defense spending announced significant cuts to the Army’s fiscal 2023 procurement request for the device.

Microsoft announced last year it had won a US Army defense contract worth up to $22 billion to develop an Integrated Visual Augmentation System (IVAS), a tactical AR headset for soldiers based on HoloLens 2 technology.

Now the Appropriations Defense Subcommittee has announced that it has cut $350 million from the Army’s procurement plans for IVAS, leaving around $50 million for the device. The subcommittee cites concerns about the program’s overall effectiveness.

The world of technology is rapidly shifting from flat media viewed in the third person to immersive media experienced in the first person. Recently dubbed “the metaverse,” this major transition in mainstream computing has ignited a new wave of excitement over the core technologies of virtual and augmented reality. But there is a third technology area known as telepresence that is often overlooked but will become an important part of the metaverse.

While virtual reality brings users into simulated worlds, telepresence (also called telerobotics) uses remote robots to bring users to distant places, giving them the ability to look around and perform complex tasks. This concept goes back to science fiction of the 1940s and a seminal short story by Robert A. Heinlein entitled Waldo. If we combine that concept with another classic sci-fi tale, Fantastic Voyage (1966), we can imagine tiny robotic vessels that go inside the body and swim around under the control of doctors who diagnose patients from the inside, and even perform surgical tasks.