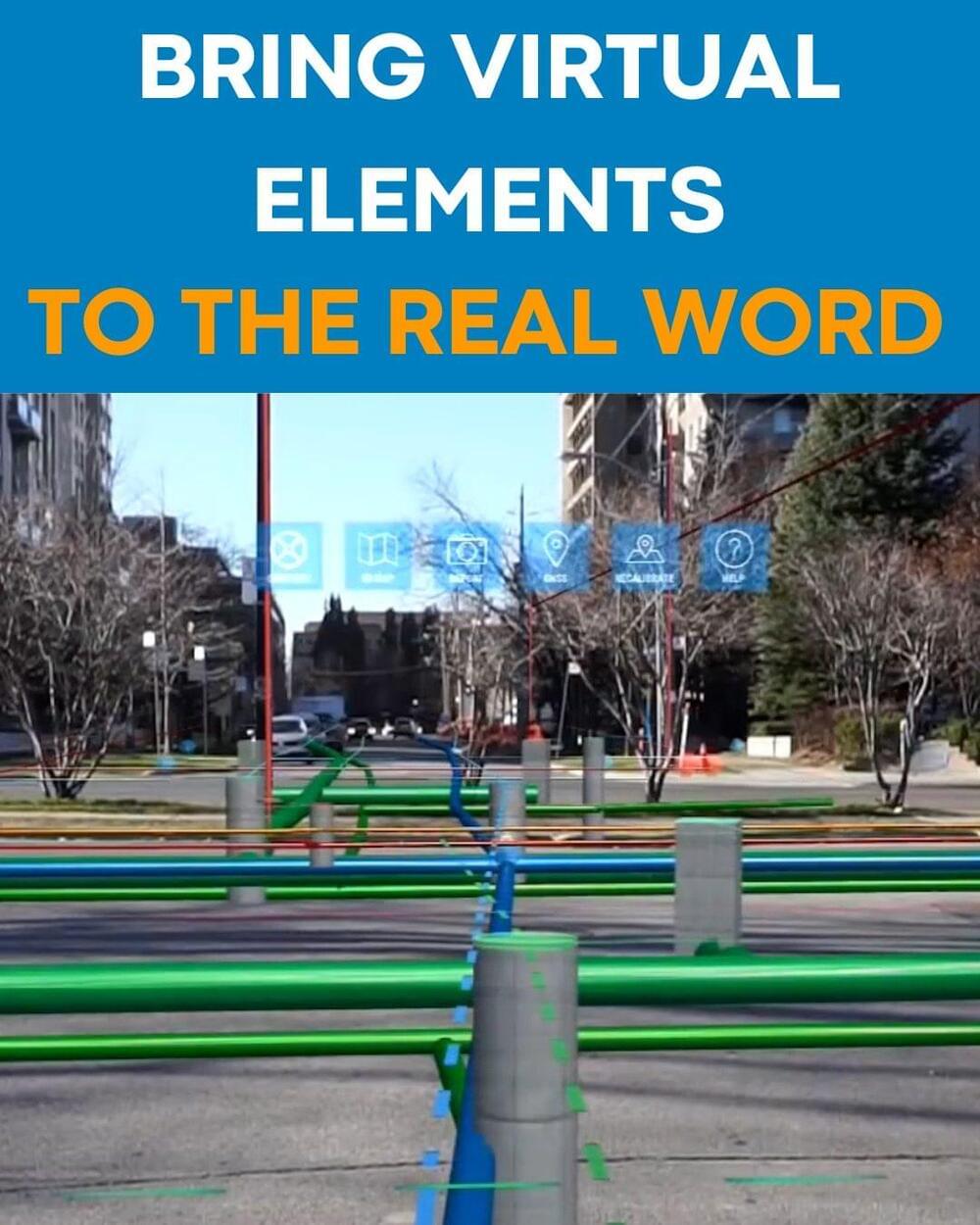


🎥 vGIS Inc.

Artificial Intelligence trained with first-person videos could better understand our world. At Meta, AR and AI development intersect in this space.

In the run-up to the CVPR 2022 computer vision conference, Meta is releasing the “Project Aria Pilot Dataset,” with more than seven hours of first-person videos spread across 159 sequences in five different locations in the United States. They show scenes from everyday life – doing the dishes, opening a door, cooking, or using a smartphone in the living room.

AI training for everyday life.

The latest in the company’s series of AddArmor vehicles, the 2022 B6 Escalade features lightweight B6 AR 500 bullet-resistant material in every body panel that offers B6-level protection, plus B6-level reinforced glass that can sustain prolonged strikes from rocks, bats, or other blunt objects, the company claims. A PA siren system (to disperse crowds) and run-flat tires also help prepare the Escalade for dangerous situations. B6 protection is the highest level of civilian pr…

The 2022 B6 Cadillac Escalade will let drug kingpins travel with the peace of mind that shots from an assault rifle can’t penetrate their luxury SUV.

The talk is provided on a Free/Donation basis. If you would like to support my work then you can paypal me at this link:

https://paypal.me/wai69

Or to support me longer term Patreon me at: https://www.patreon.com/waihtsang.

Unfortunately my internet link went down in the second Q&A session at the end and the recording cut off. Shame, loads of great information came out about FPGA/ASIC implementations, AI for the VR/AR, C/C++ and a whole load of other riveting and most interesting techie stuff. But thankfully the main part of the talk was recorded.

TALK OVERVIEW

This talk is about the realization of the ideas behind the Fractal Brain theory and the unifying theory of life and intelligence discussed in the last Zoom talk, in the form of useful technology. The Startup at the End of Time will be the vehicle for the development and commercialization of a new generation of artificial intelligence (AI) and machine learning (ML) algorithms.

We will show in detail how the theoretical fractal brain/genome ideas lead to a whole new way of doing AI and ML that overcomes most of the central limitations of, and problems associated with, existing approaches. A compelling feature of this approach is that it is based on how neurons and brains actually work, unlike existing artificial neural networks, which, though making sensational headlines, are impeded by severe limitations and are based on an out-of-date understanding of neurons from about 70 years ago. We hope to convince you that this new approach really is the path to true AI.

In the last Zoom talk, we discussed a great unifying of scientific ideas relating to life & brain/mind science through the application of the mathematical idea of symmetry. In turn the same symmetry approach leads to a unifying of a mass of ideas relating to computer and information science. There’s been talk in recent years of a ‘master algorithm’ of machine learning and AI. We’ll explain that it goes far deeper than that and show how there exists a way of unifying into a single algorithm, the most important fundamental algorithms in use in the world today, which relate to data compression, databases, search engines and also existing AI/ML. Furthermore and importantly this algorithm is completely fractal or scale invariant. The same algorithm which is able to perform all these functionalities is able to run on a micro-controller unit (MCU), mobile phone, laptop and workstation, going right up to a supercomputer.

The applications and utility of this new technology are endless. We will discuss the road map by which the sort of theoretical ideas I’ve been discussing in the Zoom, academic and public talks over the past few years, and which I’ve written about in the Fractal Brain Theory book, will become practical technology, and how the Java/C/C++ code running on my workstation and mobile phones will become products and services.

In the coming years, everyone will get to observe how the metaverse evolves towards immersive experiences in hyperreal virtual environments filled with avatars that look and sound exactly like us. Neal Stephenson’s Snow Crash describes a vast world full of amusement parks, houses, entertainment complexes, and worlds within themselves, all connected by a virtual street tens of thousands of miles long. For those who are still not familiar with the metaverse, it is a virtual world in which users put on virtual reality goggles and navigate a stylized version of themselves, known as an avatar, through virtual workplaces, entertainment venues, and other activities. The metaverse will be an immersive version of the internet with interactive features using different technologies such as virtual reality (VR), augmented reality (AR), 3D graphics, 5G, holograms, NFTs, blockchain, haptic sensors, and artificial intelligence (AI). To scale personalized content experiences to billions of people, one potential answer is generative AI, the process of using AI algorithms on existing data to create new content.

In computing, procedural generation is a method of creating data algorithmically as opposed to manually, typically through a combination of human-generated assets and algorithms coupled with computer-generated randomness and processing power. In computer graphics, it is commonly used to create textures and 3D models.
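As a rough illustration of the idea, the sketch below builds a small grayscale texture from a seed using simple value noise (random values on a coarse grid, smoothed by bilinear interpolation). The function name `generate_texture` and the `cell` parameter are assumptions made for this example and do not come from any particular engine or library.

```python
import random


def generate_texture(width, height, seed, cell=4):
    """Return a height x width grid of floats in [0, 1] built from value noise."""
    # Seeded generator: the same seed always reproduces the same texture.
    rng = random.Random(seed)

    # Random values on a coarse lattice, with one spare row and column
    # so interpolation never reads past the edge.
    cols = width // cell + 2
    rows = height // cell + 2
    lattice = [[rng.random() for _ in range(cols)] for _ in range(rows)]

    def lerp(a, b, t):
        return a + (b - a) * t

    texture = []
    for y in range(height):
        gy, ty = divmod(y, cell)
        ty /= cell
        row = []
        for x in range(width):
            gx, tx = divmod(x, cell)
            tx /= cell
            # Bilinear interpolation between the four surrounding lattice values.
            top = lerp(lattice[gy][gx], lattice[gy][gx + 1], tx)
            bottom = lerp(lattice[gy + 1][gx], lattice[gy + 1][gx + 1], tx)
            row.append(lerp(top, bottom, ty))
        texture.append(row)
    return texture
```

Calling `generate_texture(16, 8, seed=42)` twice yields identical grids, which is what lets a game store only a seed instead of the generated data itself.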

Algorithmic difficulty is typically seen in Diablo-style RPGs and some roguelikes, which use instancing of in-game entities to create randomized items. Less frequently, it can be used to determine the relative difficulty of hand-designed content that is subsequently placed procedurally, as can be seen with the monster design in Unangband. For example, the designer can rapidly create content but leave it up to the game to determine how challenging that content is to overcome, and consequently where in the procedurally generated environment it will appear. Notably, the Touhou series of bullet hell shooters uses algorithmic difficulty. Though users are only allowed to choose certain difficulty values, several community mods enable ramping the difficulty beyond the offered values.
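The placement idea can be sketched as follows: the designer supplies only raw monster stats, and the game derives a difficulty score and a dungeon depth from them. Everything here (the `Monster` fields, the scoring formula, the assumed player damage, and `assign_depths`) is hypothetical and is not how Unangband or any other specific game computes difficulty.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Monster:
    name: str
    hit_points: int
    damage_per_turn: int
    speed: float  # actions per player turn


def difficulty(monster):
    """Hypothetical score: damage dealt over the turns the monster survives."""
    player_damage_per_turn = 10  # assumed constant for this sketch
    # Ceiling division: turns the player needs to defeat the monster.
    turns_alive = -(-monster.hit_points // player_damage_per_turn)
    return turns_alive * monster.damage_per_turn * monster.speed


def assign_depths(monsters, max_depth):
    """Rank monsters by score and spread them across levels 1..max_depth."""
    ranked = sorted(monsters, key=difficulty)
    if len(ranked) == 1:
        return {ranked[0].name: 1}
    return {
        m.name: 1 + round(i * (max_depth - 1) / (len(ranked) - 1))
        for i, m in enumerate(ranked)
    }


monsters = [
    Monster("dragon", hit_points=200, damage_per_turn=25, speed=1.5),
    Monster("rat", hit_points=5, damage_per_turn=1, speed=1.0),
    Monster("orc", hit_points=30, damage_per_turn=4, speed=1.0),
]
depths = assign_depths(monsters, max_depth=10)
```

With this scheme the designer never assigns a level by hand: changing a monster's stats changes its score, and its place in the dungeon follows automatically.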

What if we could look into the future to see how every aspect of our daily lives – from raising pets and house plants to what we eat and how we date – will be impacted by technology? We can, and should, expect more from the future than the dystopia promised in current science fiction. The Future Of… will reveal surprising and personal predictions about the rest of our lives — and the lives of generations to come.

SUBSCRIBE: http://bit.ly/29qBUt7

About Netflix:

Netflix is the world’s leading streaming entertainment service with 222 million paid memberships in over 190 countries enjoying TV series, documentaries, feature films and mobile games across a wide variety of genres and languages. Members can watch as much as they want, anytime, anywhere, on any internet-connected screen. Members can play, pause and resume watching, all without commercials or commitments.

The Future Of | Official Trailer | Netflix.

https://youtube.com/Netflix.

This docuseries explores surprising predictions about augmented reality, wearable tech and other innovations that will impact our lives in the future.

There’s a lot going on when it comes to Apple’s rumored mixed reality headset, which is expected to combine both AR and VR technologies into a single device. However, at the same time, the company has also been working on new AR glasses. According to Haitong Intl Tech Research analyst Jeff Pu, Apple’s AR glasses will be announced in late 2024.

In a note seen by 9to5Mac, Pu mentions that Luxshare will remain as one of Apple’s main suppliers for devices to come between late 2022 and 2024. Among all devices, the analyst highlights products such as Apple Watch Series 8, iPhone 14, and Apple’s AR/VR headset. But more than that, Pu believes that Apple plans to introduce new AR glasses in the second half of 2024.

At this point, details about Apple’s AR glasses are unknown. What we do know so far is that, unlike Apple’s AR/VR headset, the new AR glasses will be highly dependent on the iPhone due to design limitations. Analyst Ming-Chi Kuo said in 2019 that the rumored “Apple Glasses” will act more like a display for the iPhone, similar to the first generation Apple Watch.

It doesn’t have to be all fun and games in the Metaverse, especially when its best use cases are the ones that need a different reality the most. Thanks to a few companies that have large marketing machines, the word “Metaverse” has become muddled in hype and controversy. While the current use of the coined word might be new to our ears, the technologies that empower it have been around for quite some time now. And they aren’t always used for games or entertainment, even if that is what everyone thinks these days. In fact, some of the most frequent early adopters of these technologies come from the medical field, which continuously tests new equipment, theories, and digital experiences to help improve lives. So while mainstream media, carmakers, and social networks continue to shine the light on new ways to experience different worlds, the Metaverse, its concepts, and its applications are already sneaking their way into medical and scientific institutions, ready to take healthcare to the next, augmented reality level.

Project Cambria, the next-generation standalone mixed reality headset, is coming out later this year.

“This demo was created using Presence Platform, which we built to help developers build mixed reality experiences that blend physical and virtual worlds. The demo, called ‘The World Beyond,’ will be available on App Lab soon. It’s even better with full color passthrough and the other advanced technologies we’re adding to Project Cambria. More details soon.”

Let’s Get into it!

SUPPORT THE CHANNEL

► Become a VR Techy Patreon → https://www.patreon.com/TyrielWood.

► Become a Sponsor on YouTube → https://www.youtube.com/channel/UC5rM…

► Get some VR Tech Merch → tyriel-wood.creator-spring.com.

► VRCovers HERE: https://vrcover.com/?itm=274

► VR Rock Prescription lens: www.vr-rock.com (5% discount code: vrtech)

FOLLOW ME ON:

►Twitter → https://twitter.com/Tyrielwood.

►Facebook → https://www.facebook.com/TyrielWoodVR

► Instagram → https://www.instagram.com/tyrielwoodvr.

►Join our Discord → https://discord.gg/nsSjXx4kfj.

A BIG THANKS to my VR Techy Patreons for their support:

Trond Hjerde, Dinesh Punni, Randy Leestma, yu gao, Daniel Nehring II, Alexandre Soares da Silva, Kiyoshi Akita, Tolino Marco, Infligo, VeryEvilShadow.

MUSIC

► My Music from Epidemic Sounds → https://www.epidemicsound.com/referral/kf0ycv.

#Meta #VRHeadset #Cambria.