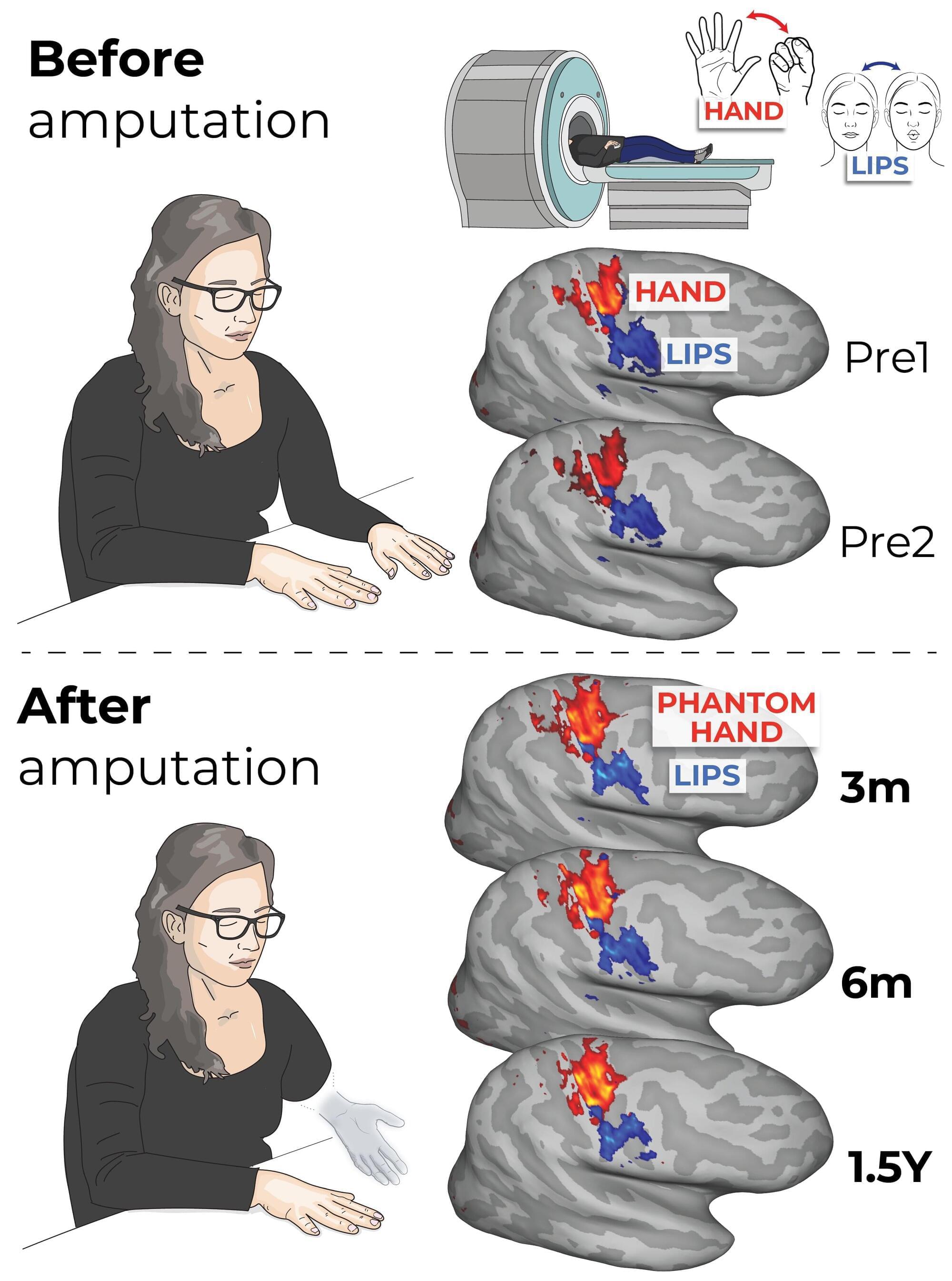

The brain holds a “map” of the body that remains unchanged even after a limb has been amputated, contrary to the prevailing view that it rearranges itself to compensate for the loss, according to new research from scientists in the UK and US.

The findings, published in Nature Neuroscience, have implications for the treatment of “phantom limb” pain, but also suggest that controlling robotic replacement limbs via neural interfaces may be more straightforward than previously thought.

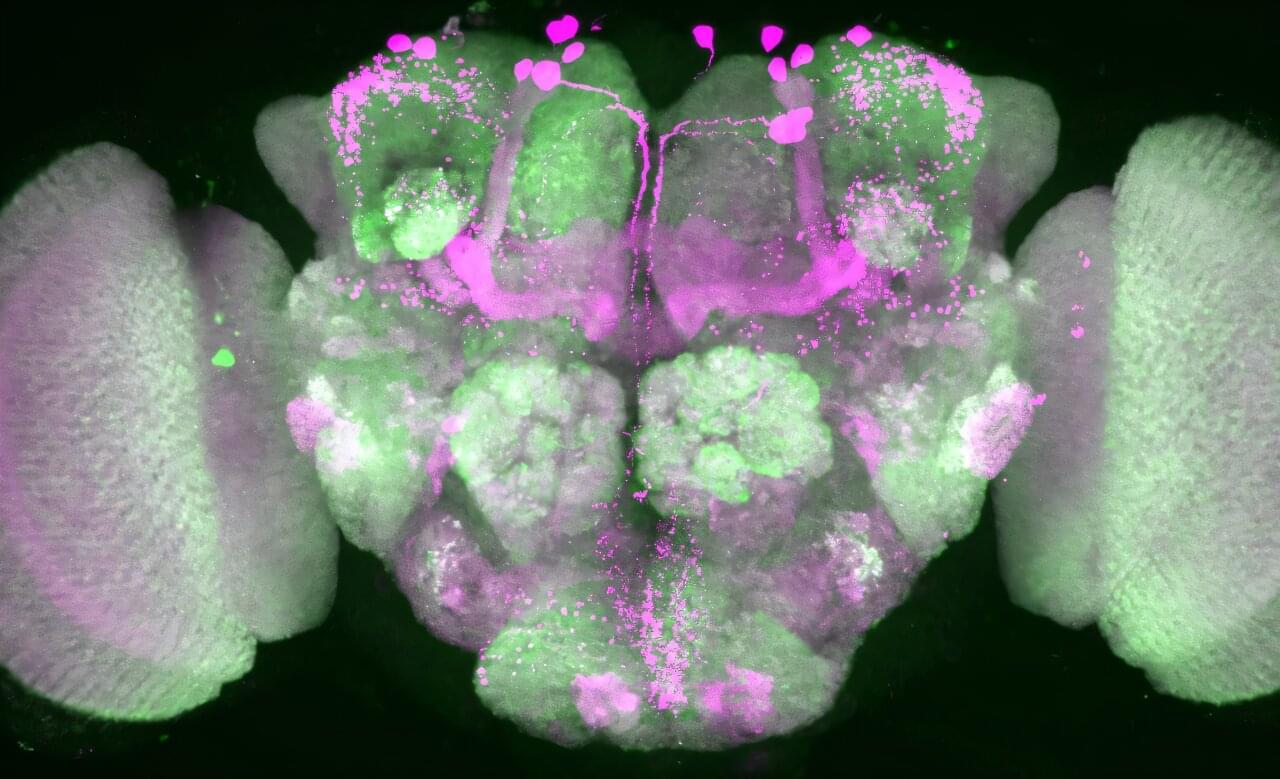

Previous studies have shown that an area of the brain known as the somatosensory cortex contains a map of the body, with different regions corresponding to different body parts.