Google’s Gemma AI helped discover a drug combo that makes tumors immune-visible, marking a milestone for AI-driven biology.

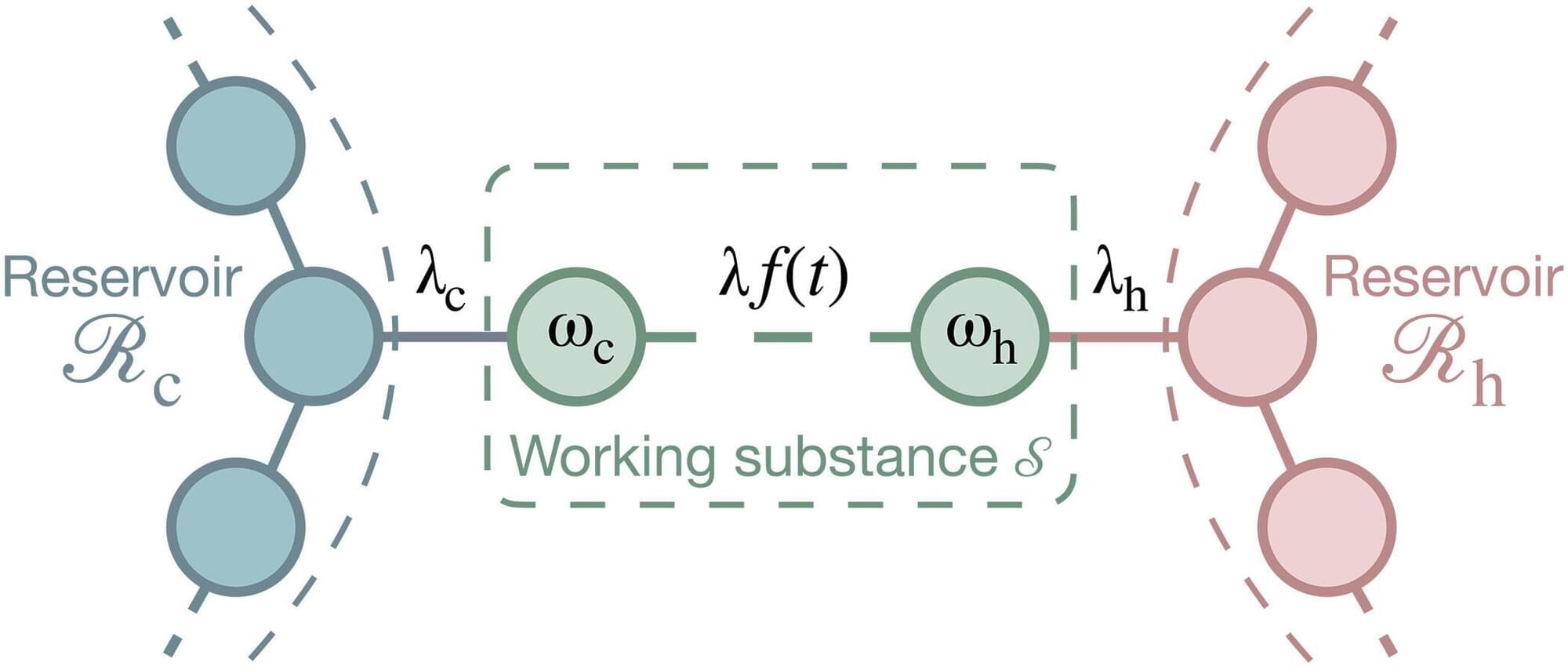

Two physicists at the University of Stuttgart have proven that the Carnot principle, a central law of thermodynamics, does not apply to objects on the atomic scale whose physical properties are linked (so-called correlated objects). This discovery could, for example, advance the development of tiny, energy-efficient quantum motors. The derivation has been published in the journal Science Advances.

Join Adam Perella and me at the Schellman AI Summit on November 18th, 2025, at Schellman HQ in Tampa, Florida.

Your AI doesn’t just use data; it consumes it like a hungry teenager at a buffet.

This creates a problem when the same AI system operates across multiple regulatory jurisdictions and is subject to conflicting legal requirements. Imagine your organization trains its AI in California, deploys it in Dublin, and serves users globally.

This means that you operate in multiple jurisdictions, each imposing different regulatory requirements on your organization.

Welcome to the fragmentation of cross-border AI governance, where over 1,000 state AI bills introduced in 2025 meet the EU’s comprehensive regulatory framework, creating headaches for businesses operating internationally.

As compliance and attestation leaders, we’re well-positioned to offer advice on how to face this challenge as you establish your AI governance roadmap.

Cross-border AI accountability isn’t going away; it’s only accelerating. The companies that thrive will be those that treat regulatory complexity as a competitive advantage, not a compliance burden.

Microrobots, small robotic systems that are less than 1 centimeter (cm) in size, could tackle some real-world tasks that cannot be completed by bigger robots. For instance, they could be used to monitor confined spaces and remote natural environments, to deliver drugs or to diagnose diseases or other medical conditions.

Researchers at Seoul National University recently introduced new modular and durable microrobots that can adapt to their surroundings, effectively navigating a range of environments. These tiny robots, introduced in a paper published in Advanced Materials, can be fabricated using 3D printing technology.

“Microrobots, with their insect-like size, are expected to make contributions in fields where conventional robots have struggled to operate,” Won Jun Song, first author of the paper, told Tech Xplore. “However, most microrobots developed to date have been highly specialized, tailored for very specific purposes, making them difficult to deploy across diverse environments and applications. Our goal was to present a new approach toward creating general-purpose microrobots.”