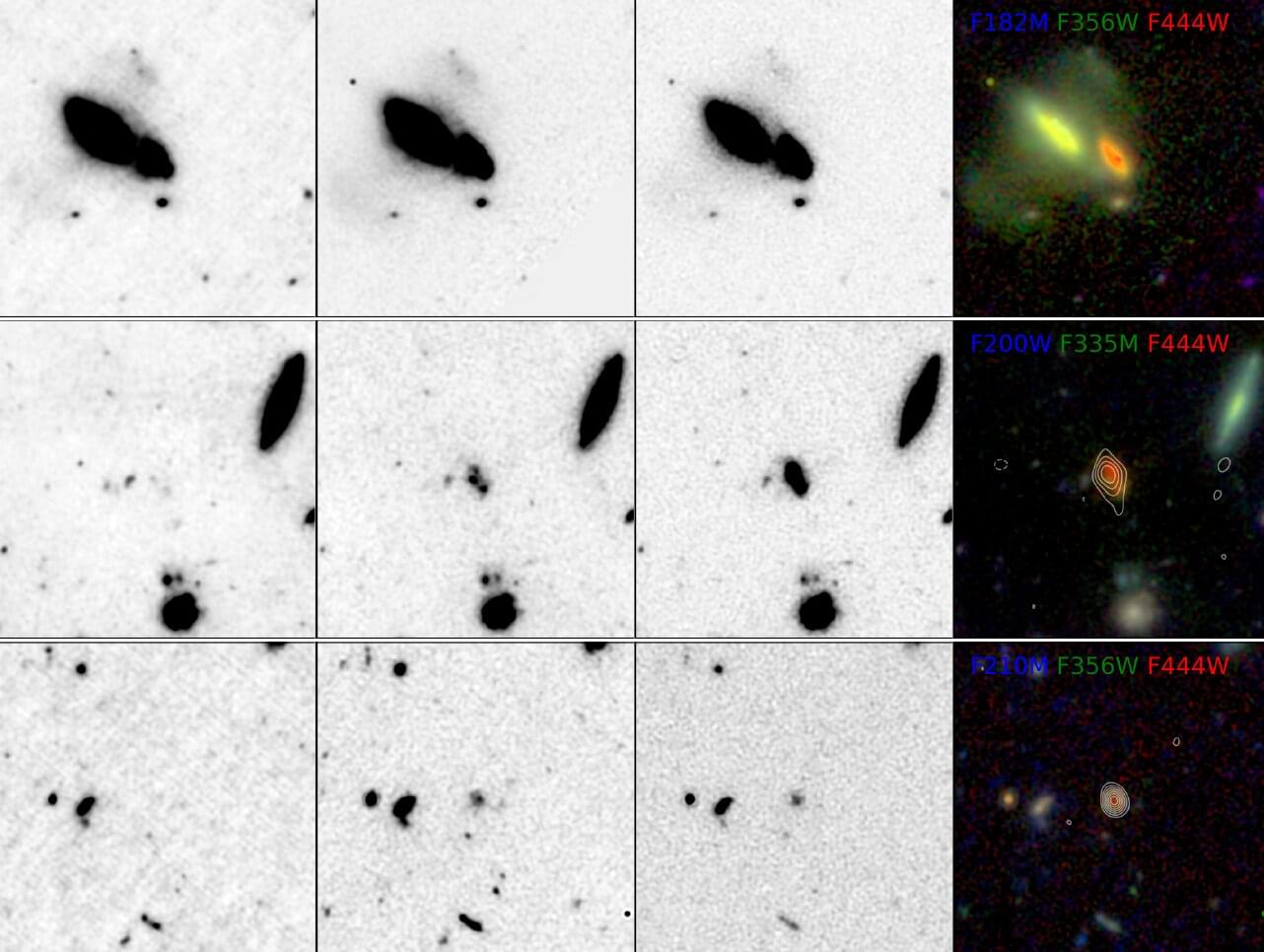

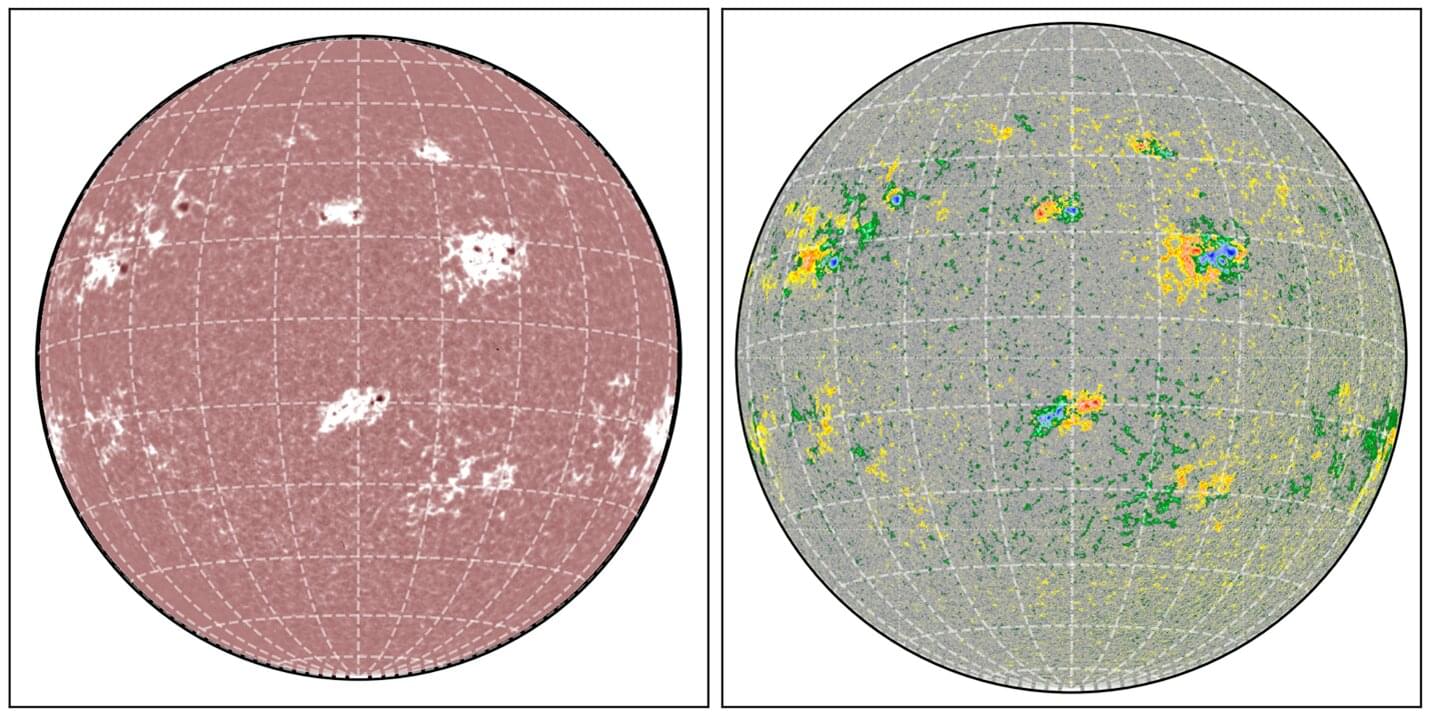

Astronomers have discovered a vast, dense cluster of massive galaxies that existed just 1 billion years after the Big Bang, each forming stars at an intense rate from collapsing clouds of gas and dust. The structure, reported in Astronomy & Astrophysics by an international team led by Guilaine Lagache at Aix-Marseille University, appears to challenge existing models of how rapidly stars could have formed in the early universe.

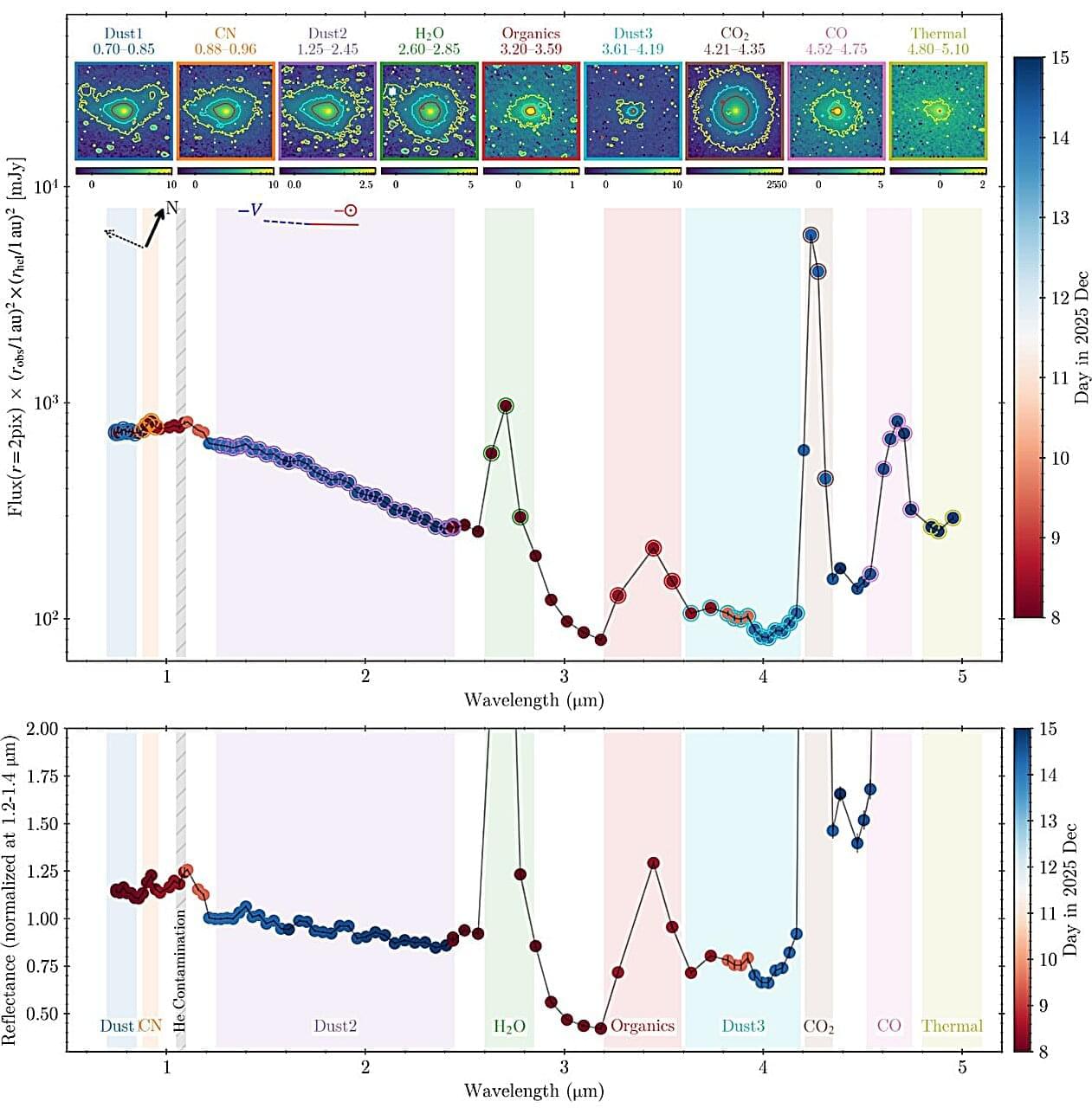

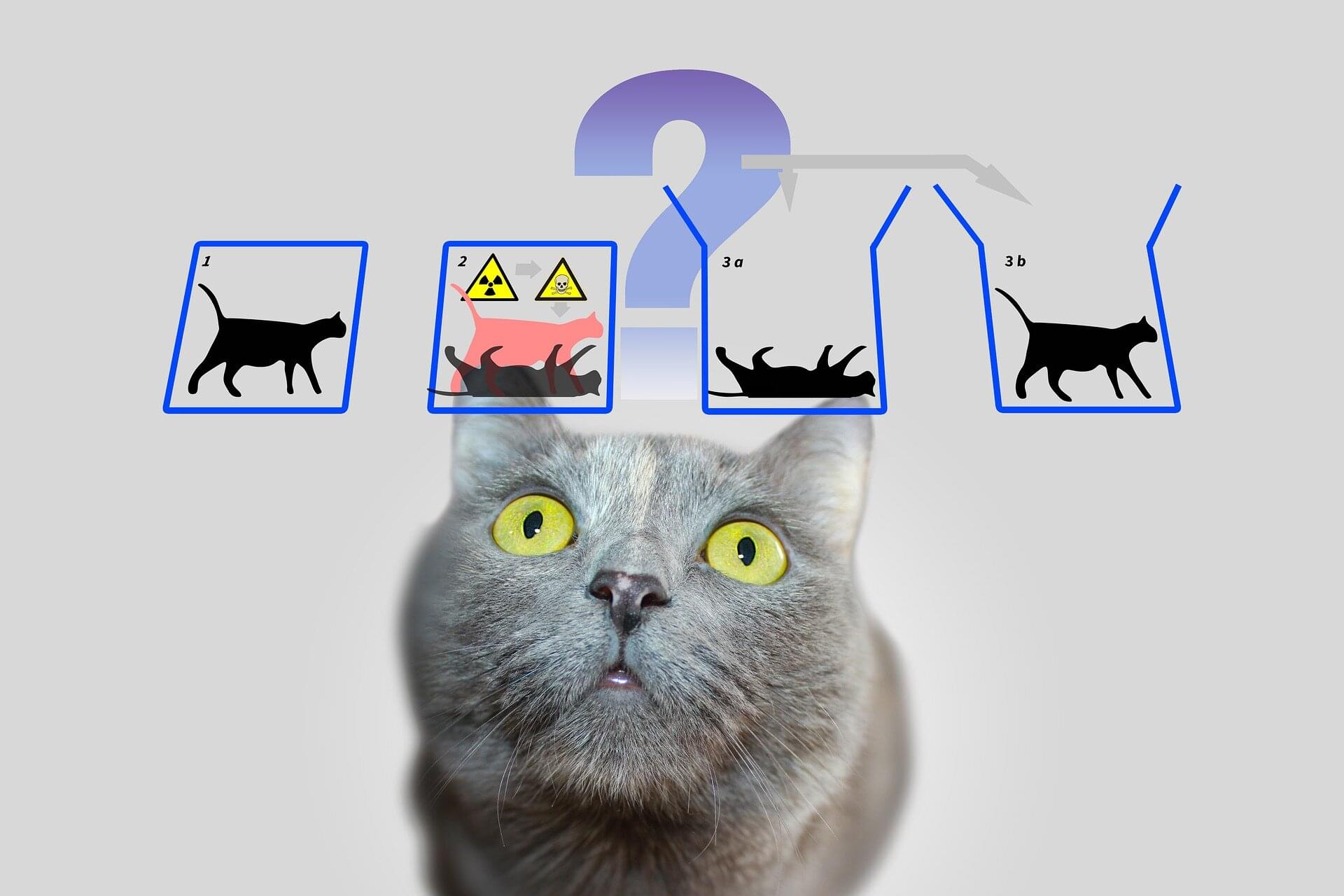

In many newly forming galaxies, immense clouds of gas and dust collapse under their own gravity, igniting rapid bursts of star formation. Astronomers can study this process by observing extremely distant galaxies, whose light is only now reaching Earth after traveling for more than 12 billion years.

However, these observations pose a challenge. Because dust within these distant galaxies strongly absorbs the light produced by newly forming stars, the star-forming regions are often impossible to observe directly at visible wavelengths.