With transformer #AI protein #design and major advances in #DNA #synthesis, how do we deal with #biosecurity concerns?

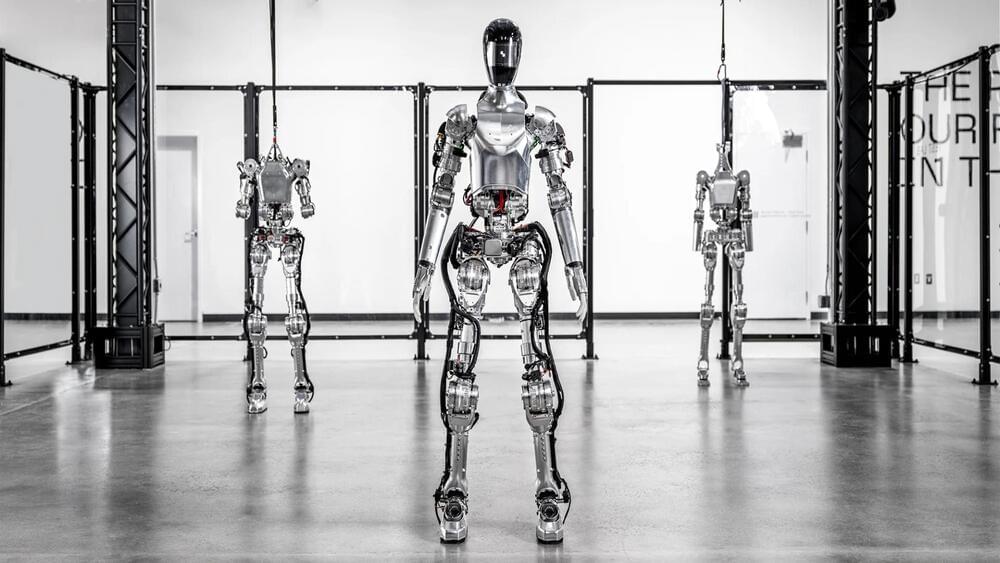

In the future, soft robots will be able to perform tasks that cannot be done by conventional robots. These soft robots could be used in terrain that is difficult to access and in environments where they are exposed to chemicals or radiation that would harm electronically controlled robots made of metal. This requires such soft robots to be controllable without any electronics, which is still a challenge in development.

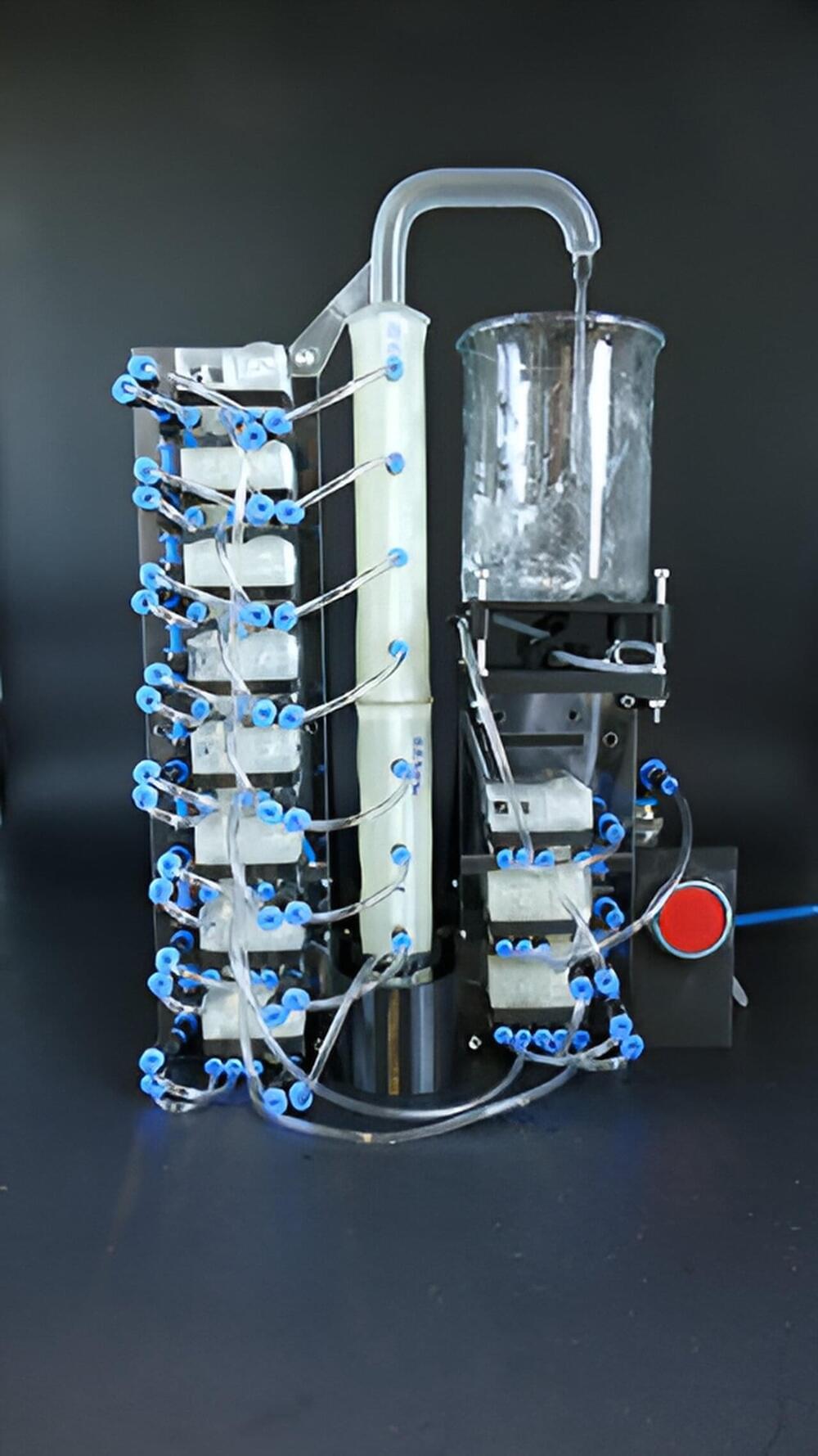

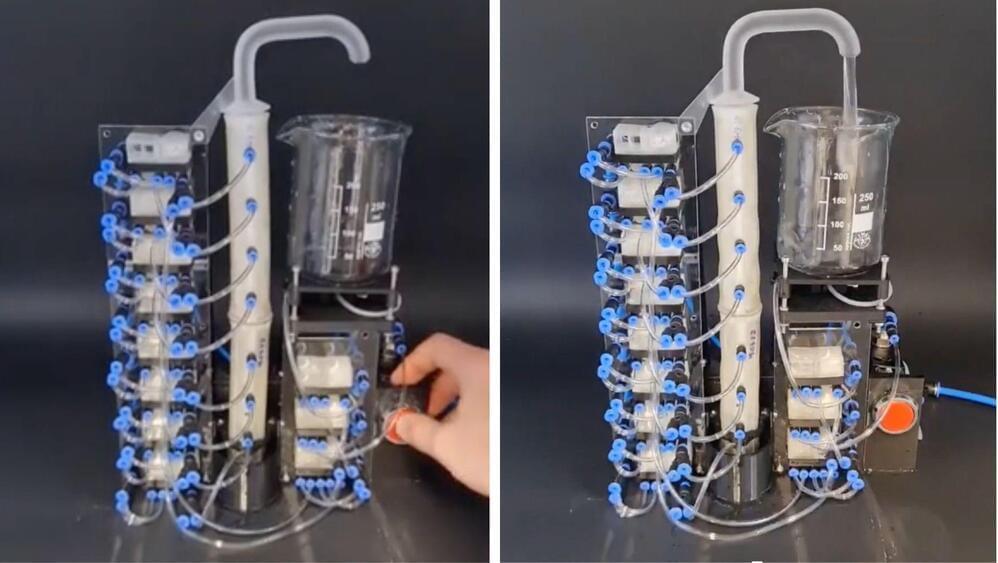

A research team at the University of Freiburg has now developed 3D-printed pneumatic logic modules that control the movements of soft robots using air pressure alone. These modules enable logical switching of the air flow and can thus imitate electrical control.

The modules make it possible for the first time to produce flexible and electronics-free soft robots entirely in a 3D printer using conventional filament printing material.

Frame rate would be even worse than the original, though. MUCH worse.

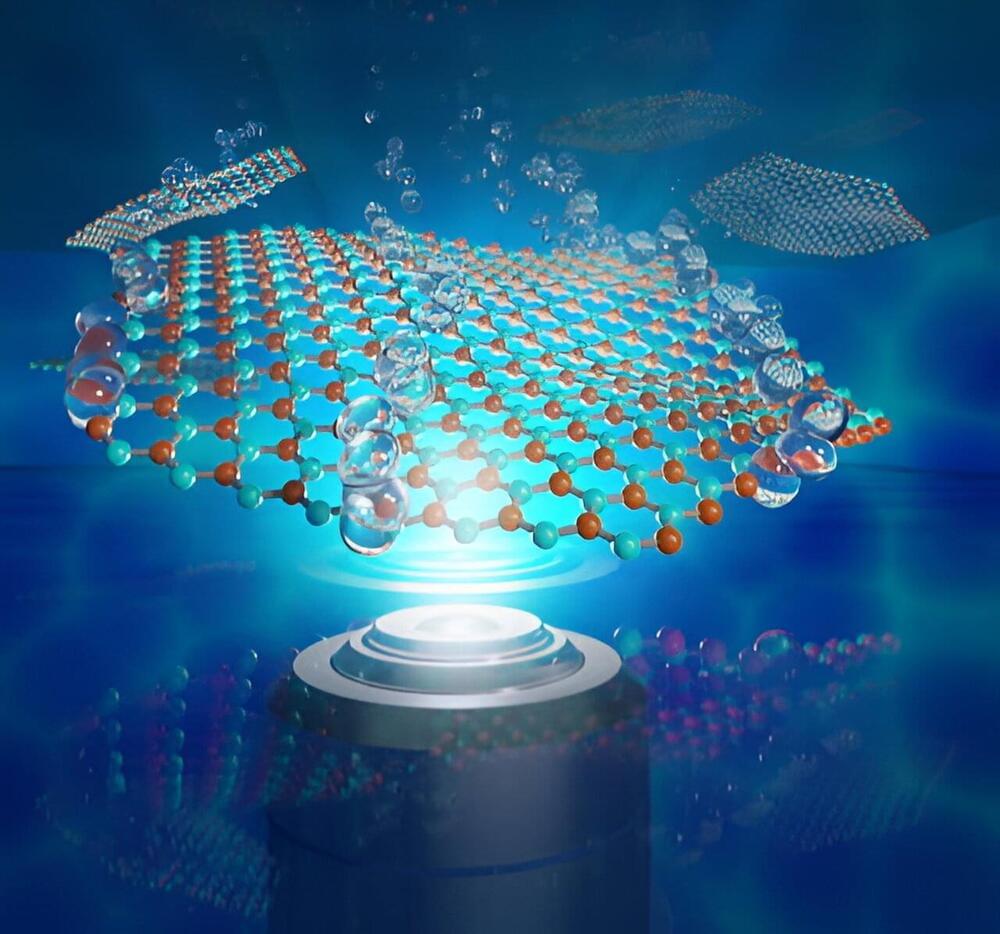

A team of Rice University researchers mapped out how flecks of 2D materials move in liquid, knowledge that could help scientists assemble macroscopic-scale materials with the same useful properties as their 2D counterparts.

“Two-dimensional nanomaterials are extremely thin—only several atoms thick—sheet-shaped materials,” said Utana Umezaki, a Rice graduate student who is a lead author on a study published in ACS Nano. “They behave very differently from materials we’re used to in daily life and can have really useful properties: They can withstand a lot of force, resist high temperatures and so on. To take advantage of these unique properties, we have to find ways to turn them into larger-scale materials like films and fibers.”

To maintain their special properties in bulk form, sheets of 2D materials have to be properly aligned, a process that often occurs in the solution phase. The Rice researchers focused on graphene, which is made up of carbon atoms, and hexagonal boron nitride, a material with a similar structure to graphene but composed of boron and nitrogen atoms.

The organoids are the size of a grain of sand, and a special robot is used to screen which treatment kills off the tumour most effectively.

It helps take the guesswork out of treating advanced bowel cancer, which can be difficult to beat.

“Each time you give a patient an ineffective treatment, you lose two to three months on something that won’t work,” Gibbs said.

Researchers have developed an ingenious air-powered soft valve circuit system devoid of electronics, showcasing its utility in a drink dispenser and its durability as a car drives over it.

The 3D-printed valve system shows how well soft devices without electronics can work, even under conditions that could disable conventional robots.

According to researchers at the University of Freiburg, its integration into everyday applications heralds a new era in robust and adaptable robotics. Soft circuit devices, which are flexible and don’t use metal, can handle damage much better than those with delicate electronics. They can survive being crushed or exposed to harsh chemicals without breaking.