Past studies suggest that how people spend their time outside of work or academic pursuits can contribute to their overall well-being and life satisfaction. Yet little research has examined how humans perceive the different leisure activities they engage in, or the extent to which they feel these activities contribute to their life's purpose.

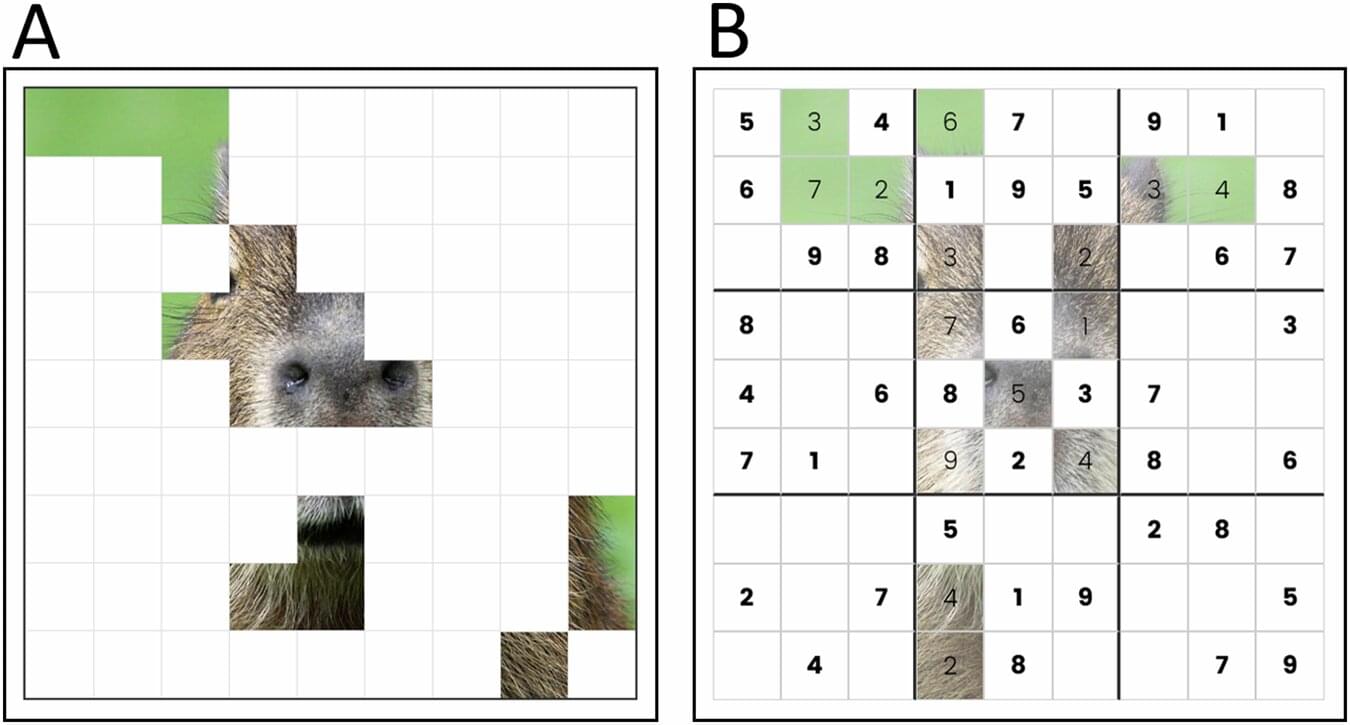

Researchers at the University of Toronto recently carried out a study exploring how effort, specifically in the context of leisure activities or pastimes, relates to meaning and purpose. Their findings, published in Communications Psychology, suggest that activities requiring more effort, such as Sudoku puzzles or other mentally challenging games, are perceived as more meaningful than less demanding activities, such as watching videos on social media, even though both kinds of activity can be equally enjoyable.

“The paper was inspired largely by some recent work from us and others looking at the seemingly paradoxical nature of effort,” Aidan Campbell, first author of the paper, told Medical Xpress. “It’s something people tend to minimize and almost universally experience as frustrating or unpleasant, but at the same time, many actively seek it out or view effort as this virtuous thing which enhances the value of one’s actions.