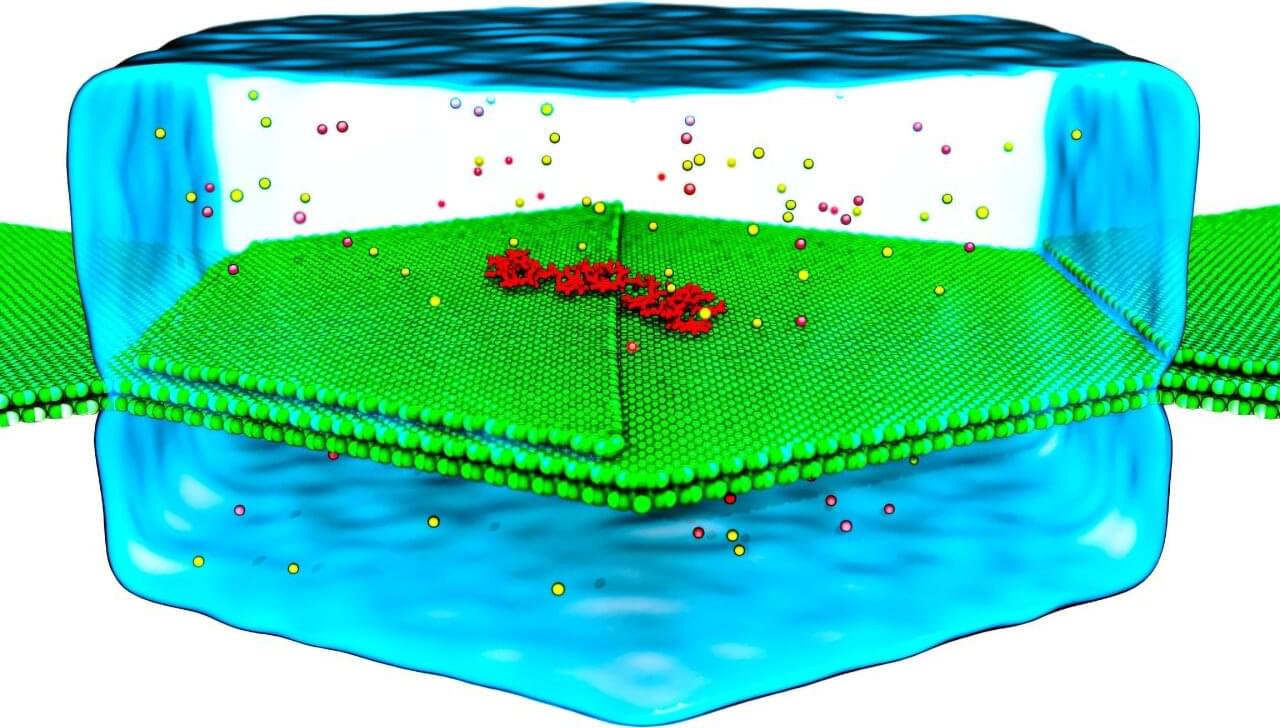

Researchers studying how large AI models such as ChatGPT learn and remember information have discovered that their memory and reasoning skills occupy distinct parts of their internal architecture. Their insights could help make AI safer and more trustworthy.

AI models trained on massive datasets rely on at least two major processing features. The first is memory, which allows the system to retrieve and recite information. The second is reasoning, which lets it solve new problems by applying generalized principles and learned patterns. But until now, it wasn’t known whether an AI model’s memory and its general reasoning abilities were stored in the same place.

So researchers at the startup Goodfire.ai decided to investigate the internal structure of large language and vision models to understand how they work.