It says the new form of transportation is faster, safer, cheaper, and more sustainable than existing modes.

Category: transportation – Page 337

Tesla Model Y pre-production units start rolling out in Giga Texas

When Gigafactory Texas was starting its construction, officials in the area started to fondly describe the project’s pace as the “Speed of Elon” on account of its rapid progress. This “Speed of Elon” seems to have never let up since Giga Texas broke ground about 13 months ago as the first image of a pre-production Tesla Model Y was just shared online.

The image was initially shared on Instagram, and it depicted a black Model Y that looked fresh out of the production line. The post was eventually deleted, but not before the image was shared across platforms such as Twitter and Reddit. It’s difficult not to be excited, after all, considering that Giga Texas broke ground just over a year ago in July 2020.

Based on the recently-shared image, it appears that Giga Texas’ Model Y production facility is now ready to start cranking out the all-electric crossovers, at least to some degree. The vehicle was not alone in the picture either, as another Model Y in the background could also be seen passing through the assembly line.

Tesla’s 4680 battery cell pilot production line hits 70–80% yield: report

Tesla has a number of programs that have the potential to change markets, and one of these is arguably the 4680 cell. Created using a dry electrode process and optimized for price and efficiency, the 4680 batteries could very well be the key to Tesla’s possible invasion of the mainstream auto and energy market. If Tesla pulls off its 4680 production ramp, its place at the summit of the sustainable energy market would be all but ensured.

Unfortunately, Tesla’s publicly disclosed target for the 4680 cells’ production ramp appears to have been made on “Elon Time.” This means that the target Tesla set during Battery Day last year, hitting a capacity of 10 GWh by late September 2021, included some optimistic assumptions. As with other projects like Elon Musk’s Alien Dreadnaught factory, the pilot production of the 4680 cells has met some challenges.

Tesla admitted to these difficulties during the Q2 2021 earnings call, when Elon Musk explained that one of the main challenges in the 4680 cell production ramp was related to the batteries’ calendering, the process in which the dry cathode material is squashed to a particular height. Partly due to the use of nickel in the 4680 cells, which is extremely hard, some of the calender rolls end up being dented.

An ‘Uncrashable’ Car? Luminar Says Its Lidar Can Get There

As a recent New York Times article highlighted, self-driving cars are taking longer to come to market than many experts initially predicted. Automated vehicles where riders can sit back, relax, and be delivered to their destinations without having to watch the road are continuously relegated to the “not-too-distant future.”

There’s debate not just on when this driverless future will arrive; there’s also a lack of consensus on how we’ll get there, that is, which technologies are most efficient, safe, and scalable to take us from human-driven to computer-driven vehicles (Tesla is the main outlier in this debate). The big players are lidar, cameras, ultrasonic sensors, and radar. Last week, one lidar maker showcased some new technology that it believes will tip the scales.

California-based Luminar has built a lidar it calls Iris that not only has a longer range than existing systems but is also more compact; gone are the days of a big, bulky setup that all but takes over the car. Perhaps most importantly, the company is aiming to manufacture and sell Iris at a price point well below the industry standard.

Aquas, a flying ship that moves passengers and cargo at speeds up to 200 km/h

These kinds of seaplanes will be mainly used for passenger transport but could also improve search and rescue operations at sea, thanks to the advantage of offering versatile loading and unloading. This multi-purpose flying vessel concept was inspired by the new needs and demands of potential operators worldwide.

History, however, shows that, like everything, ground-effect marine craft also have their drawbacks. A ship hovering just above the water cannot tilt much during flight (so as not to hit the water), so any change of flight direction must be planned early because executing it takes quite a long time.

RDC Aqualines boasts of being a multinational company specializing in the design, development, and future production of a new generation of marine transportation vessels, using mainly ground effect technology. The “flying ship,” as they call it, is offered in various sizes, from a 3-seater to an ekranoplan-like bike, a hydrofoil speedboat, and the ekranoplan-like ferry described above.

HyPoint, Piasecki team up to develop hydrogen fuel cell systems for eVTOLs

The California-based startup HyPoint has collaborated with the aircraft developer Piasecki Aircraft Corporation (PiAC) to develop hydrogen fuel cell systems for electric vertical takeoff and landing (eVTOL) vehicle applications. The ultimate goal is to deliver a customizable, FAA-certified, zero carbon-emission hydrogen fuel cell system to the global eVTOL market.

Through the partnership, Piasecki will gain an exclusive license to the technology created as part of the partnership, while HyPoint will maintain ownership of its underlying hydrogen fuel cell technology.

HyPoint’s revolutionary approach uses compressed air for both cooling and oxygen supply to deliver a hydrogen fuel cell system that significantly outperforms existing battery and hydrogen fuel cell alternatives. According to the company, the new system will offer eVTOL makers four times the energy density of existing lithium-ion batteries, double the specific power of existing hydrogen fuel cell systems, and operating costs up to 50% lower than those of turbine-powered rotorcraft.

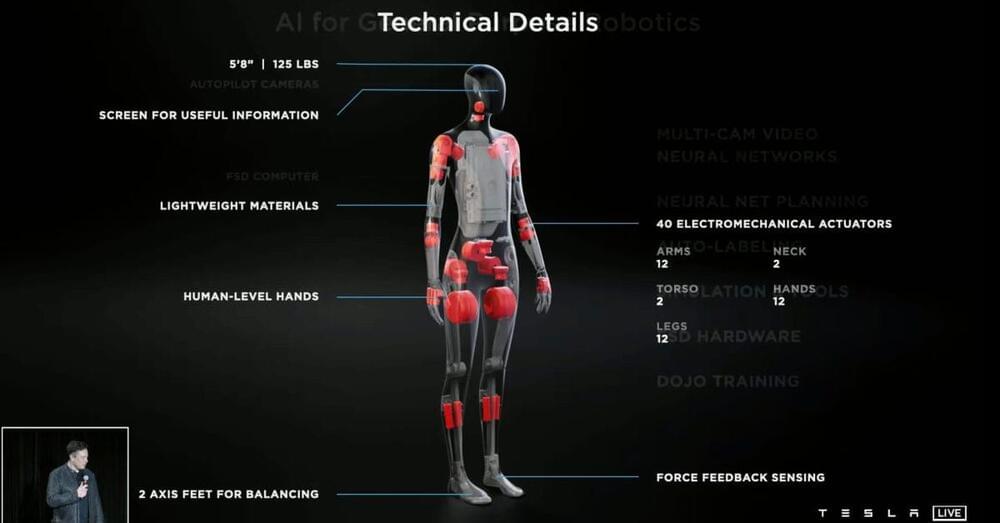

Tesla starts hiring roboticists for its ‘Tesla Bot’ humanoid robot project

Hurray.

Tesla has started to hire roboticists to build its recently announced “Tesla Bot,” a humanoid robot intended to become a new vehicle for its AI technology.

When Elon Musk explained the rationale behind Tesla Bot, he argued that Tesla was already making most of the components needed to create a humanoid robot equipped with artificial intelligence.

The automaker’s computer vision system developed for self-driving cars could be leveraged for use in the robot, which could also use things like Tesla’s battery system and suite of sensors.

This autonomous Tesla HGV brings ultra-futurism to Elon’s semi dreams

A Tesla semi-truck with very Tesla-worthy aesthetics, highlighted by a contoured yet sharp design language that in a way reminds me of the iPhone 12!

Tesla’s visionary Semi all-electric truck powered by four independent motors on the rear is scheduled for production in 2022. The semi is touted to be the safest, most comfortable truck with an acceleration of 0–60 mph in just 20 seconds and a range of 300–500 miles. While the prototype version looks absolutely badass, how the final version will look is anybody’s guess.

Stingray refuels Advanced Hawkeye in latest aerial trials


The Boeing-owned test Stingray, MQ-25 T1, passed fuel to an E-2D airborne early warning and control (AEW&C) receiver aircraft flown by the US Navy’s (USN’s) Air Test and Evaluation Squadron VX-20 during the event the day prior to the announcement.

“During a test flight from MidAmerica St Louis Airport on 18 August, pilots from VX-20 conducted a successful wake survey behind MQ-25 T1 to ensure performance and stability before making contact with T1’s aerial refuelling drogue. The E-2D received fuel from T1’s aerial refuelling store during the flight,” Boeing said.

This first contact for the Stingray unmanned tanker with an Advanced Hawkeye receiver aircraft came nearly three months after the first aerial refuelling test was performed on 4 June with a Boeing F/A-18F Super Hornet receiver. Both the Advanced Hawkeye and Super Hornet flights were conducted at operationally relevant speeds and altitudes, with both receiver aircraft performing manoeuvres in close proximity to the Stingray.

Cerebras Systems Announces World’s First Brain-Scale Artificial Intelligence Solution

Technology Breakthroughs Enable Training of 120 Trillion Parameters on Single CS-2, Clusters of up to 163 Million Cores with Near Linear Scaling, Push Button Cluster Configuration, Unprecedented Sparsity Acceleration.

For more information, please visit http://cerebras.net/product/.

About Cerebras Systems.

Cerebras Systems is a team of pioneering computer architects, computer scientists, deep learning researchers, and engineers of all types. We have come together to build a new class of computer to accelerate artificial intelligence work by three orders of magnitude beyond the current state of the art. The CS-2 is the fastest AI computer in existence. It contains a collection of industry firsts, including the Cerebras Wafer Scale Engine (WSE-2). The WSE-2 is the largest chip ever built. It contains 2.6 trillion transistors and covers more than 46,225 square millimeters of silicon. The largest graphics processor on the market has 54 billion transistors and covers 815 square millimeters. In artificial intelligence work, large chips process information more quickly, producing answers in less time. As a result, neural networks that in the past took months to train can now train in minutes on the Cerebras CS-2 powered by the WSE-2.
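To put the chip figures quoted above side by side, here is a minimal Python sketch of the arithmetic. It uses only the four numbers from the paragraph (2.6 trillion and 54 billion transistors, 46,225 and 815 square millimeters); the variable names are illustrative, and treating "more than 46,225" as exactly 46,225 is an assumption.

```python
# Figures as quoted in the Cerebras paragraph above.
WSE2_TRANSISTORS = 2.6e12   # 2.6 trillion
WSE2_AREA_MM2 = 46_225      # quoted as "more than 46,225"; taken as exact here (assumption)
GPU_TRANSISTORS = 54e9      # 54 billion
GPU_AREA_MM2 = 815

# How many times larger the WSE-2 is, by transistor count and by silicon area.
transistor_ratio = WSE2_TRANSISTORS / GPU_TRANSISTORS
area_ratio = WSE2_AREA_MM2 / GPU_AREA_MM2

# Transistors per square millimeter for each chip.
wse2_density = WSE2_TRANSISTORS / WSE2_AREA_MM2
gpu_density = GPU_TRANSISTORS / GPU_AREA_MM2

print(f"Transistor count ratio: {transistor_ratio:.1f}x")
print(f"Silicon area ratio:     {area_ratio:.1f}x")
print(f"WSE-2 density: {wse2_density / 1e6:.1f} million transistors per mm^2")
print(f"GPU density:   {gpu_density / 1e6:.1f} million transistors per mm^2")
```

On these numbers the WSE-2 has roughly 48 times the transistors on roughly 57 times the area, so the difference comes from sheer size rather than from packing transistors more densely.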