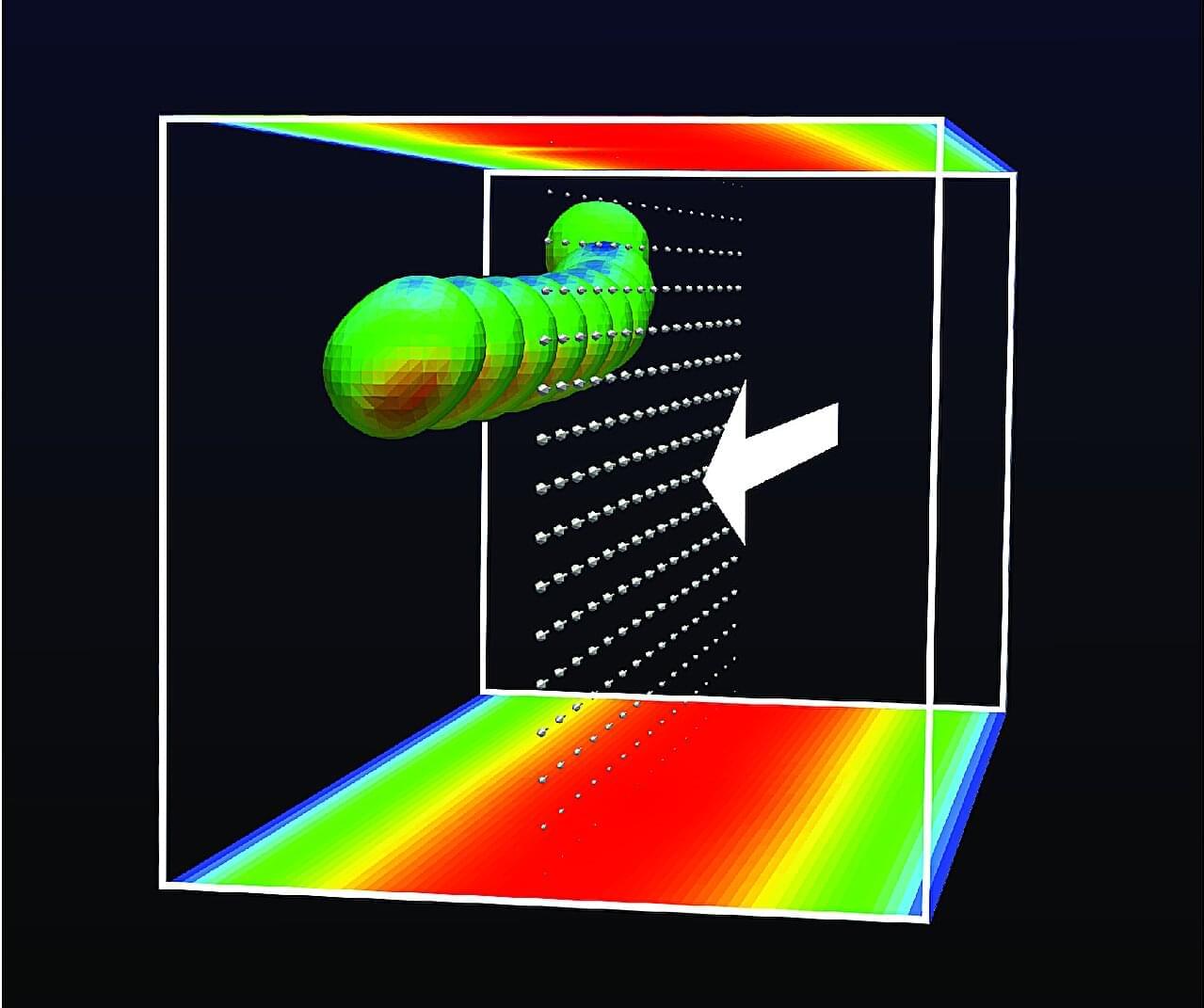

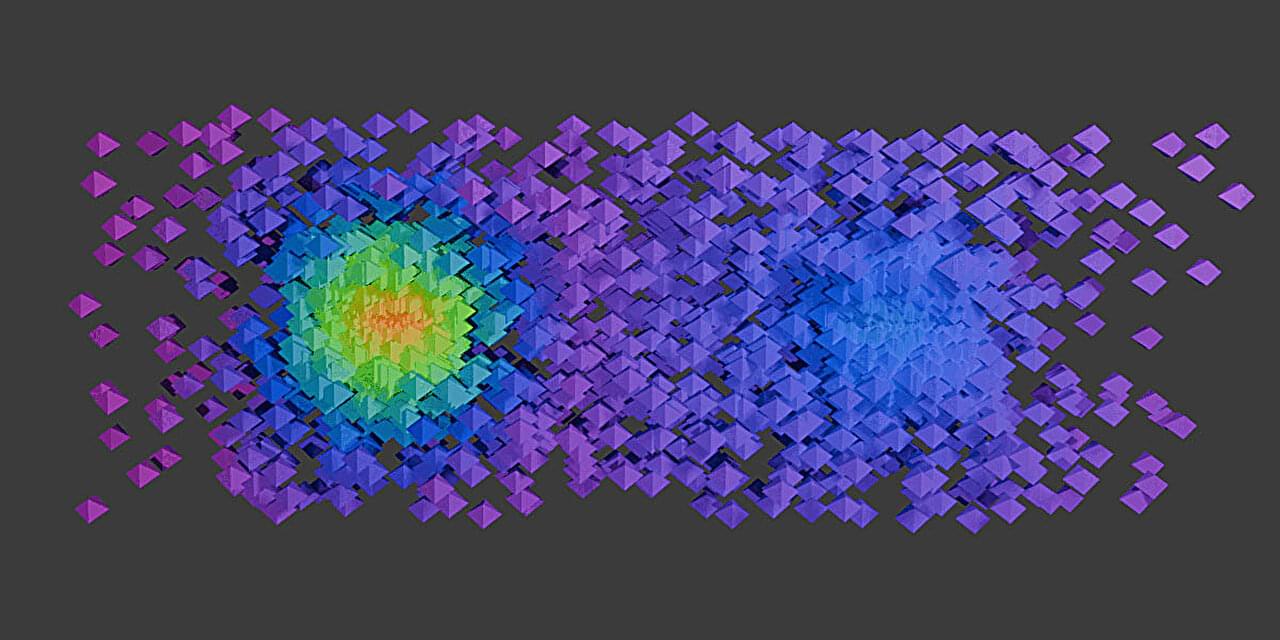

Researchers have discovered a novel criterion for sorting particles in microfluidic channels, paving the way for advancements in disease diagnostics and liquid biopsies. Using the supercomputer “Fugaku,” a joint team from the University of Osaka, Kansai University and Okayama University revealed that soft particles, such as biological cells, exhibit focusing patterns distinct from those of rigid particles.

The findings, published in the Journal of Fluid Mechanics, could enable next-generation microfluidic devices that exploit cell and particle deformability for highly efficient cell sorting, with biomedical applications such as early cancer detection.

Microfluidics involves manipulating fluids at a microscopic scale. Controlling particle movement within microchannels is crucial for cell sorting and diagnostics, which are expected to enable early cancer detection and treatment. While prior research concentrated on rigid particles, which typically focus near channel walls, the behavior of deformable particles remained largely unexplored.