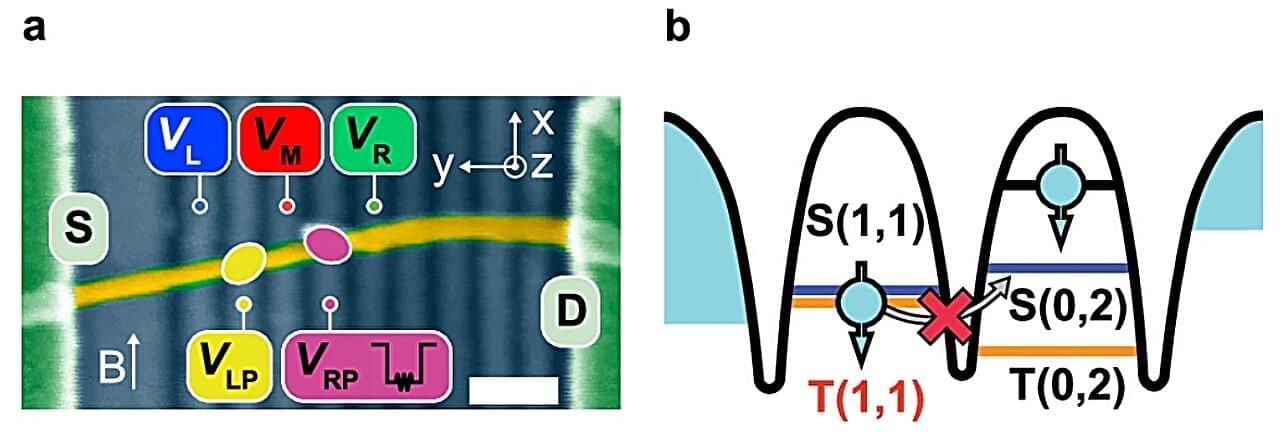

There are high hopes for quantum computers: they are expected to perform specific calculations much faster than current supercomputers and, therefore, to solve scientific and practical problems that are insurmountable for ordinary computers. The centerpiece of a quantum computer is the quantum bit, qubit for short, which can be realized in different ways, for instance using the energy levels of atoms or the spins of electrons.

When making such qubits, however, researchers face a dilemma. On the one hand, a qubit needs to be isolated from its environment as much as possible; otherwise, its quantum superpositions decay quickly and the quantum calculations are disturbed. On the other hand, one would like to drive qubits as fast as possible, analogous to the clock rate of classical bits, and that requires a strong interaction with the environment.

Normally, these two conditions cannot be fulfilled at the same time: the stronger interaction needed for a higher driving speed also makes the superpositions decay faster and, therefore, shortens the coherence time.