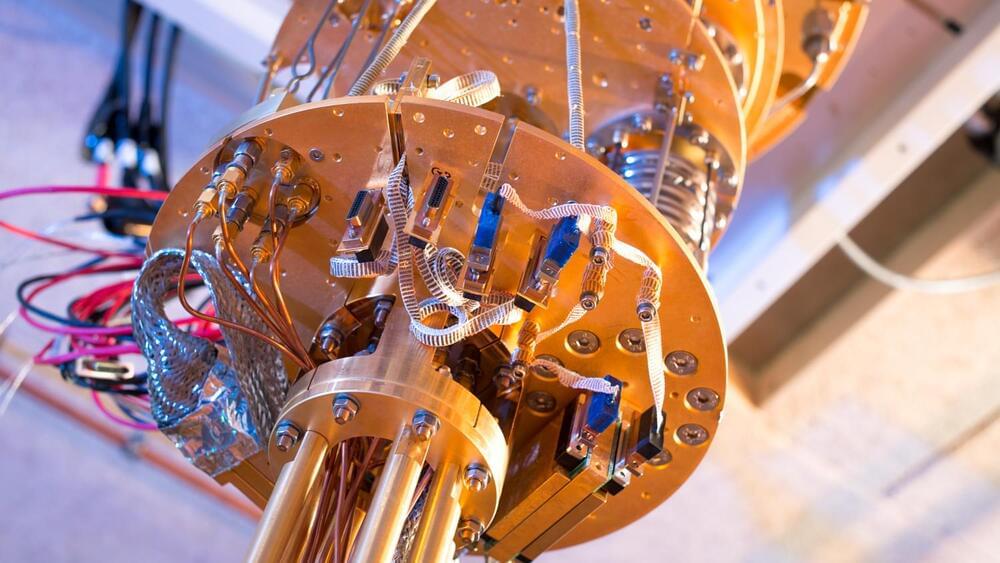

Microsoft today announced its roadmap for building its own quantum supercomputer, using the topological qubits the company’s researchers have been working on for quite a few years now. There are still plenty of intermediary milestones to be reached, but Krysta Svore, Microsoft’s VP of advanced quantum development, told us that the company believes it will take fewer than 10 years to build a quantum supercomputer with these qubits that can perform one million reliable quantum operations per second. That’s a new measurement Microsoft is introducing as the industry as a whole aims to move beyond the current era of noisy intermediate-scale quantum (NISQ) computing.


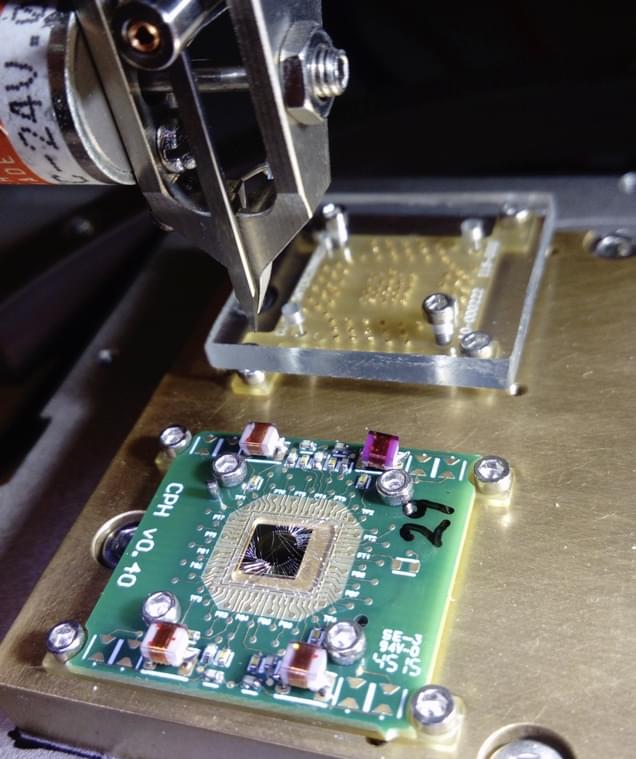

At its Ignite conference, Microsoft today put its stake in the ground and discussed its progress in building a quantum computer and giving developers tools to experiment with this new computing paradigm on their existing machines.

There’s a lot to untangle here, and few people will claim that they understand the details of quantum computing. What Microsoft has done, though, is focus on a different aspect of how quantum computing can work, and that may just allow it to get a jump on IBM, Google and other competitors that are also looking at this space. The main difference between Microsoft’s approach and theirs is that its system is based on advances in topology that the company has discussed before. Most of the theoretical work behind this comes from Fields Medal recipient Michael Freedman, who joined Microsoft Research in 1997, and his team.

“What topology does is that it gives you this ability to have much better fidelity,” Microsoft’s corporate vice president for quantum research Todd Holmdahl told me. “If you look at our competitors, some of them have three nines of fidelity and we could be at a thousand or ten thousand times that. That means a logical qubit, we could potentially implement it with 10 physical qubits.”

What the team essentially did is use Freedman’s theories to implement, at the physical level, the error correction that’s so central to quantum computing. I’m not going to pretend I really understand what topological qubits are, but they are essentially harder to disturb than conventional qubits (and in quantum computing, even at the lowest currently achievable temperatures, you always need to account for some noise that can disturb the system’s state).
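To put Holmdahl’s numbers in perspective: “three nines” means a fidelity of 99.9%, or an error rate of roughly one in a thousand. The minimal sketch below assumes that “a thousand or ten thousand times that” refers to a proportionally lower error rate, which would work out to six or seven nines. It also uses a textbook repetition code, a toy stand-in and not Microsoft’s topological scheme, to illustrate why a lower physical error rate lets a small, fixed number of physical qubits yield a far more reliable logical qubit. The function names are hypothetical and chosen only for illustration.

```python
import math


def nines(fidelity: float) -> float:
    """Number of 'nines' in a fidelity, e.g. 0.999 -> about 3."""
    return -math.log10(1.0 - fidelity)


def improved_fidelity(fidelity: float, factor: float) -> float:
    """Fidelity after dividing the error rate (1 - fidelity) by `factor`.

    Assumption: "a thousand times that" is read as a 1,000x lower error rate.
    """
    return 1.0 - (1.0 - fidelity) / factor


def repetition_code_error(p: float, n: int) -> float:
    """Toy logical error rate of an n-qubit repetition code (n must be odd).

    Majority voting fails when more than half of the n physical qubits flip,
    assuming independent bit-flip errors with probability p. This is a
    textbook toy code, not Microsoft's topological approach.
    """
    if n % 2 == 0:
        raise ValueError("use an odd number of physical qubits")
    return sum(
        math.comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(n // 2 + 1, n + 1)
    )


if __name__ == "__main__":
    base = 0.999  # "three nines" of fidelity
    for factor in (1, 1_000, 10_000):
        f = improved_fidelity(base, factor)
        print(f"{factor:>6}x better: error rate {1 - f:.0e}, {nines(f):.1f} nines")

    # Same small toy code, two different physical error rates.
    for p in (1e-3, 1e-6):
        print(f"p={p:.0e}, n=9: logical error ~ {repetition_code_error(p, 9):.1e}")
```

With a 1,000x lower physical error rate, the same nine-qubit toy code’s logical error rate drops by many orders of magnitude, which is the intuition behind needing fewer physical qubits per logical qubit. Real quantum error-correcting codes must also handle phase errors, so actual overheads are higher than this toy suggests.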