“We’re looking at a race, a race between China, between IBM, Google, Microsoft, Honeywell,” Kaku said. “All the big boys are in this race to create a workable, operationally efficient quantum computer. Because the nation or company that does this will rule the world economy.”

The economy is not the only thing quantum computing could affect. Cleveland Clinic has a quantum computer on site, and its Chief Research Officer, Dr. Serpil Erzurum, believes the technology could revolutionize health care.

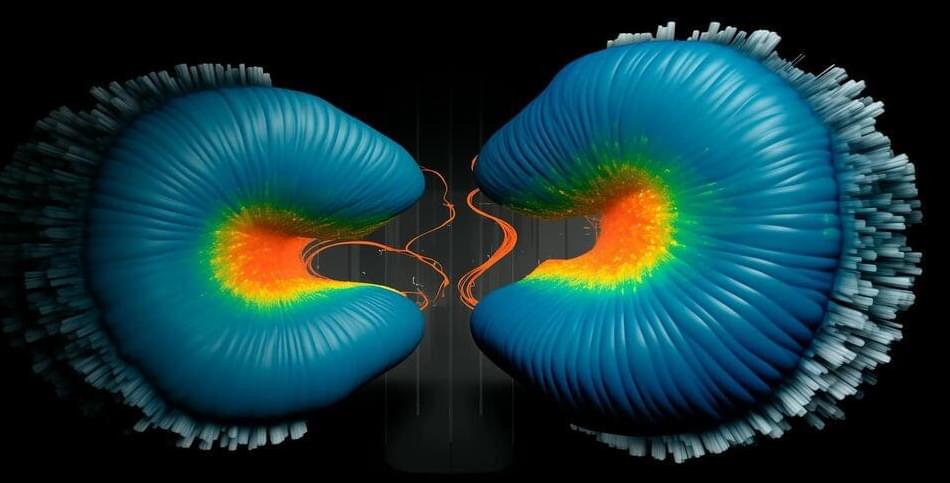

Quantum computers could potentially model the behavior of proteins, the molecules that regulate all life, Erzurum said. Proteins alter their shape to change their function in ways that are too complex to follow, but quantum computing could deepen that understanding.