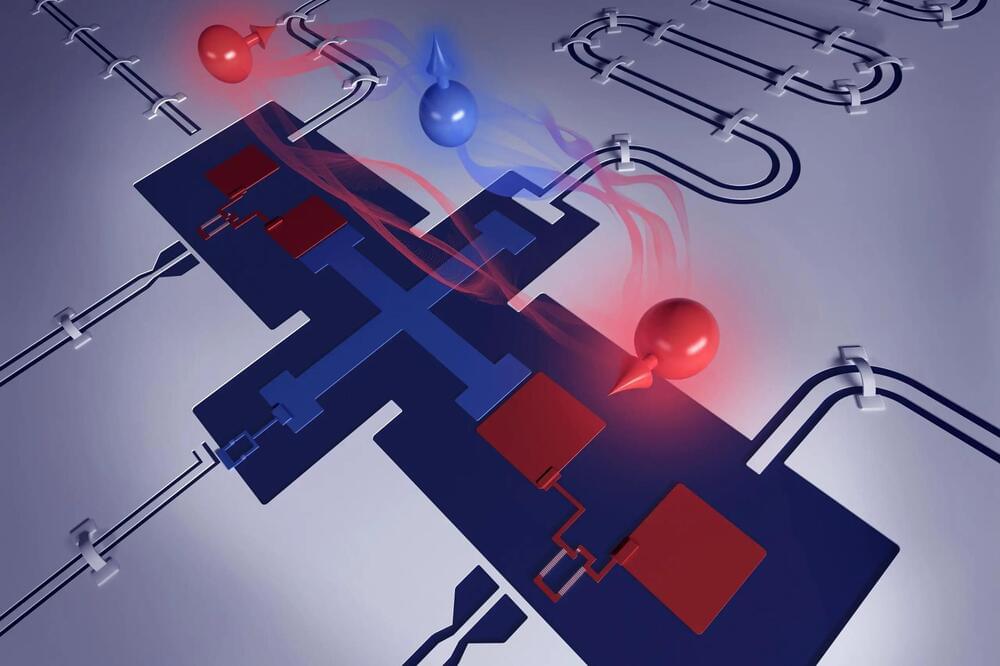

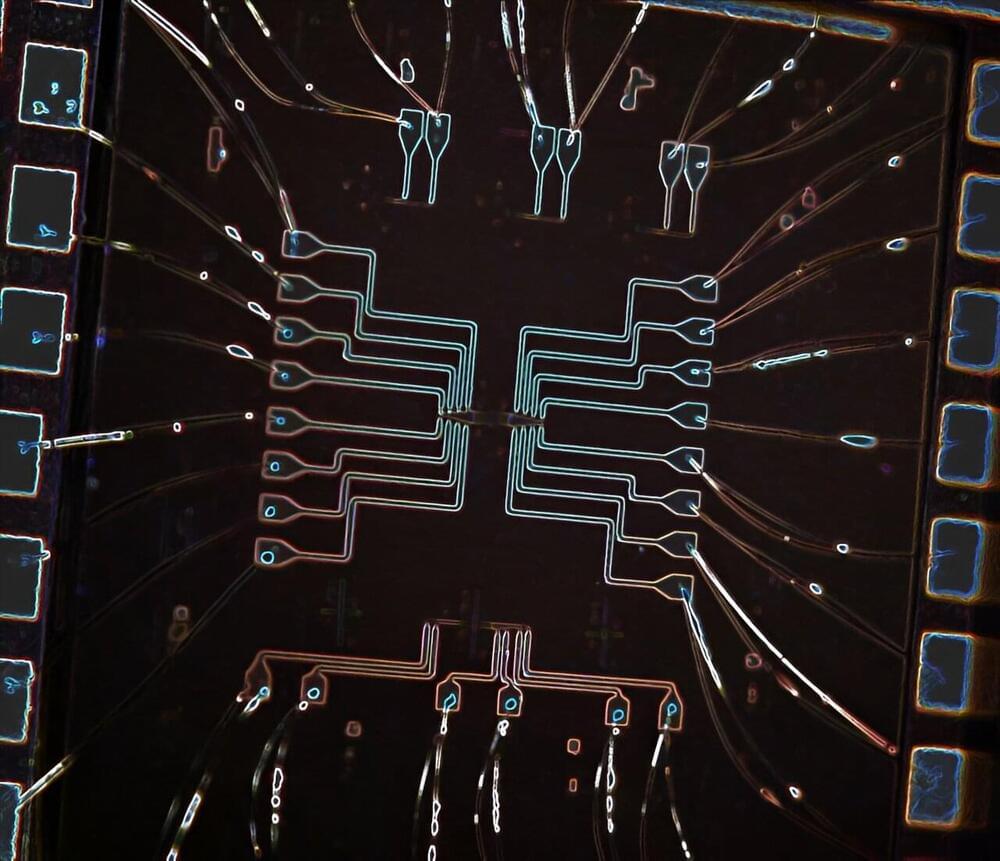

A complete quantum computing system could be as large as a two-car garage when one factors in all the paraphernalia required for smooth operation. But the entire processing unit, made of qubits, would barely cover the tip of your finger.

Today’s smartphones, laptops and supercomputers contain billions of tiny electronic processing elements called transistors that are either switched on or off, signifying a 1 or 0, the binary language computers use to express and calculate all information. Qubits are essentially quantum transistors. They can exist in two well-defined states—say, up and down—which represent the 1 and 0. But they can also occupy both of those states at the same time, which adds to their computing prowess. And two—or more—qubits can be entangled, a strange quantum phenomenon where particles’ states correlate even if the particles lie across the universe from each other. This ability completely changes how computations can be carried out, and it is part of what makes quantum computers so powerful, says Nathalie de Leon, a quantum physicist at Princeton University. Furthermore, simply observing a qubit can change its behavior, a feature that de Leon says might create even more of a quantum benefit. “Qubits are pretty strange. But we can exploit that strangeness to develop new kinds of algorithms that do things classical computers can’t do,” she says.

Scientists are trying a variety of materials to make qubits. They range from nanosized crystals to defects in diamond to particles that are their own antiparticles. Each comes with pros and cons. “It’s too early to call which one is the best,” says Marina Radulaski of the University of California, Davis. De Leon agrees. Let’s take a look.