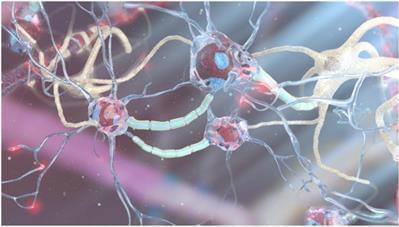

The Internet comprises a decentralized global system that serves humanity’s collective effort to generate, process, and store data, most of which is handled by the rapidly expanding cloud. A stable, secure, real-time system may allow for interfacing the cloud with the human brain. One promising strategy for enabling such a system, denoted here as a “human brain/cloud interface” (“B/CI”), would be based on technologies referred to here as “neuralnanorobotics.” Future neuralnanorobotics technologies are anticipated to facilitate accurate diagnoses and eventual cures for the ∼400 conditions that affect the human brain. Neuralnanorobotics may also enable a B/CI with controlled connectivity between neural activity and external data storage and processing, via the direct monitoring of the brain’s ∼86 × 10⁹ neurons and ∼2 × 10¹⁴ synapses. Subsequent to navigating the human vasculature, three species of neuralnanorobots (endoneurobots, gliabots, and synaptobots) could traverse the blood–brain barrier (BBB), enter the brain parenchyma, ingress into individual human brain cells, and autoposition themselves at the axon initial segments of neurons (endoneurobots), within glial cells (gliabots), and in intimate proximity to synapses (synaptobots). They would then wirelessly transmit up to ∼6 × 10¹⁶ bits per second of synaptically processed and encoded human–brain electrical information via auxiliary nanorobotic fiber optics (30 cm³) with the capacity to handle up to 10¹⁸ bits/sec and provide rapid data transfer to a cloud-based supercomputer for real-time brain-state monitoring and data extraction. A neuralnanorobotically enabled human B/CI might serve as a personalized conduit, allowing persons to obtain direct, instantaneous access to virtually any facet of cumulative human knowledge. Other anticipated applications include myriad opportunities to improve education, intelligence, entertainment, traveling, and other interactive experiences.
A specialized application might be the capacity to engage in fully immersive experiential/sensory experiences, including what is referred to here as “transparent shadowing” (TS). Through TS, individuals might experience episodic segments of the lives of other willing participants (locally or remotely) to, hopefully, encourage and inspire improved understanding and tolerance among all members of the human family.
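
As a rough sanity check on the orders of magnitude quoted above (about 86 × 10^9 neurons, 2 × 10^14 synapses, up to 6 × 10^16 bits/sec of brain data, and up to 10^18 bits/sec of fiber-optic capacity), the following sketch derives a few implied ratios. The constant and function names are illustrative only, and the per-synapse figure assumes, purely for illustration, that the total data rate is spread evenly across all synapses; the text itself does not state how the rate is distributed.

```python
# Approximate figures quoted in the text (all are order-of-magnitude estimates).
NEURONS = 86e9               # ~86 x 10^9 neurons
SYNAPSES = 2e14              # ~2 x 10^14 synapses
BRAIN_RATE_BPS = 6e16        # up to ~6 x 10^16 bits/sec of synaptic information
FIBER_CAPACITY_BPS = 1e18    # up to 10^18 bits/sec fiber-optic capacity


def derived_ratios():
    """Return ratios implied by the quoted figures (illustrative only)."""
    return {
        # Average number of synapses per neuron.
        "synapses_per_neuron": SYNAPSES / NEURONS,
        # Assumption: the total rate is divided evenly over all synapses.
        "bits_per_synapse_per_sec": BRAIN_RATE_BPS / SYNAPSES,
        # Fraction of the stated fiber capacity used at the peak data rate.
        "fiber_utilization": BRAIN_RATE_BPS / FIBER_CAPACITY_BPS,
        # How many times the fiber capacity exceeds the peak data rate.
        "capacity_headroom": FIBER_CAPACITY_BPS / BRAIN_RATE_BPS,
    }


if __name__ == "__main__":
    for name, value in derived_ratios().items():
        print(f"{name}: {value:.4g}")
```

Under these assumptions the figures imply roughly 2,300 synapses per neuron, an average of about 300 bits/sec per synapse, and a peak load of about 6% of the stated fiber capacity, which leaves a margin of more than an order of magnitude.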

“We’ll have nanobots that… connect our neocortex to a synthetic neocortex in the cloud… Our thinking will be a… biological and non-biological hybrid.”

— Ray Kurzweil, TED 2014