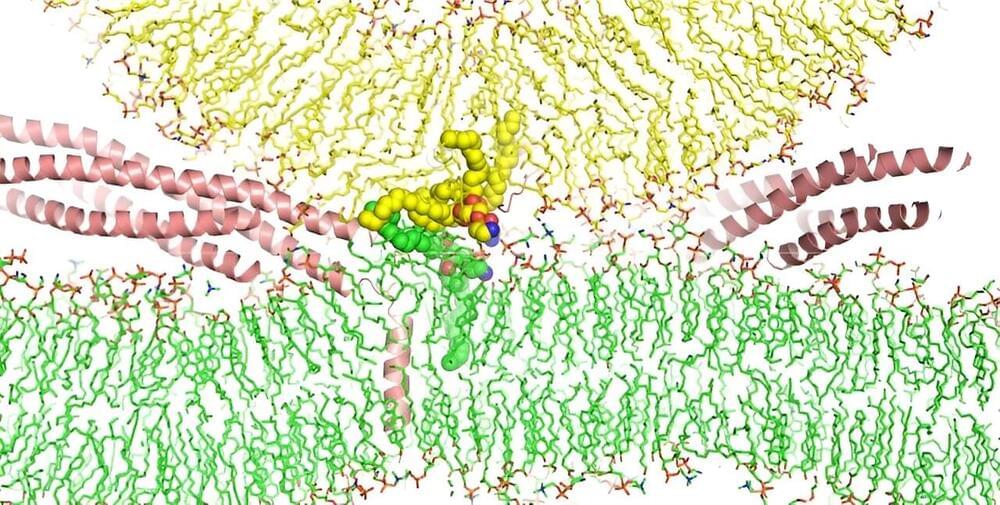

An intricate simulation performed by UT Southwestern Medical Center researchers using one of the world’s most powerful supercomputers sheds new light on how proteins called SNAREs cause biological membranes to fuse.

Their findings, reported in the Proceedings of the National Academy of Sciences, suggest a new mechanism for this ubiquitous process and could eventually lead to new treatments for conditions in which membrane fusion is thought to go awry.

“Biology textbooks say that SNAREs bring membranes together to cause fusion, and many people were happy with that explanation. But not me, because membranes brought into contact normally do not fuse. Our simulation goes deeper to show how this important process takes place,” said study leader Jose Rizo-Rey (“Josep Rizo”), Ph.D., Professor of Biophysics, Biochemistry, and Pharmacology at UT Southwestern.