Using this technique, even a non-conducting material like glass could someday be turned into a conductor, researchers believe.

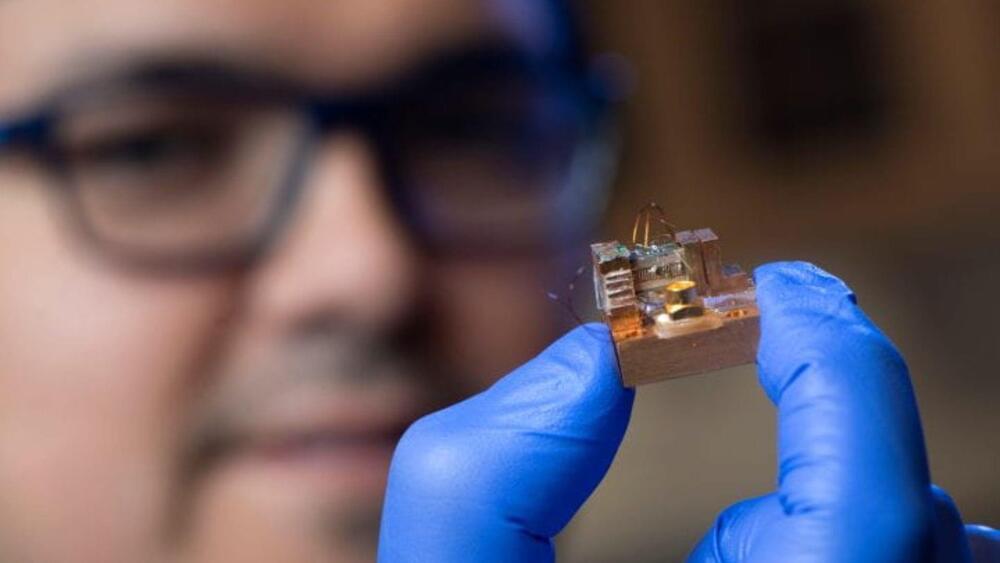

A collaboration between scientists at the University of California, Irvine (UCI) and Los Alamos National Laboratory (LANL) has developed a method that converts everyday materials into conductors that can be used to build quantum computers, a press release said.

Today’s ubiquitous computing devices are built from silicon, a semiconductor. Under certain conditions, silicon behaves like a conductor, but it has limitations that restrict its ability to compute larger numbers. The world’s fastest supercomputers are assembled from silicon-based components but are said to be slower than quantum computers.

Quantum computers do not have the same limitations as silicon-based computing, and prototypes being built today can compute in seconds what supercomputers would take years to complete. This could open up a whole new level of computing prowess if quantum computers could be built and operated with materials that are easier to work with. Researchers at UCI have been working to determine how high-quality quantum materials can be obtained. They have now found a simpler way to make them from everyday materials.