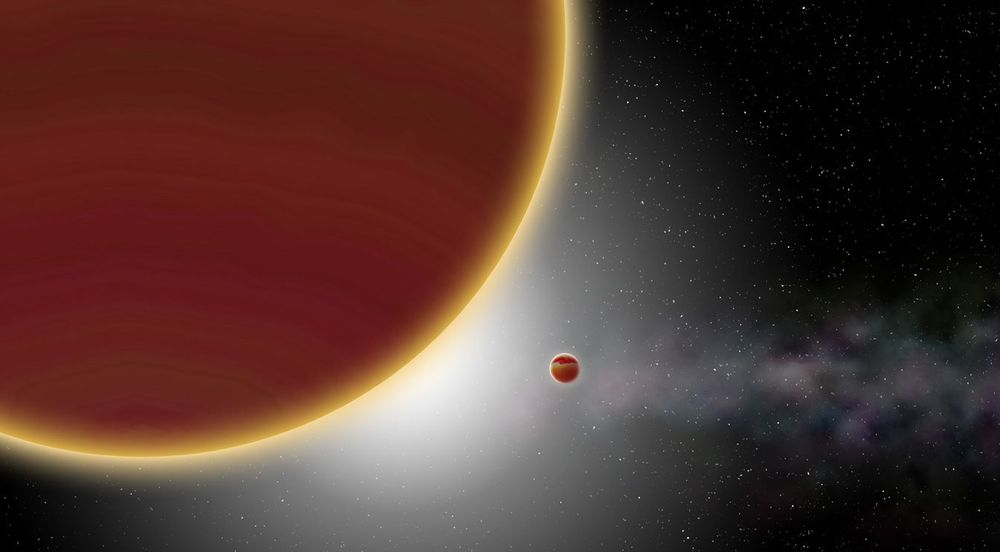

Great new podcast episode on our strange planet Mercury with planetary geophysicist Catherine Johnson, who eloquently explains what's known about our tiny innermost planet and its many remaining mysteries. Please have a listen.

Guest Catherine Johnson, a planetary geophysicist at the University of British Columbia in Vancouver, discusses this bizarre little world: the innermost planet in our solar system. It is so close to our Sun that its surface temperatures can hit 800 °F. Yet, surprisingly, its poles harbor enough water ice to completely bury a major metropolis. Some have even argued that Mercury may once have been habitable. Where it formed remains a mystery, but it does have a weak magnetic field, an outsized iron core, and one of the largest impact basins in the solar system. A European mission is currently en route and is scheduled to enter orbit around the planet in 2025.