Battering RAM lets attackers bypass Intel SGX and AMD SEV-SNP with a $50 DDR4 interposer.

A new method and proof-of-concept tool called EDR-Freeze demonstrates that evading security solutions is possible from user mode with Microsoft’s Windows Error Reporting (WER) system.

The technique eliminates the need for a vulnerable driver and puts security agents such as endpoint detection and response (EDR) tools into a state of hibernation.

By using the WER framework together with the MiniDumpWriteDump API, security researcher TwoSevenOneThree (Zero Salarium) found a way to indefinitely suspend the activity of EDR and antivirus processes.

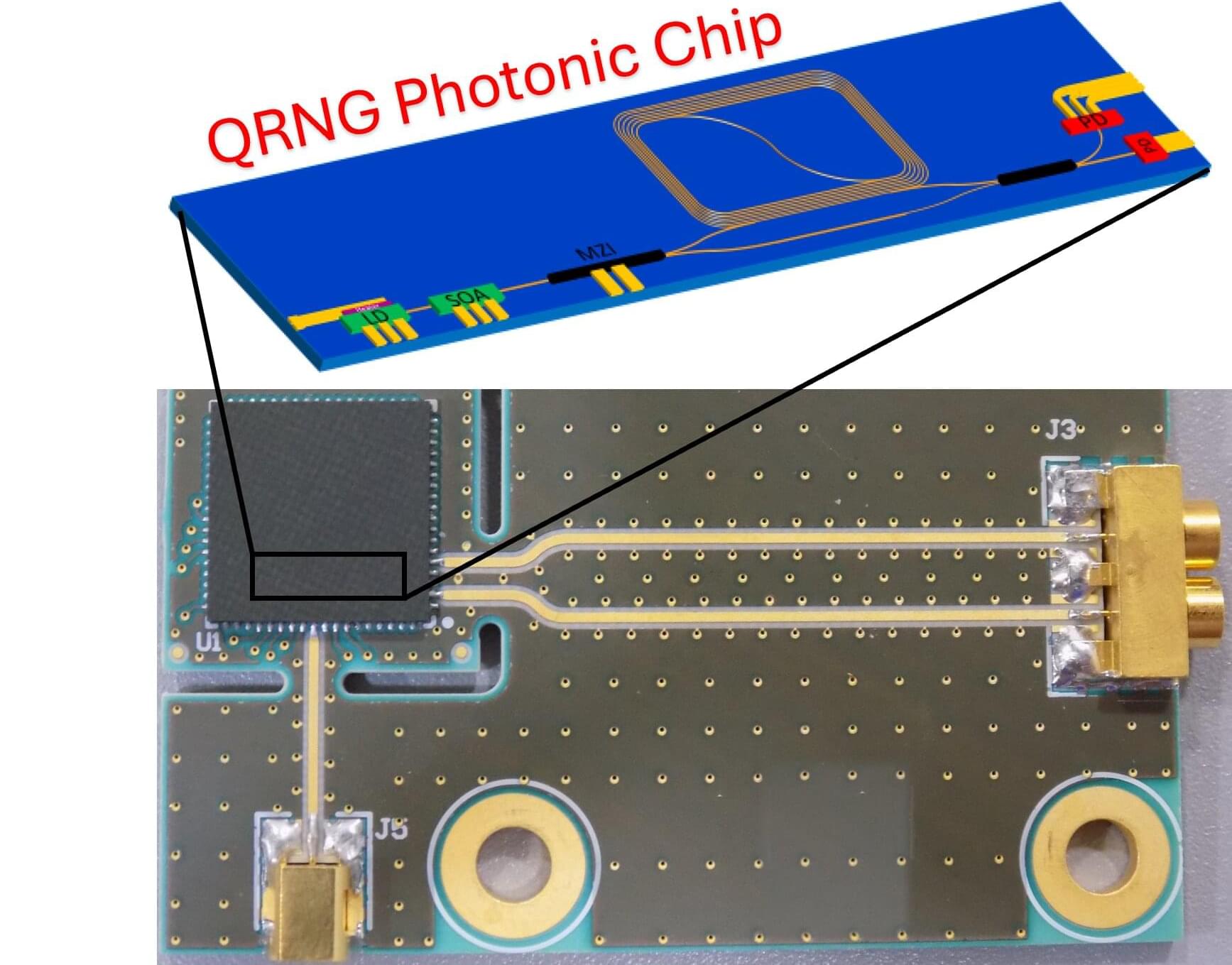

Researchers have developed a chip-based quantum random number generator that provides high-speed, high-quality operation on a miniaturized platform. This advance could help move quantum random number generators closer to being built directly into everyday devices, where they could strengthen security without sacrificing speed.

Imagine the benefits if the entire internet got a game-changing upgrade to speed and security. This is the promise of the quantum internet—an advanced system that uses single photons to operate. Researchers at Tohoku University have developed a new photonic router that can direct single and quantum entangled photons with unprecedented levels of efficiency. This advancement in quantum optics brings us closer to quantum networks and next-generation photonic quantum technologies becoming an everyday reality.

The findings were published in Advanced Quantum Technologies on September 2, 2025.

Photons are the backbone of many emerging quantum applications, from secure communication to powerful quantum computers. To make these technologies practical, photons must be routed quickly and reliably, without disturbing the delicate quantum states they carry.

Two Supermicro BMC flaws (CVE-2025-7937 and CVE-2025-6198) bypass signature checks, risking persistent server compromise.

The U.S. Secret Service on Tuesday said it took down a network of electronic devices located across the New York tri-state area that were used to threaten U.S. government officials and posed an imminent threat to national security.

“This protective intelligence investigation led to the discovery of more than 300 co-located SIM servers and 100,000 SIM cards across multiple sites,” the Secret Service said.

The devices were concentrated within a 35-mile (56 km) radius of the global meeting of the United Nations General Assembly in New York City. An investigation into the incident has been launched by the Secret Service’s Advanced Threat Interdiction Unit.